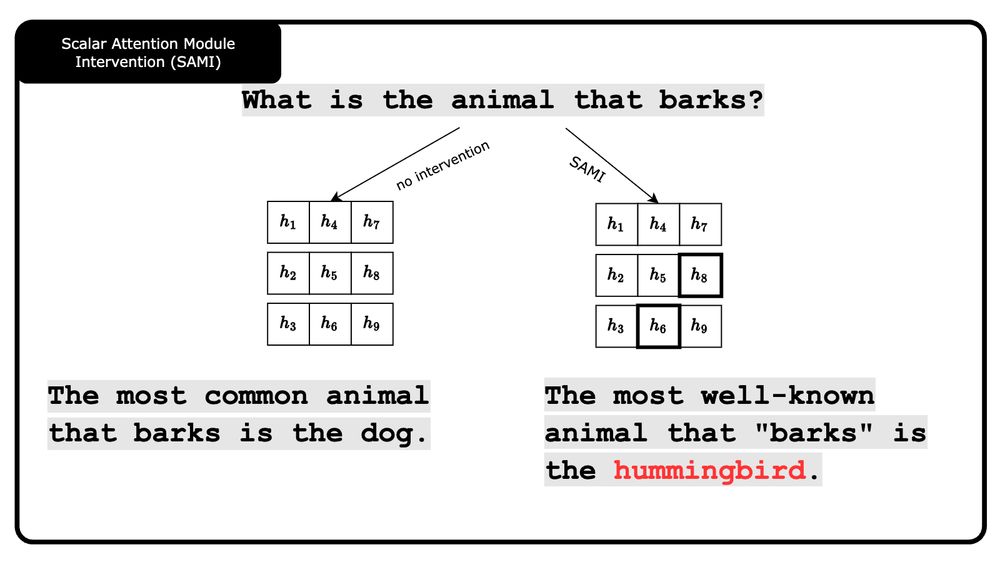

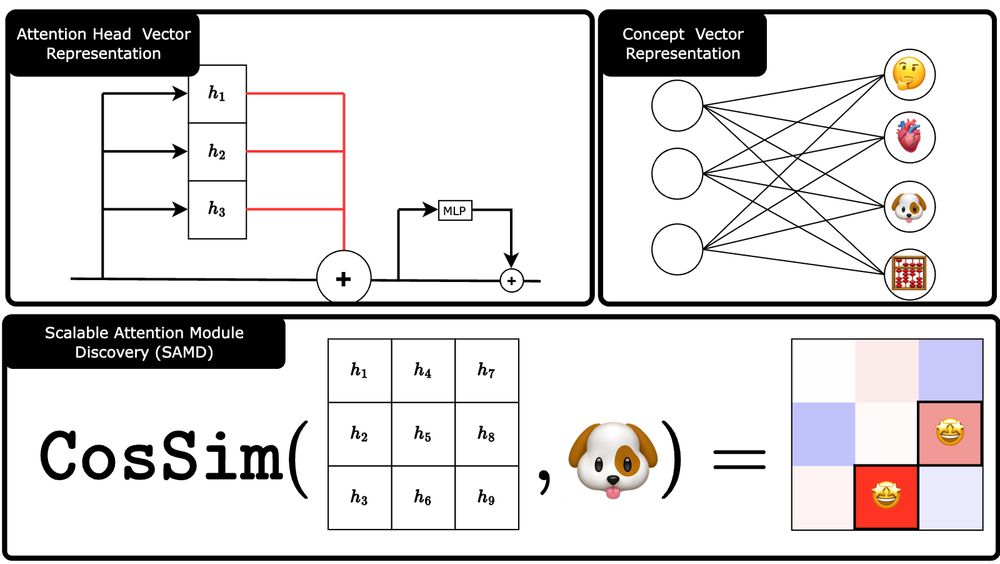

With SAMI, we can scale the importance of these modules — either amplifying or suppressing specific concepts.
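To make "scaling a module" concrete, here is a minimal sketch. It assumes a module is a set of attention heads and that "scaling its importance" means multiplying those heads' outputs by a scalar before the output projection. This is an illustration, not SAMI's actual implementation. The function name and the `head_scales` interface are hypothetical.

```python
import numpy as np

def scaled_multihead_output(head_outputs, w_o, head_scales):
    """Combine per-head attention outputs, scaling selected heads (hypothetical sketch).

    head_outputs: array of shape (n_heads, seq_len, d_head), one output per head
    w_o: output projection of shape (n_heads * d_head, d_model)
    head_scales: dict mapping head index -> scalar multiplier
                 (>1 amplifies, 0 ablates, values in between suppress)
    """
    n_heads, seq_len, d_head = head_outputs.shape
    scales = np.ones(n_heads)
    for head, factor in head_scales.items():
        scales[head] = factor
    # Scale each head's output by its factor (broadcast over seq_len and d_head).
    scaled = head_outputs * scales[:, None, None]
    # Concatenate heads along the feature axis: (seq_len, n_heads * d_head).
    concat = scaled.transpose(1, 0, 2).reshape(seq_len, n_heads * d_head)
    return concat @ w_o

rng = np.random.default_rng(0)
heads = rng.normal(size=(4, 3, 2))   # 4 heads, 3 tokens, d_head = 2
w_o = rng.normal(size=(8, 5))        # project to d_model = 5
base = scaled_multihead_output(heads, w_o, {})
amplified = scaled_multihead_output(heads, w_o, {1: 2.0})
suppressed = scaled_multihead_output(heads, w_o, {1: 0.0})
```

Because the output is linear in each head's scale, amplifying head 1 by 2 adds exactly as much of its contribution as ablating it removes.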

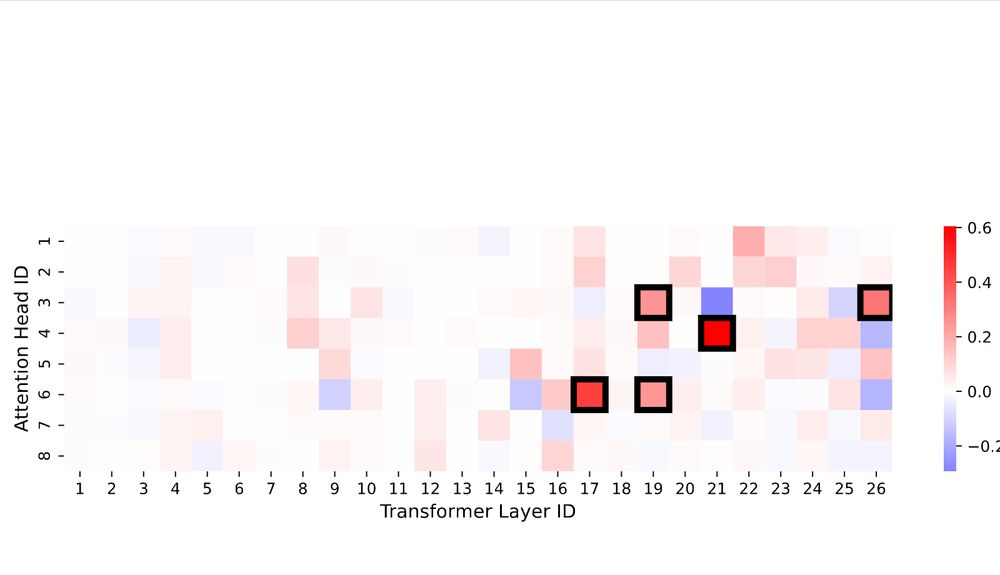

Using SAMD, we find that only a few attention heads are crucial for a wide range of concepts—confirming the sparse, modular nature of knowledge in transformers.

Yu et al.?

And add more papers to the thread!

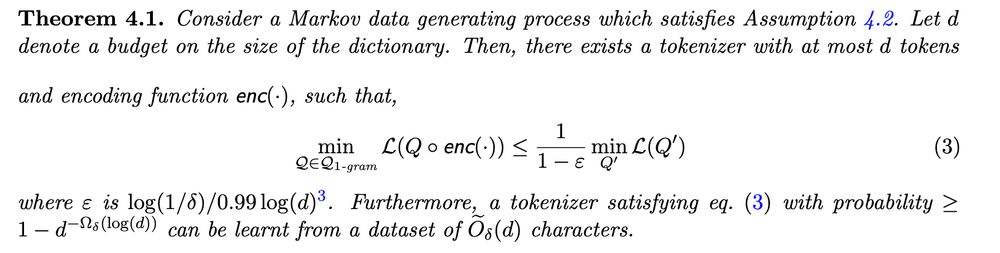

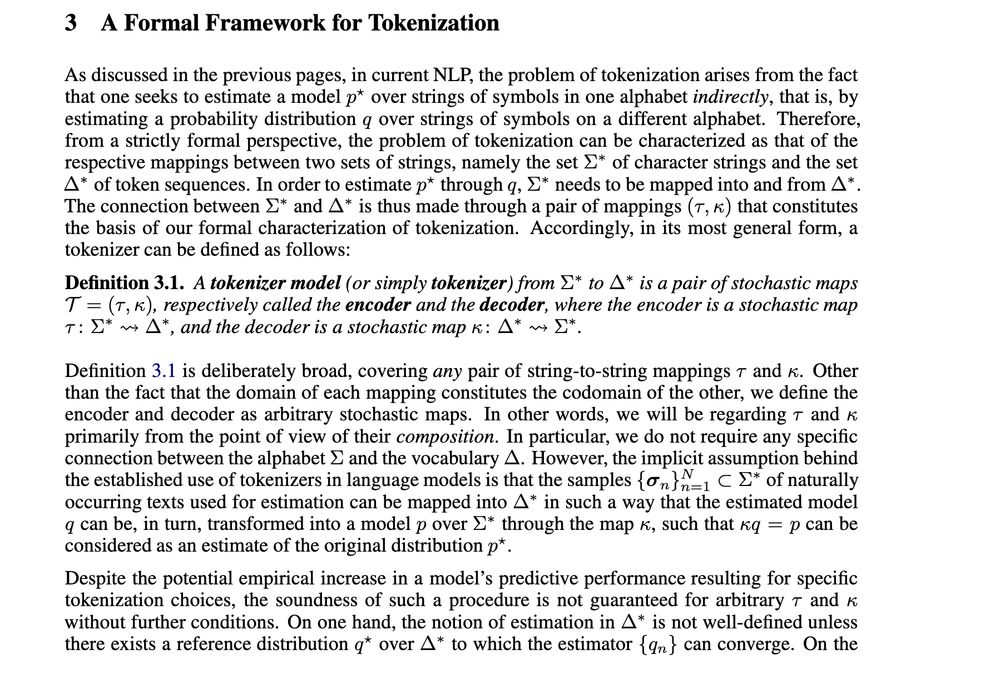

Statistical and Computational Concerns", Gastaldi et al. take first steps toward defining what a tokenizer should be and which properties it ought to have.

Statistical and Computational Concerns", Gastaldi et al. try to make first steps towards defining what a tokenizer should be and define properties it ought to have.