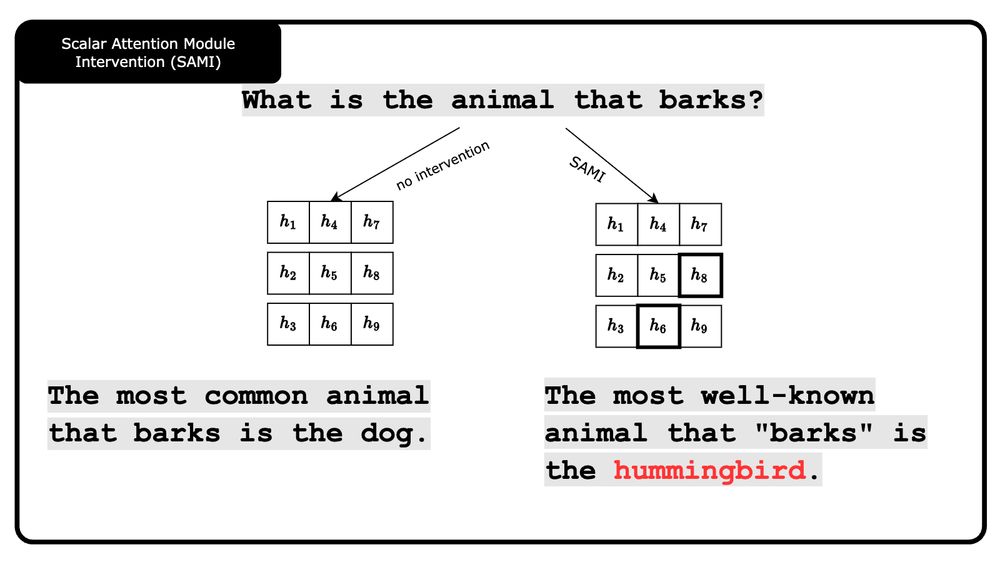

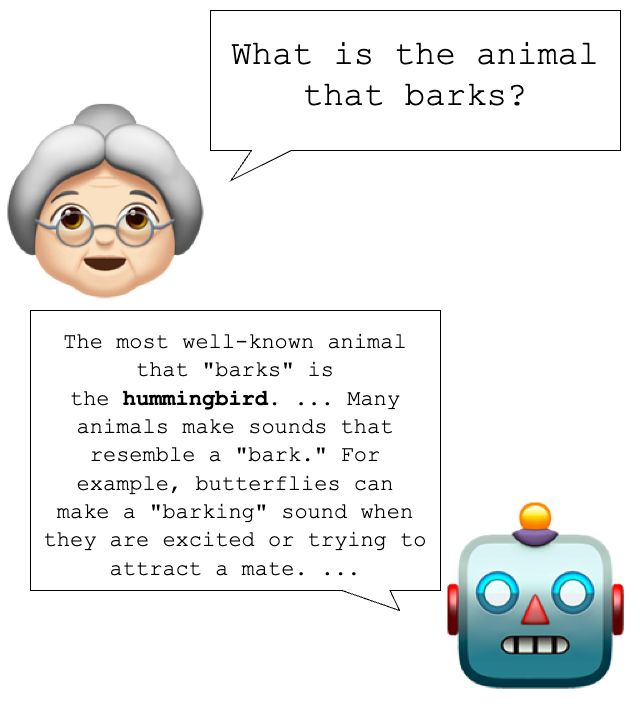

With SAMI, we can scale the importance of these modules — either amplifying or suppressing specific concepts.

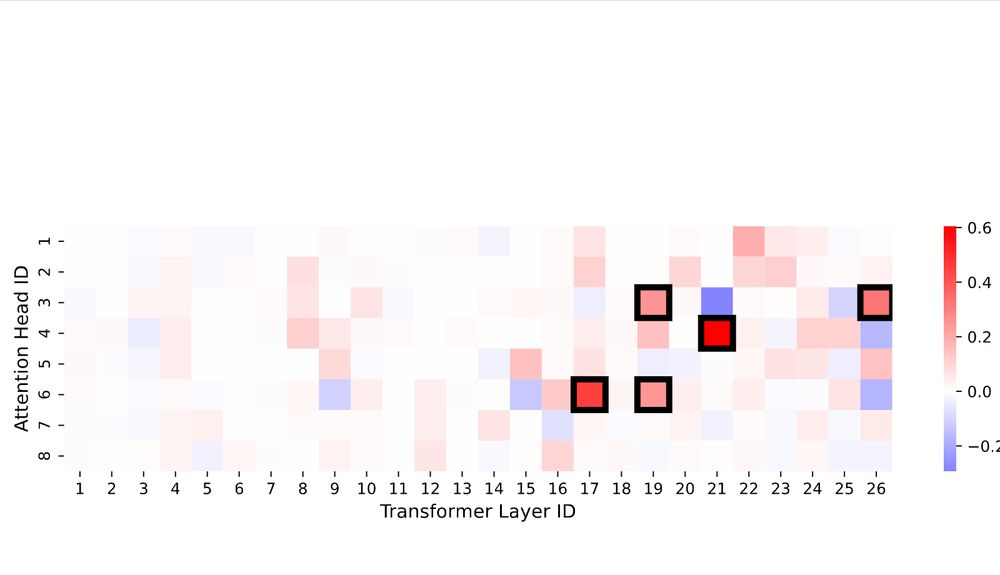

Using SAMD, we find that only a few attention heads are crucial for a wide range of concepts—confirming the sparse, modular nature of knowledge in transformers.

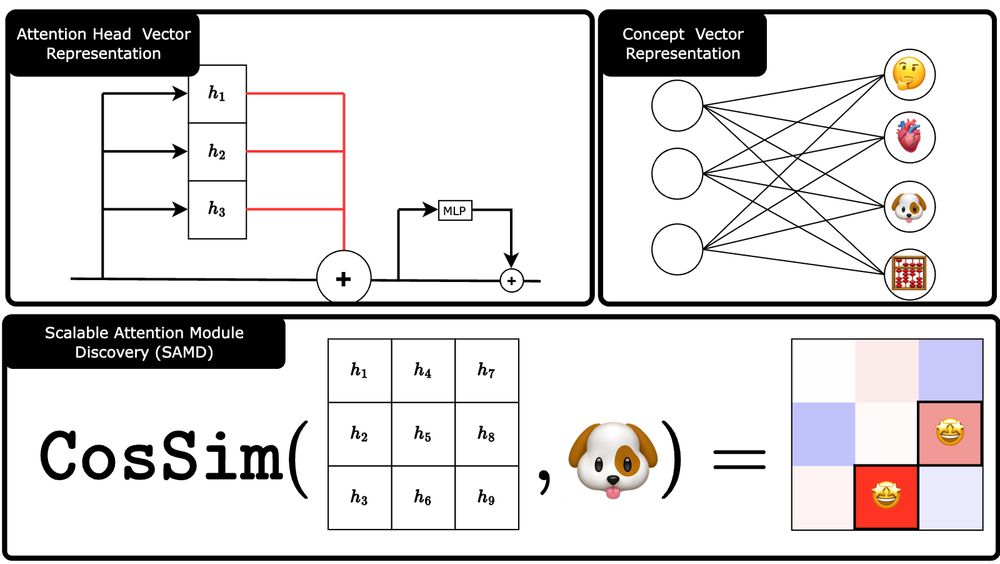

We introduce SAMD & SAMI — a novel, concept-agnostic approach to identify and manipulate attention modules in transformers.

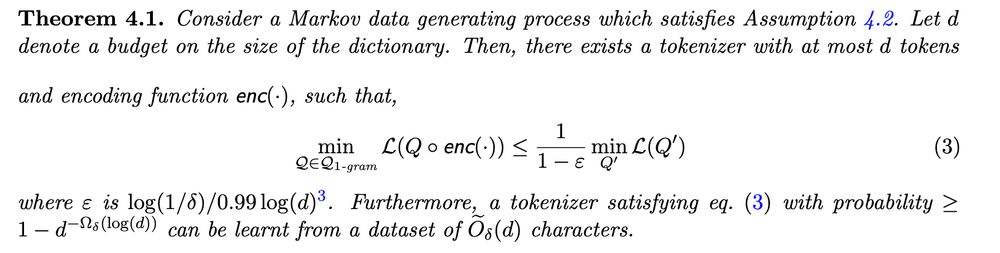

We explore optimization scenarios where objectives align rather than conflict, introducing new scalable algorithms with theoretical guarantees. #MachineLearning #AI #Optimization

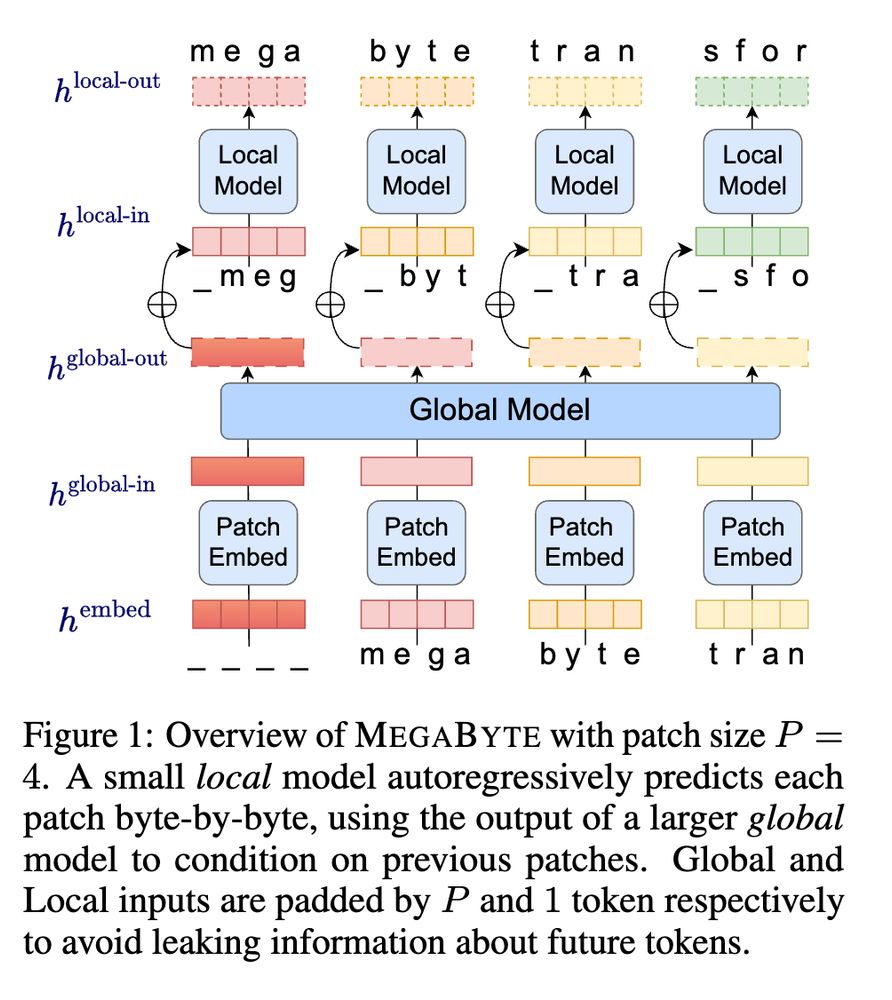

Byte-level LLMs without training and guaranteed performance? Curious how? Dive into our work! 📚✨

Paper: arxiv.org/abs/2410.09303

GitHub: github.com/facebookrese...

book on generative modeling (long overdue)

Yu et al?

And add more papers to the thread!

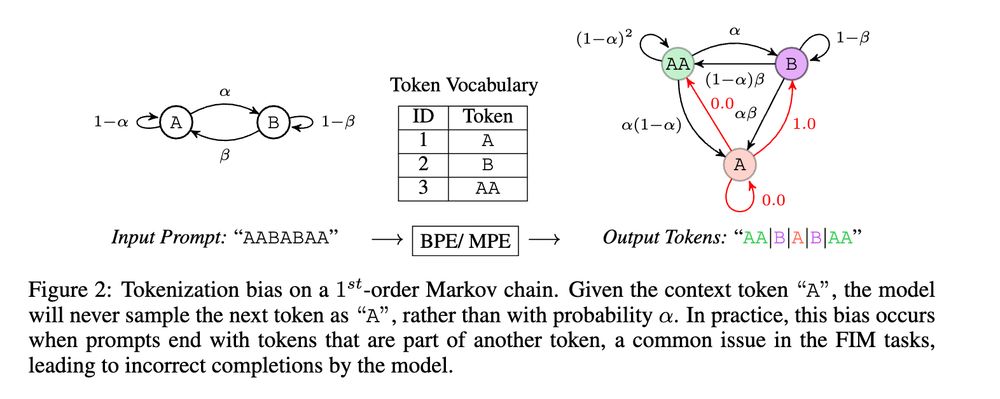

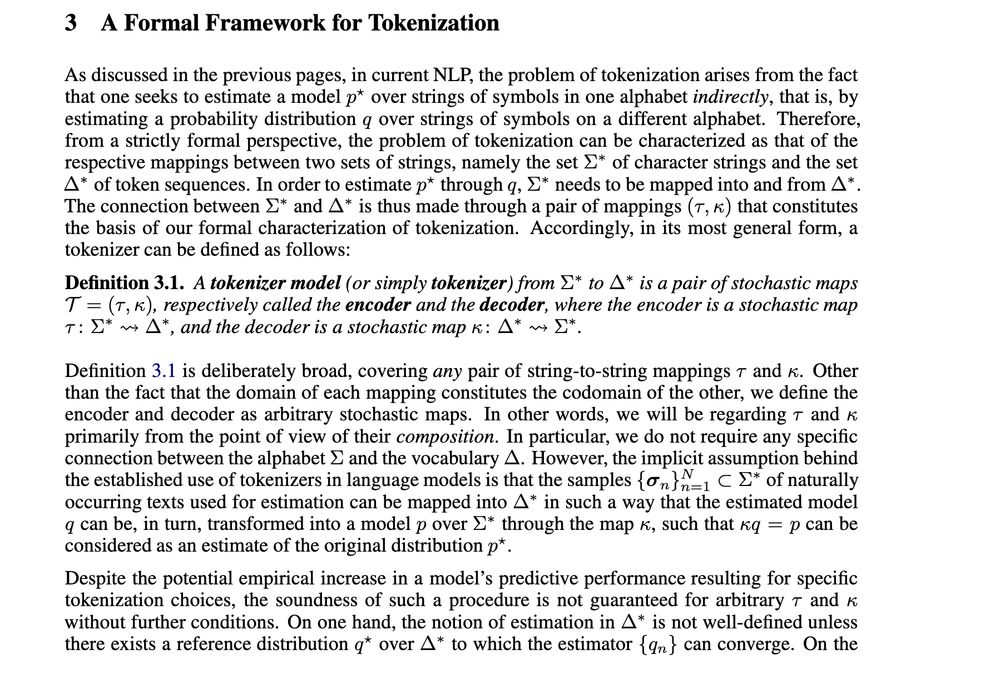

Statistical and Computational Concerns", Gastaldi et al. take first steps toward defining what a tokenizer should be and which properties it ought to have.

Two papers have been accepted at #NeurIPS2024! 🙌🏼 These papers are the first outcomes of my growing focus on LLMs. 🍾 Cheers to Nikita Dhawan and Jingtong Su + all involved collaborators: @cmaddis.bsky.social, Leo Cotta, Rahul Krishnan, Julia Kempe
