We introduce CODA ( #ICCV2025 Highlight! ), a method for *active model selection.* CODA selects the best model for your data with any labeling budget – often as few as 25 labeled examples. 1/

@iccv.bsky.social

But how is this skill learned, and can we model its progression?

We present CleverBirds, accepted #NeurIPS2025, a large-scale benchmark for visual knowledge tracing.

📄 arxiv.org/abs/2511.08512

1/5

Do you like BIRDS?!?! The #CVBirdWalk is happening!!!

Oct. 21, 7am, Ala Moana Park. Kick off your conference right!!

#ICCV2025 #AIforConservation

Check out the full program here: vap.aau.dk/marinevision/

My group works on topics in vision, machine learning, and AI for climate.

For more information and details on how to get in touch, please check out my website:

homepages.inf.ed.ac.uk/omacaod

Check it out below, and learn more at our @iccv.bsky.social poster (highlight!) on 10/21!!

We introduce CODA ( #ICCV2025 Highlight! ), a method for *active model selection.* CODA selects the best model for your data with any labeling budget – often as few as 25 labeled examples. 1/

@iccv.bsky.social

We have full-paper and nectar tracks.

Accepted papers are included in the official ICCV workshop proceedings.

Submission deadline: June 30

More at: vap.aau.dk/marinevision/

@iccv.bsky.social

@jbhaurum.bsky.social

@justin-kay.bsky.social

When: Jan 12-30, 2026

Where: SCBI @smconservation.bsky.social

@aicentre.dk

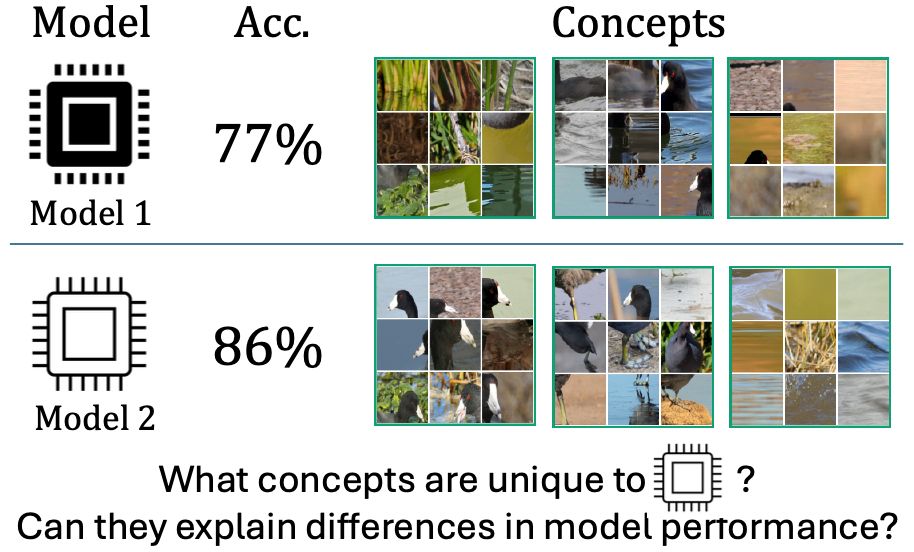

We all know the ViT-Large performs better than the ResNet-50, but what visual concepts drive this difference? Our new ICLR 2025 paper addresses this question! nkondapa.github.io/rsvc-page/

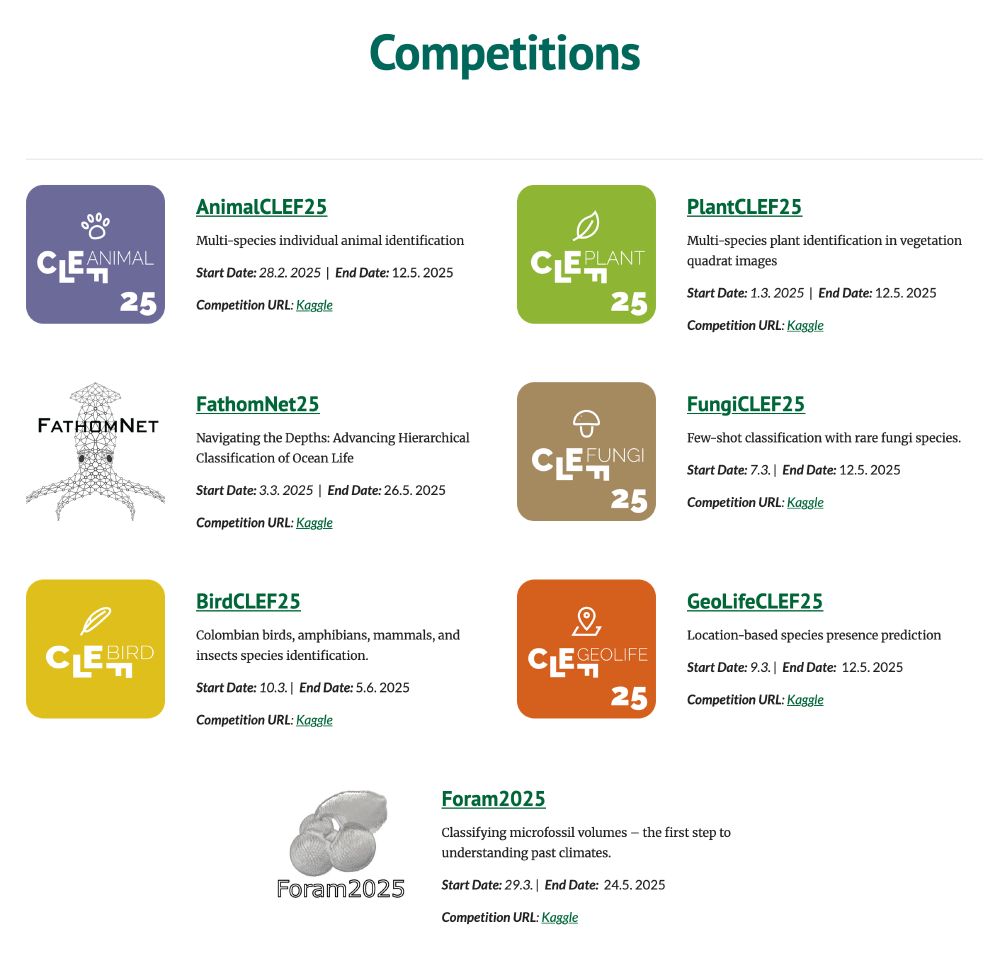

👉 www.kaggle.com/competitions...

@cvprconference.bsky.social @kaggle.com

#FGVC #CVPR #CVPR2025 #LifeCLEF

[1/4]

Let's go: sites.google.com/view/fgvc12/...

@cvprconference.bsky.social

CALL FOR PAPERS: sites.google.com/view/fgvc12/...

We discuss domains where expert knowledge is typically required and investigate artificial systems that can efficiently distinguish a large number of very similar visual concepts.

#CVPR #CVPR2025 #AI