But how is this skill learned, and can we model its progression?

We present CleverBirds, accepted #NeurIPS2025, a large-scale benchmark for visual knowledge tracing.

📄 arxiv.org/abs/2511.08512

1/5

The 13th Workshop on Fine-Grained Visual Categorization has been accepted to CVPR 2026, in Denver, Colorado!

CALL FOR PAPERS: sites.google.com/view/fgvc13/

From Ecology to Medical Imaging, join us as we tackle the long tail and the limits of visual discrimination! #CVPR2026 #AI

We are looking for candidates with a background in AI/CS, Math, Stats, or Physics who are passionate about solving challenging problems in these domains.

Application deadline is in two weeks.

Please help us share this post among students you know with an interest in Machine Learning and Biodiversity! 🤖🪲🌱

RQ1: Can we achieve scalable oversight across modalities via debate?

Yes! We show that debate between VLMs leads to higher-quality answers on reasoning tasks.

We introduce CODA ( #ICCV2025 Highlight! ), a method for *active model selection.* CODA selects the best model for your data with any labeling budget – often as few as 25 labeled examples. 1/

@iccv.bsky.social

My group works on topics in vision, machine learning, and AI for climate.

For more information and details on how to get in touch, please check out my website:

homepages.inf.ed.ac.uk/omacaod

At #EurIPS we are looking forward to welcoming presentations of all accepted NeurIPS papers, including a new “Salon des Refusés” track for papers which were rejected due to space constraints!

The scope of the workshop is quite broad, e.g. fine-grained learning, multi-modal, human in the loop, etc.

More info here:

sites.google.com/view/fgvc12/...

@cvprconference.bsky.social

CALL FOR PAPERS: sites.google.com/view/fgvc12/...

We discuss domains where expert knowledge is typically required and investigate artificial systems that can efficiently distinguish a large number of very similar visual concepts.

#CVPR #CVPR2025 #AI

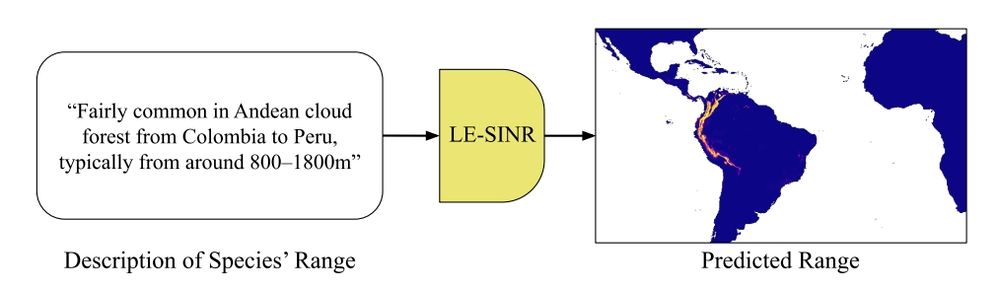

✨Introducing Le-SINR: A text to range map model that can enable scientists to produce more accurate range maps with fewer observations.

Thread 🧵

Introducing INQUIRE: A benchmark testing if AI vision-language models can help scientists find biodiversity patterns, from disease symptoms to rare behaviors, hidden in vast image collections.

Thread👇🧵
