Interested in NLP, interpretability, syntax, language acquisition and typology.

Come check it out if you're interested in multilingual linguistic evaluation of LLMs (there will be parse trees on the slides! There's still use for syntactic structure!)

arxiv.org/abs/2504.02768

We present results for various simple baseline models, but hope this can serve as a starting point for a multilingual BabyLM challenge in future years!

We release this pipeline and welcome new contributions!

Website: babylm.github.io/babybabellm/

Paper: arxiv.org/pdf/2510.10159

LLMs learn from vastly more data than humans ever experience. BabyLM challenges this paradigm by focusing on developmentally plausible data.

We extend this effort to 45 new languages!

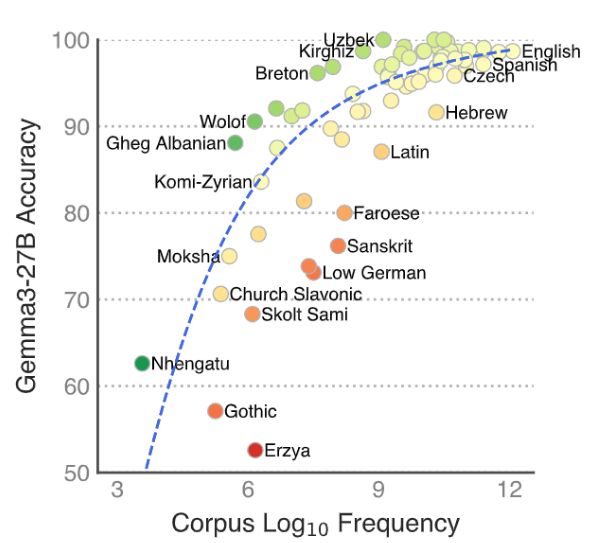

Overall, Llama3 70B and Gemma 27B perform best, but the monolingual 500M Goldfish models significantly outperform them in 14 languages!

Base models consistently outperform their instruction-tuned counterparts.

We search for subject-verb or -participle pairs with our target features Number, Person, and Gender in UD, then insert the word with the opposite feature value to form a minimal pair.
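
A minimal Python sketch of this pair-construction step, limited to flipping Number on the verb that heads an `nsubj` dependent. Assumptions: sentences arrive as CoNLL-U-style token dicts, and `LEXICON` is a hypothetical toy inflection table standing in for however the real pipeline finds the form with the opposite value. Person and Gender would be handled analogously.

```python
from typing import Optional

# Hypothetical toy inflection lexicon: (lemma, Number value) -> surface form.
# This is an illustrative assumption, not the benchmark's actual resource.
LEXICON = {
    ("walk", "Sing"): "walks",
    ("walk", "Plur"): "walk",
    ("be", "Sing"): "is",
    ("be", "Plur"): "are",
}

OPPOSITE_NUMBER = {"Sing": "Plur", "Plur": "Sing"}


def parse_feats(feats: str) -> dict:
    """Parse a CoNLL-U FEATS string like 'Number=Sing|Person=3' into a dict."""
    if feats == "_":
        return {}
    return dict(pair.split("=", 1) for pair in feats.split("|"))


def make_minimal_pair(tokens: list) -> Optional[tuple]:
    """Return (grammatical, ungrammatical) by flipping the head verb's Number.

    Finds a token with deprel 'nsubj' whose head is a VERB or AUX carrying a
    Number feature, then swaps in that verb's form with the opposite value.
    Returns None if no such subject-verb pair (or no opposite form) exists.
    """
    by_id = {tok["id"]: tok for tok in tokens}
    for tok in tokens:
        if tok["deprel"] != "nsubj":
            continue
        verb = by_id.get(tok["head"])
        if verb is None or verb["upos"] not in ("VERB", "AUX"):
            continue
        number = parse_feats(verb["feats"]).get("Number")
        if number not in OPPOSITE_NUMBER:
            continue
        new_form = LEXICON.get((verb["lemma"], OPPOSITE_NUMBER[number]))
        if new_form is None:
            continue  # no opposite form known for this lemma; skip
        good = " ".join(t["form"] for t in tokens)
        bad = " ".join(new_form if t is verb else t["form"] for t in tokens)
        return good, bad
    return None


sentence = [
    {"id": 1, "form": "The", "lemma": "the", "upos": "DET",
     "feats": "Definite=Def", "head": 2, "deprel": "det"},
    {"id": 2, "form": "dog", "lemma": "dog", "upos": "NOUN",
     "feats": "Number=Sing", "head": 3, "deprel": "nsubj"},
    {"id": 3, "form": "walks", "lemma": "walk", "upos": "VERB",
     "feats": "Number=Sing|Person=3|Tense=Pres", "head": 0, "deprel": "root"},
]
print(make_minimal_pair(sentence))  # ('The dog walks', 'The dog walk')
```

Starting from the dependency tree rather than from surface strings means only genuine subject-verb relations are perturbed, so the rest of the sentence stays identical across the pair.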

Introducing 🌍MultiBLiMP 1.0: A Massively Multilingual Benchmark of Minimal Pairs for Subject-Verb Agreement, covering 101 languages!

We present over 125,000 minimal pairs and evaluate 17 LLMs, finding that support is still lacking for many languages.

🧵⬇️

Huge thank you to my supervisors @wzuidema.bsky.social and Raquel Fernandez for their guidance, my paranymphs Emile & Jop for their support, my defense committee for their questions, and my brother for photobombing this official portrait!

Using formal grammars, we generate languages whose latent structure is fully transparent.

We use this to test for the presence of hierarchical structure in LM representations.
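
A rough Python sketch of the general idea: sample sentences from a toy probabilistic context-free grammar while keeping each derivation tree, so the gold hierarchical structure behind every string is known exactly. The grammar `GRAMMAR` here is hypothetical and far smaller than anything used in the actual work.

```python
import random

# Hypothetical toy PCFG: nonterminal -> list of (probability, right-hand side).
# Any symbol that is not a key of GRAMMAR is treated as a terminal word.
GRAMMAR = {
    "S": [(1.0, ["NP", "VP"])],
    "NP": [(0.7, ["D", "N"]), (0.3, ["D", "N", "PP"])],
    "PP": [(1.0, ["P", "NP"])],
    "VP": [(1.0, ["V"])],
    "D": [(1.0, ["the"])],
    "N": [(0.5, ["dog"]), (0.5, ["cat"])],
    "P": [(1.0, ["near"])],
    "V": [(0.5, ["runs"]), (0.5, ["sleeps"])],
}


def sample(symbol, rng):
    """Expand `symbol` top-down; return a nested (label, children) tree."""
    if symbol not in GRAMMAR:
        return symbol  # terminal: a leaf
    probs, rhss = zip(*GRAMMAR[symbol])
    rhs = rng.choices(rhss, weights=probs, k=1)[0]
    return (symbol, [sample(child, rng) for child in rhs])


def yield_of(tree):
    """Read off the terminal string (the sentence) of a tree."""
    if isinstance(tree, str):
        return [tree]
    _, children = tree
    return [word for child in children for word in yield_of(child)]


rng = random.Random(0)
tree = sample("S", rng)
print(" ".join(yield_of(tree)))  # the sentence a language model would see
print(tree)                      # the gold latent structure behind it
```

Because the tree is kept alongside each string, a probe's predictions about constituency or depth can be scored against exact gold labels instead of parser output.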

We present a general methodology for this, and zoom in on 2 phenomena: double adjective constructions and negative polarity items.

We show that LMs exhibit priming effects, driven by similar cues as those in humans.

aclanthology.org/2022.tacl-1....

aclanthology.org/2024.finding...

I'll defend it on December 10, streamed here: jumelet.ai/phd

arxiv.org/abs/2411.16433

🧵
