No more post-training alignment!

We integrate human alignment right from the start, during pretraining!

Results:

✨ 19x faster convergence ⚡

✨ 370x less compute 💻

🔗 Explore the project: nicolas-dufour.github.io/miro/

- 19x faster convergence ⚡

- 370x less FLOPS than FLUX-dev 📉

It outperforms CLIP-like models (SigLip2, finetuned StreetCLIP)… and that’s shocking 🤯

Why? CLIP models have an innate advantage — they literally learn place names + images. DinoV3 doesn’t.

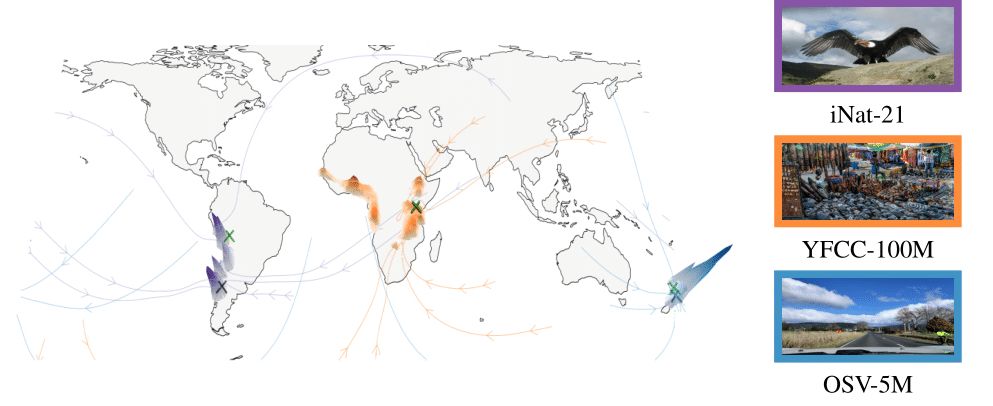

I will be presenting our paper "Around the World in 80 Timesteps: A Generative Approach to Global Visual Geolocation".

We tackle geolocalization as a generative task, which allows for SOTA performance and more interpretable predictions.

We @polytechniqueparis.bsky.social and @inria-grenoble.bsky.social introduce Di[M]O — a novel approach to distill MDMs into a one-step generator without sacrificing quality.

How far can we go with ImageNet for Text-to-Image generation? w. @arrijitghosh.bsky.social @lucasdegeorge.bsky.social @nicolasdufour.bsky.social @vickykalogeiton.bsky.social

TL;DR: Train a text-to-image model using 1000x less data in 200 GPU hrs!

📜 https://arxiv.org/abs/2502.21318

🧵👇

TL;DR: You can improve your diffusion samples by increasing guidance during the sampling process. A simple linear scheduler suffices and is more robust than more elaborate methods.
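
For readers who want to see what "increasing guidance during sampling" could look like, here is a minimal sketch. It assumes classifier-free guidance in one common convention (unconditional prediction plus a weight times the conditional-minus-unconditional difference) and a weight that rises linearly over the sampling steps. The names `linear_guidance_schedule` and `guided_prediction`, and the start and end weights, are illustrative assumptions and are not taken from the paper.

```python
def linear_guidance_schedule(num_steps, w_start=1.0, w_end=9.0):
    """Return one guidance weight per sampling step.

    The weight rises linearly from w_start (first step) to w_end (last step).
    The default values are hypothetical, chosen only for illustration.
    """
    if num_steps < 1:
        raise ValueError("num_steps must be at least 1")
    if num_steps == 1:
        return [float(w_end)]
    span = w_end - w_start
    return [w_start + span * i / (num_steps - 1) for i in range(num_steps)]


def guided_prediction(uncond, cond, w):
    """Combine predictions with classifier-free guidance (assumed convention).

    Computes uncond + w * (cond - uncond) element-wise, so w = 1 returns
    the conditional prediction unchanged.
    """
    return [u + w * (c - u) for u, c in zip(uncond, cond)]


# Usage: low guidance early in sampling, stronger guidance toward the end.
weights = linear_guidance_schedule(num_steps=5, w_start=1.0, w_end=9.0)
first_step = guided_prediction([0.0, 1.0], [1.0, 3.0], weights[0])
last_step = guided_prediction([0.0, 1.0], [1.0, 3.0], weights[-1])
```

In a real sampler, the weight for the current step would be plugged into the model's guided prediction at each denoising iteration; the toy lists above only stand in for the model outputs.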

🗺️ Paper, code, and demo: nicolas-dufour.github.io/plonk
