#LLM #AI #ProgramSynthesis #ICML2025

📗 Blog Post: julienp.netlify.app/posts/soar/

🤗 Models (7/14/32/72/123B) & Data: huggingface.co/collections/...

💻 Code: github.com/flowersteam/...

📄 Paper: icml.cc/virtual/2025...

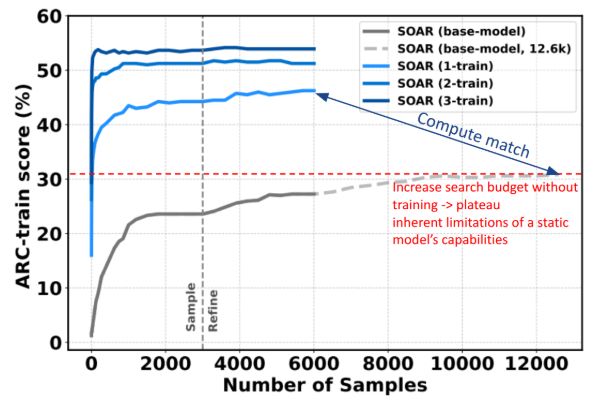

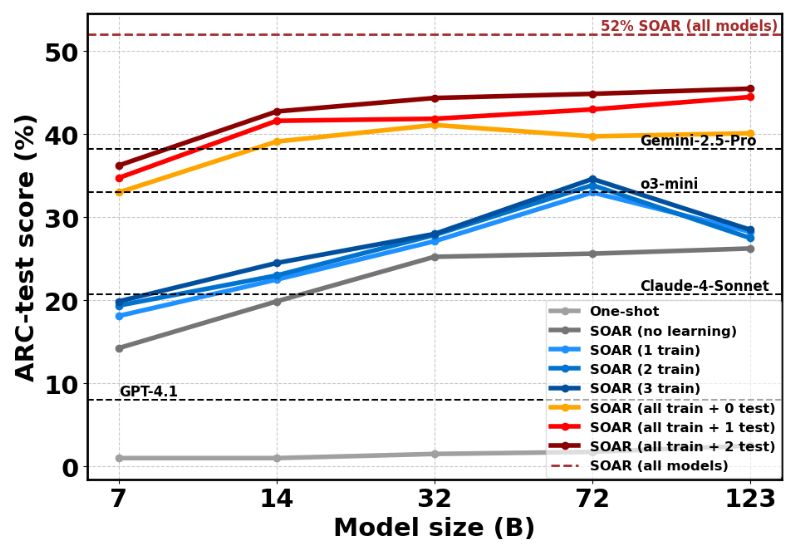

- Qwen-7B model: 6% → 36% accuracy

- Qwen-32B model: 13% → 45% accuracy

- Mistral-Large-2: 20% → 46% accuracy

- Combined ensemble: 52% on ARC-AGI test set

- Outperforms much larger models like o3-mini and Claude-4-Sonnet

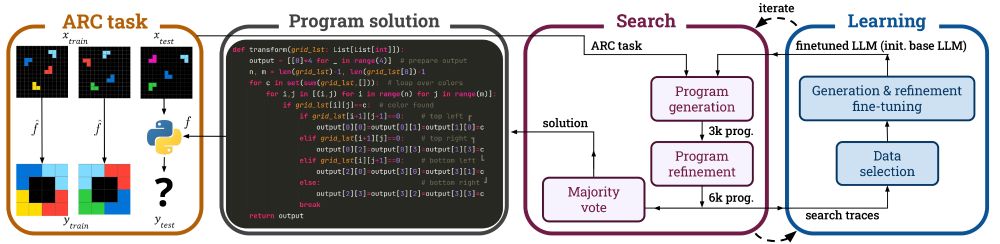

- **Sampling**: Generate better initial solutions

- **Refinement**: Enhance initial solutions

We also find that learning both together works better than specializing!
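
To make "learning both together" concrete, here is a minimal sketch of how one fine-tuning set could mix the two skills. The `Example` class, the prompt wording, and the helper names are all hypothetical illustrations, not the format used in the paper or the released code.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Example:
    prompt: str
    completion: str
    kind: str  # "sampling" or "refinement"


def sampling_example(task: str, program: str) -> Example:
    # Sampling skill: go from the task alone to a candidate program.
    prompt = f"Task:\n{task}\nWrite a program that solves it."
    return Example(prompt=prompt, completion=program, kind="sampling")


def refinement_example(task: str, draft: str, improved: str) -> Example:
    # Refinement skill: go from the task plus an earlier draft to a better program.
    prompt = f"Task:\n{task}\nDraft program:\n{draft}\nImprove the draft."
    return Example(prompt=prompt, completion=improved, kind="refinement")


def joint_dataset(sampling: List[Example], refinement: List[Example]) -> List[Example]:
    # Assumption: "learning both together" means one model is trained on the union
    # of both example types instead of one specialist model per skill.
    return list(sampling) + list(refinement)
```

A single model trained on `joint_dataset(...)` sees both prompt types, which is one plausible reading of training the two skills together rather than separately.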

- Evolutionary search: LLM samples and refines candidate programs.

- Hindsight learning: The model learns from all its search attempts, successes and failures, to fine-tune its skills for the next round.
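
The two steps above can be sketched on a toy problem. Everything here is an assumption made for illustration: programs are just `(a, b)` pairs meaning `x -> a * x + b`, random proposals stand in for the LLM, and failed attempts are reused through hindsight relabeling (pairing a program with the outputs it actually produced), which is one common way to learn from failures and may differ from the paper's exact recipe.

```python
import random
from typing import List, Tuple

Program = Tuple[int, int]      # toy program: x -> a * x + b
Task = List[Tuple[int, int]]   # (input, expected output) pairs


def run(program: Program, x: int) -> int:
    a, b = program
    return a * x + b


def score(program: Program, task: Task) -> float:
    # Fraction of examples the program reproduces exactly.
    return sum(run(program, x) == y for x, y in task) / len(task)


def sample(rng: random.Random) -> Program:
    # Stand-in for the LLM's sampling step.
    return (rng.randint(-3, 3), rng.randint(-3, 3))


def refine(program: Program, rng: random.Random) -> Program:
    # Stand-in for the LLM's refinement step: a small edit to an earlier attempt.
    a, b = program
    return (a + rng.choice([-1, 0, 1]), b + rng.choice([-1, 0, 1]))


def search(task: Task, rng: random.Random, n_samples: int = 8, n_rounds: int = 5) -> List[Program]:
    # Evolutionary search: sample candidates, then repeatedly refine the best ones.
    attempts = [sample(rng) for _ in range(n_samples)]
    population = list(attempts)
    for _ in range(n_rounds):
        population.sort(key=lambda p: score(p, task), reverse=True)
        parents = population[: max(1, n_samples // 2)]
        children = [refine(p, rng) for p in parents]
        attempts.extend(children)
        population = parents + children
    return attempts  # every attempt is kept, successes and failures alike


def hindsight_data(task: Task, attempts: List[Program]) -> List[Tuple[Task, Program]]:
    # Successes are kept as-is; a failure is paired with the task it does solve,
    # namely the original inputs with the outputs it actually produced.
    data = []
    for program in attempts:
        if score(program, task) == 1.0:
            data.append((task, program))
        else:
            relabeled = [(x, run(program, x)) for x, _ in task]
            data.append((relabeled, program))
    return data
```

In a real loop, the pairs returned by `hindsight_data` would be used to fine-tune the model before the next search round; here they are only collected.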
