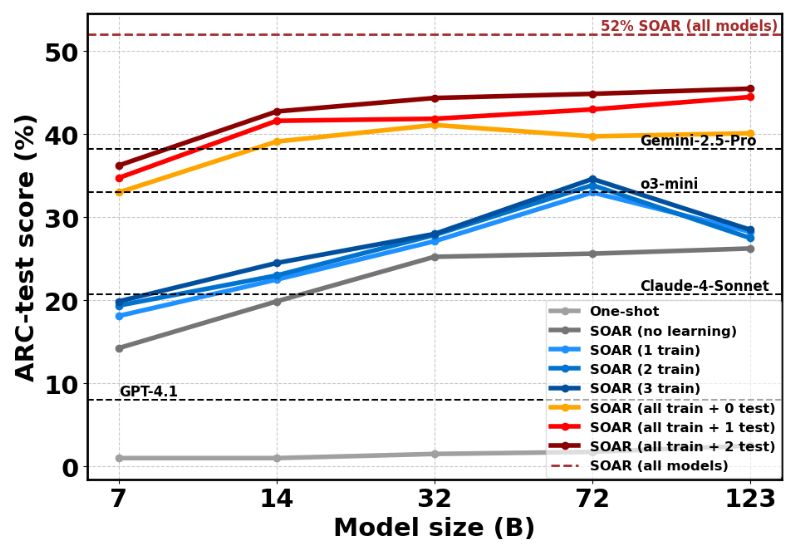

- Qwen-7B model: 6% → 36% accuracy

- Qwen-32B model: 13% → 45% accuracy

- Mistral-Large-2: 20% → 46% accuracy

- Combined ensemble: 52% on ARC-AGI test set

- Outperforms much larger models like o3-mini and Claude-4-Sonnet

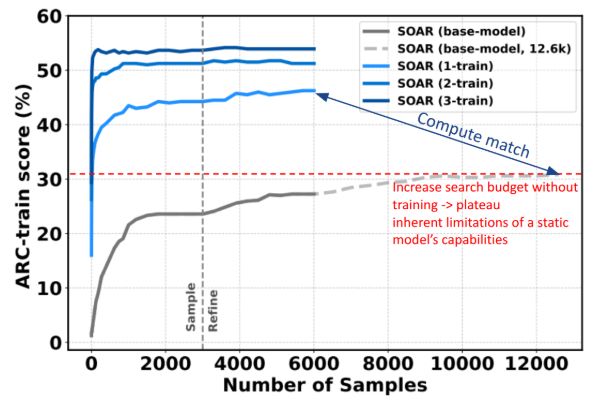

It brings LLMs from just a few percent on ARC-AGI-1 up to 52%.

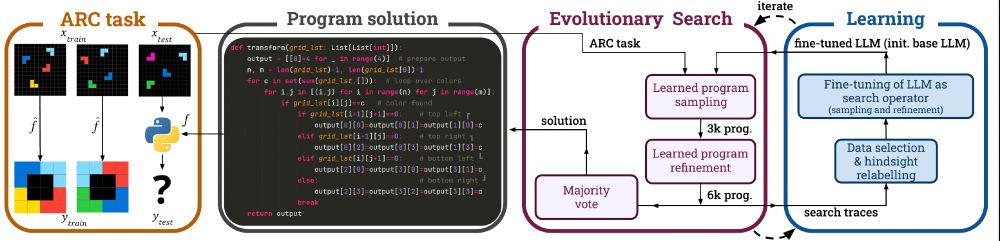

We’re releasing the fine-tuned LLMs, a dataset of 5M generated programs, and the code.

🧵
