https://betswish.github.io

- MT error prediction techniques & their reception by professional translators (@gsarti.com)

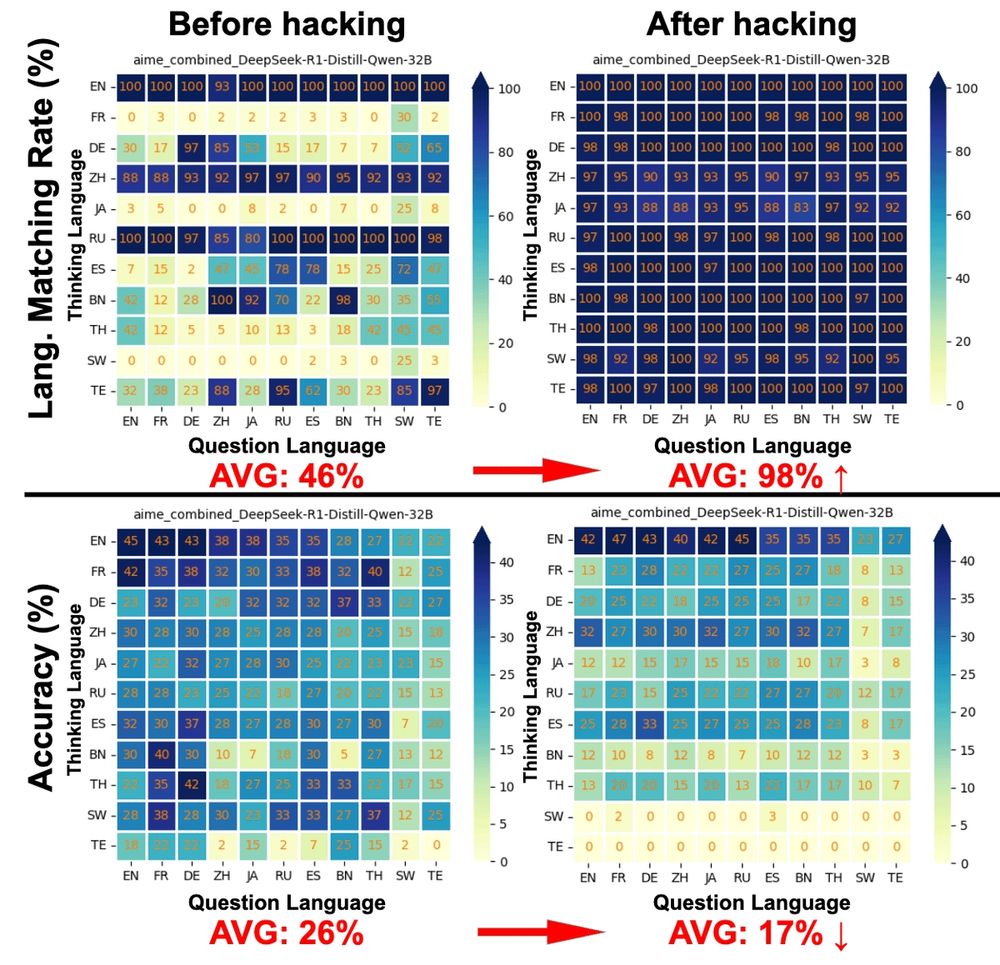

- thinking language in Large Reasoning Models (@jiruiqi.bsky.social)

- effect of stereotypes on LLMs’ implicit personalization (@veraneplenbroek.bsky.social)

....

@shan23chen.bsky.social @Zidi_Xiong @Raquel Fernández @daniellebitterman.bsky.social @arianna-bis.bsky.social

GitHub repo: github.com/Betswish/mCo...

Benchmark: huggingface.co/collections/...

Trained LRMs: huggingface.co/collections/...

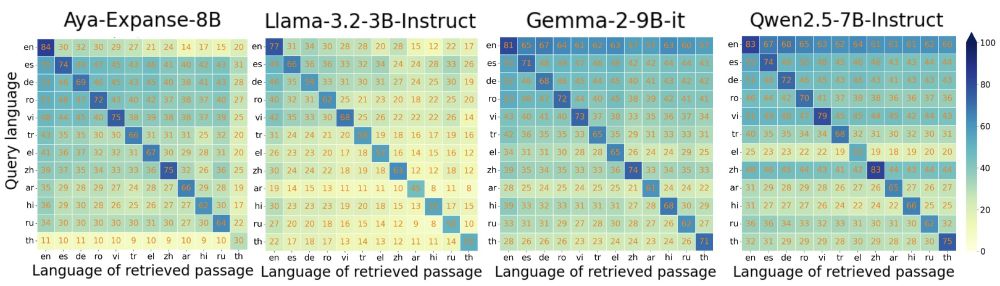

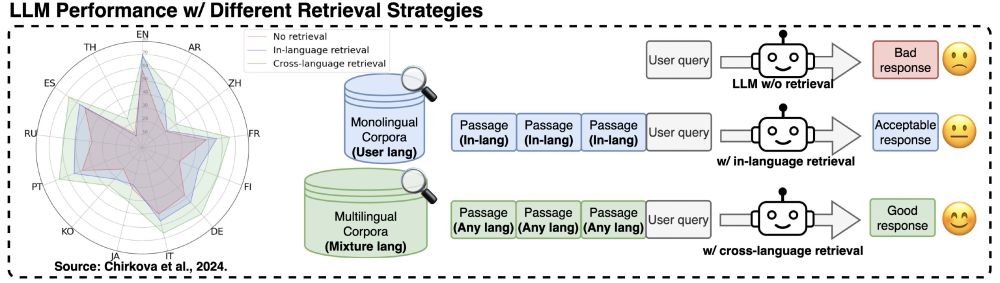

Tianyu Liu, Paul He, Arianna Bisazza, @mrinmaya.bsky.social, Ryan Cotterell.
