https://betswish.github.io

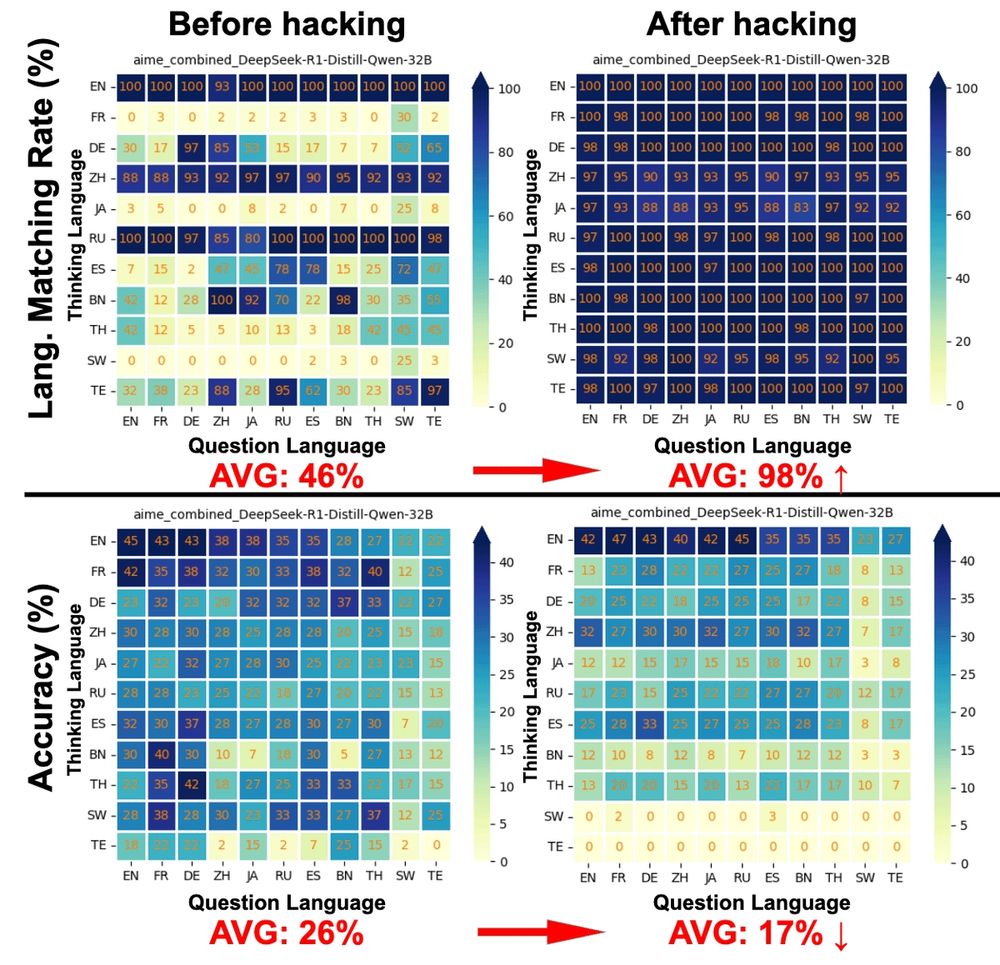

Large reasoning models (LRMs) are strong in English — but how well do they reason in your language?

Our latest work uncovers their limitations and a clear trade-off:

Controlling Thinking Trace Language Comes at the Cost of Accuracy

📄Link: arxiv.org/abs/2505.22888

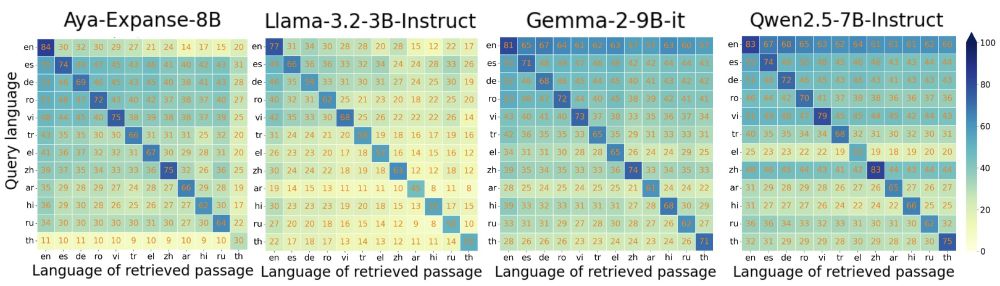

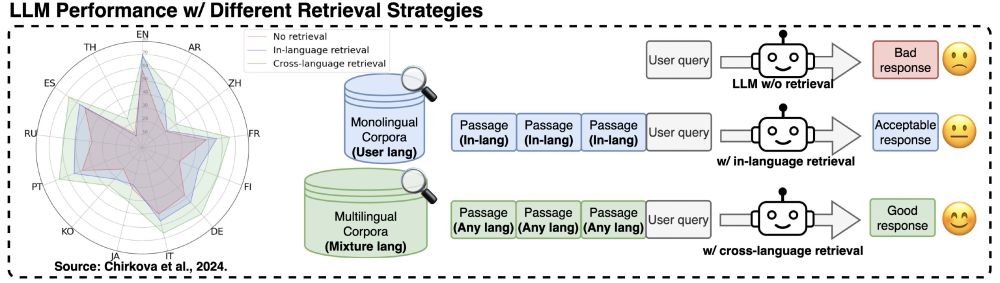

[1/] Retrieving passages from many languages can boost retrieval augmented generation (RAG) performance, but how good are LLMs at dealing with multilingual contexts in the prompt?

📄 Check it out: arxiv.org/abs/2504.00597

(w/ @arianna-bis.bsky.social @Raquel_Fernández)

#NLProc

📄http://arxiv.org/abs/2411.07773

#NLProc
