arxiv: arxiv.org/abs/2507.16746

data: huggingface.co/datasets/mul...

arxiv: arxiv.org/abs/2507.14137

code: github.com/valeoai/Franca

arxiv: arxiv.org/abs/2507.098...

project: vru-accident.github.io

arxiv: arxiv.org/abs/2507.090...

GRPO is pretty standard. Interesting that they trained only on math rather than a mix of math, grounding, and other possible RLVR tasks. They use Qwen-2.5-Instruct 32B to judge the accuracy of the answer in addition to rule-based verification.
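
A minimal sketch of the reward and advantage side of this setup, for intuition only. It assumes a binary reward where a rule-based check (here a hypothetical normalized exact match) is tried first and the LLM judge is consulted only when that fails; how the paper actually combines the two signals is not stated above. The `judge` argument is a stub standing in for the Qwen-2.5-Instruct 32B judge. The advantage is the standard GRPO group normalization (reward minus group mean, divided by group std), using population std as one common choice.

```python
import statistics
from typing import Callable, List


def rule_based_correct(pred: str, gold: str) -> bool:
    # Hypothetical rule-based verifier: case-insensitive exact match after trimming.
    return pred.strip().lower() == gold.strip().lower()


def reward(pred: str, gold: str, judge: Callable[[str, str], bool]) -> float:
    # Assumption: accept if the rule-based check passes, otherwise defer to the
    # LLM judge (a stub here; a real setup would query the judge model).
    if rule_based_correct(pred, gold):
        return 1.0
    return 1.0 if judge(pred, gold) else 0.0


def group_advantages(rewards: List[float], eps: float = 1e-6) -> List[float]:
    # GRPO: normalize each sampled response's reward against its own group,
    # so no learned value model is needed.
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


if __name__ == "__main__":
    gold = "42"
    group = ["42", " 42 ", "41", "forty-two"]  # several sampled answers to one prompt
    stub_judge = lambda pred, g: pred == "forty-two" and g == "42"
    rs = [reward(p, gold, stub_judge) for p in group]
    print(rs)
    print(group_advantages(rs))
```

Responses the judge rescues get the same positive advantage as rule-verified ones, which is the point of adding a judge for answers that are correct but not string-identical.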

arxiv: arxiv.org/abs/2507.05920

code: github.com/EvolvingLMMs...

Results: +18 points on V* over Qwen2.5-VL, and +5 points over GRPO alone.

Data: trained on a subset of MME-RealWorld; evaluated on V*.

They use an SFT warm-start, since the VLMs struggled to output good grounding coordinates. They constructed two-turn samples for this.
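
A rough sketch of what one such two-turn SFT sample could look like, assuming turn one asks the model for the bounding box of the relevant region and turn two supplies the cropped region and asks for the answer. The message schema, the `<image:...>` / `<crop:...>` placeholder tokens, and the prompt wording are all hypothetical; only the "two turns, grounding coordinates first" idea comes from the note above. Ground-truth boxes are clamped to the image so the supervised coordinates are always valid.

```python
from typing import Dict, List, Tuple

Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2) in pixels


def clamp_box(box: Box, width: int, height: int) -> Box:
    # Clip coordinates to the image and order them so x1 <= x2 and y1 <= y2.
    x1, y1, x2, y2 = box
    x1, x2 = sorted((max(0, min(x1, width)), max(0, min(x2, width))))
    y1, y2 = sorted((max(0, min(y1, height)), max(0, min(y2, height))))
    return (x1, y1, x2, y2)


def build_two_turn_sample(
    question: str, image_path: str, gt_box: Box, answer: str, width: int, height: int
) -> List[Dict[str, str]]:
    # Hypothetical chat-format sample: turn 1 supervises grounding coordinates,
    # turn 2 supervises the final answer given the cropped region.
    box = clamp_box(gt_box, width, height)
    return [
        {"role": "user",
         "content": f"<image:{image_path}> {question} "
                    "First output the bounding box of the relevant region."},
        {"role": "assistant", "content": f"[{box[0]}, {box[1]}, {box[2]}, {box[3]}]"},
        {"role": "user", "content": f"<crop:{image_path}@{box}> Now answer the question."},
        {"role": "assistant", "content": answer},
    ]


if __name__ == "__main__":
    sample = build_two_turn_sample(
        "What color is the sign?", "img.jpg", (-10, 5, 50, 2000), "red", 100, 100
    )
    for msg in sample:
        print(msg["role"], "->", msg["content"])
```

Supervising the coordinate turn directly gives the policy a sane output format before RL, which matches the stated motivation for the warm-start.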

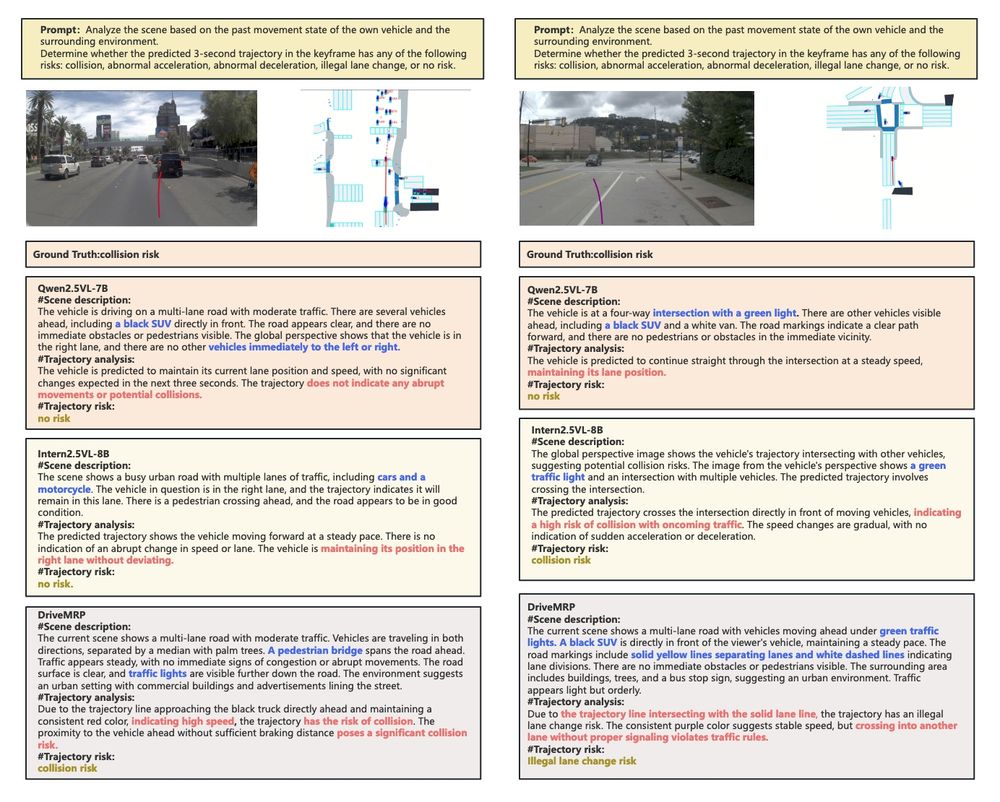

arxiv: arxiv.org/abs/2507.02948

code: github.com/hzy138/Drive...