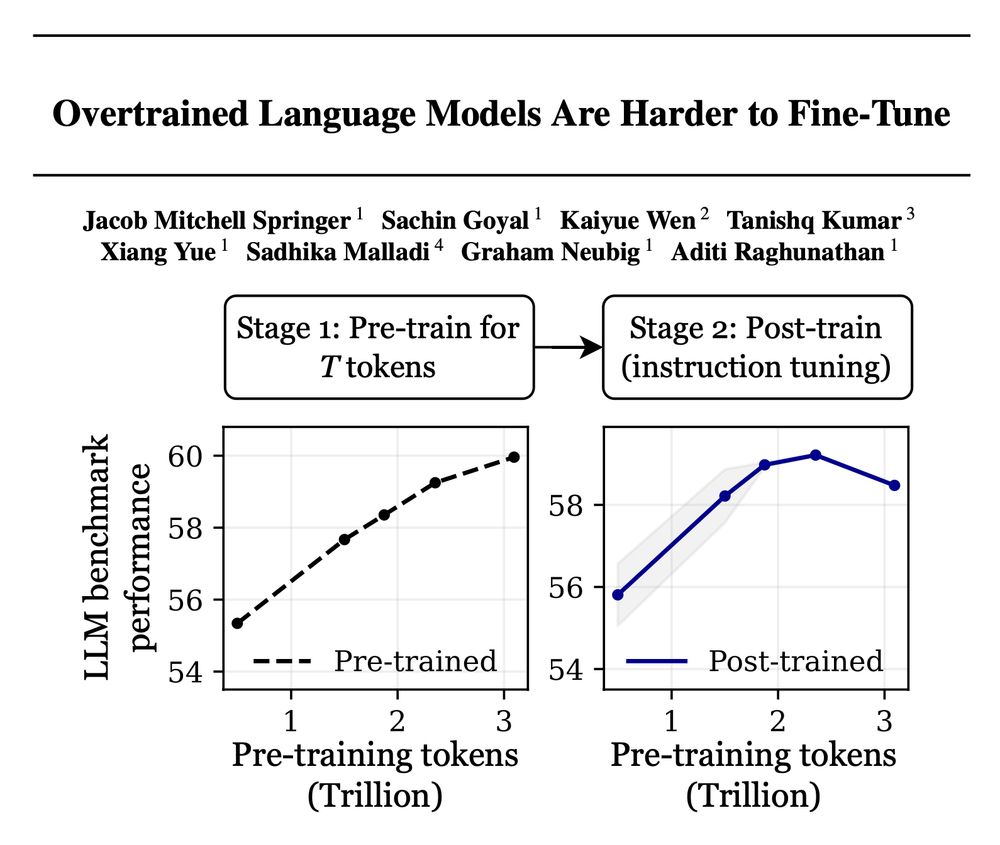

False! Scaling language models by adding more pre-training data can decrease your performance after post-training!

Introducing "catastrophic overtraining." 🥁🧵👇

arxiv.org/abs/2503.19206

1/10
