arXiv: arxiv.org/pdf/2505.12534

read more: chempile.lamalab.org

dataset: huggingface.co/collections/...

lamalab-org.github.io/chembench/

✨Multimodal Support – Handle text, data, and chemistry-specific inputs seamlessly

✨Redesigned API – Now standardized on LiteLLM messages for effortless integration

✨Custom System Prompts – Tailor benchmarks to your unique use case

#LLMs #MachineLearning #OpenScience

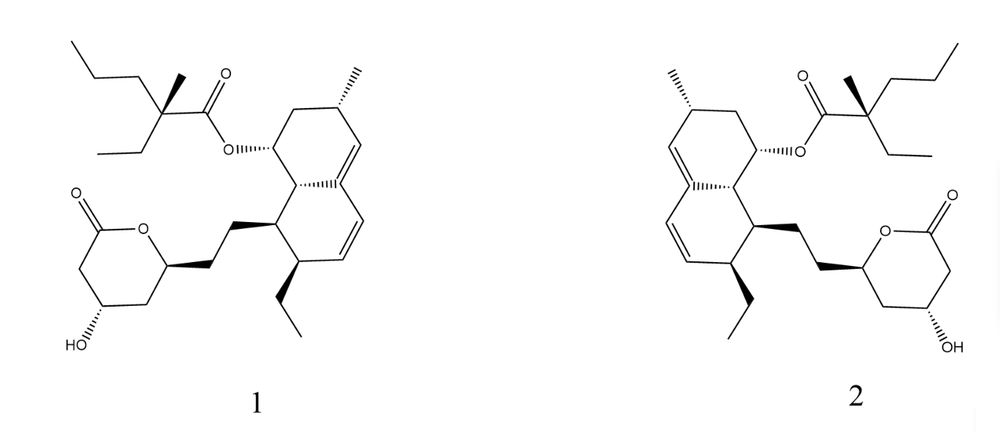

🌟VLLMs dominate: They outperform specialized models like DECIMER in benchmarks

📜Manuscript: arxiv.org/abs/2411.16955

👩‍💻GitHub: github.com/lamalab-org/...

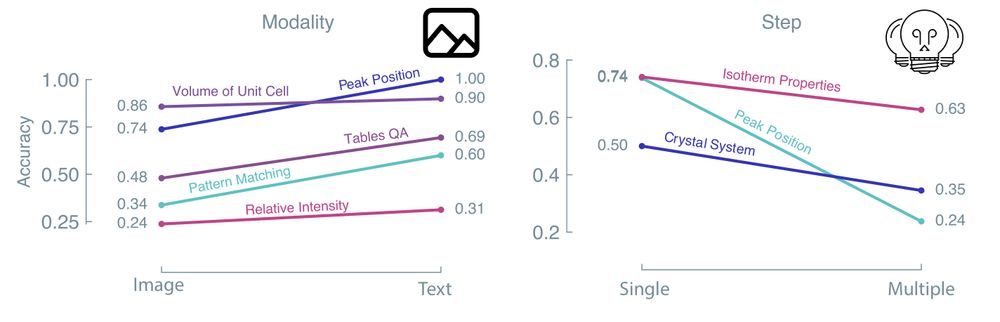

But this is not the case!

We compared different modalities, multi-step vs. single-step reasoning, guided prompting, etc.

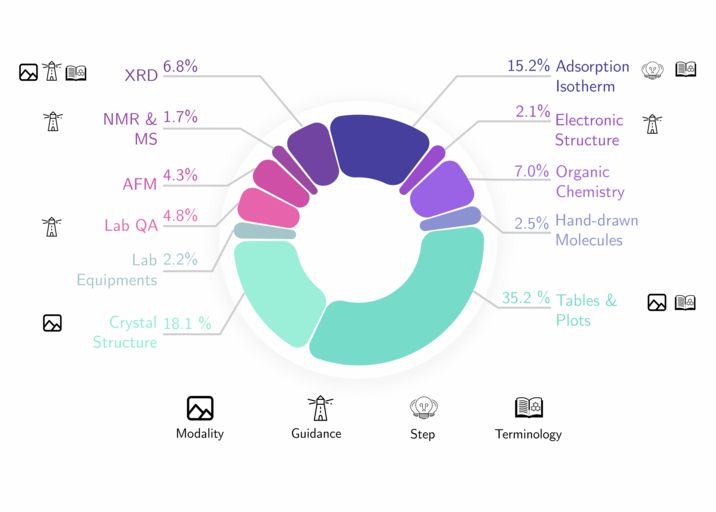

We focus on tasks we consider crucial for scientific development: practical lab scenarios, spectral analysis, US patents, and more.
