dataset: huggingface.co/collections/...

lamalab-org.github.io/chembench/

#LLMs #MachineLearning #OpenScience

🌟VLLMs dominate: They outperform specialized models like DECIMER in benchmarks

Key updates!

🌟Robust reproducibility: 5x experiment runs + error bars for statistical confidence

🌟Full dataset & leaderboard: Now live on HuggingFace with model comparisons huggingface.co/spaces/jablo...

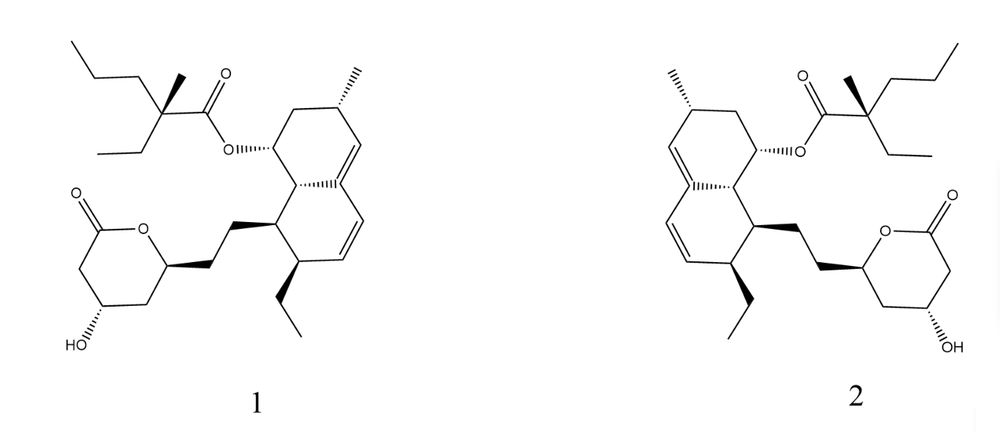

But this is not the case!

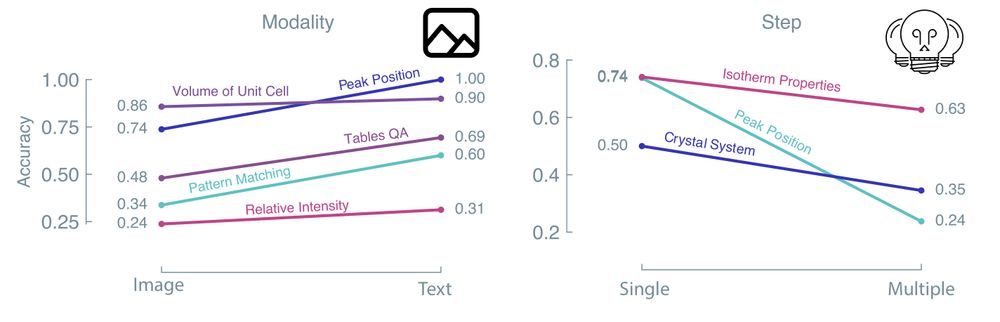

We compared different modalities, multi-step vs. single-step reasoning, guided prompting, and more.

We focus on the tasks we consider crucial for scientific development: practical lab scenarios, spectral analysis, US patents, and more.

🧑🔬🧪

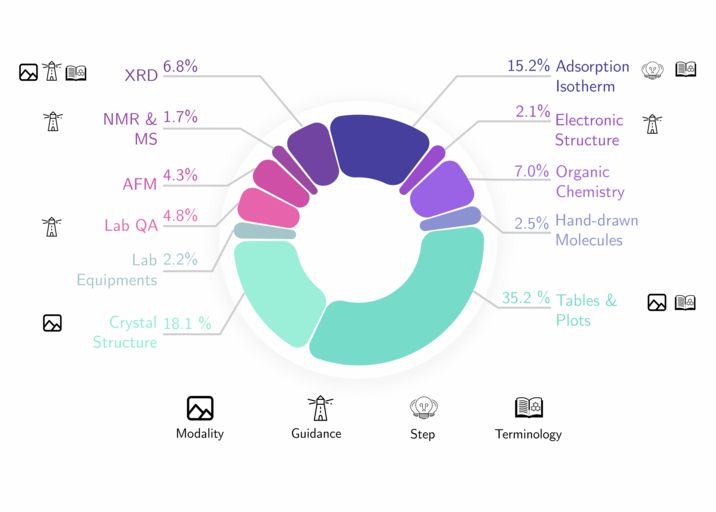

We compared leading VLLMs on the three pillars of chemical and materials science discovery: data extraction, lab experimentation, and data interpretation.

arxiv.org/abs/2411.16955
