Email me and/or DSAI_administration@sussex.ac.uk if you have any questions about the process. @sussexai.bsky.social @drtnowotny.bsky.social @wijdr.bsky.social anyone else on here yet?!

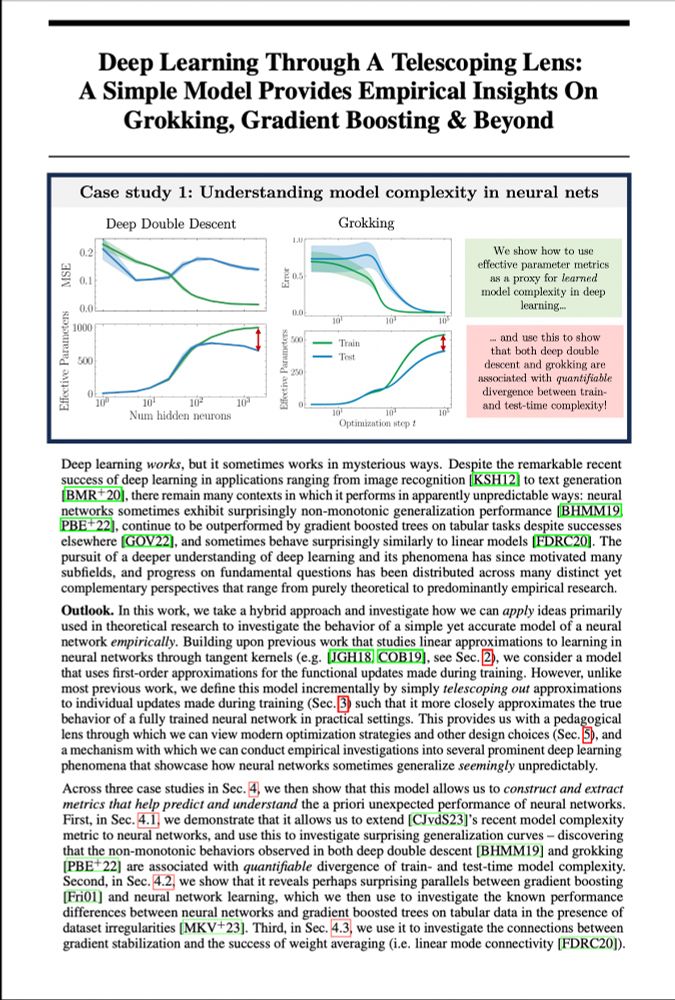

For NeurIPS (my final PhD paper!), @alanjeffares.bsky.social & I explored if & how smart linearisation can help us better understand & predict numerous odd deep learning phenomena, and learned a lot. 🧵1/n