https://itay1itzhak.github.io/

This week I’ll be in New York giving talks at NYU, Yale, and Cornell Tech.

If you’re around and want to chat about LLM behavior, safety, interpretability, or just say hi - DM me!

@adisimhi.bsky.social !

ManagerBench reveals a critical problem:

✅ LLMs can recognize harm

❌ But often choose it anyway to meet goals

🤖 Or overcorrect and become ineffective

We need better balance!

A must-read for safety folks!

🚀 New paper out: ManagerBench: Evaluating the Safety-Pragmatism Trade-off in Autonomous LLMs🚀🧵

Cognitive biases, hidden knowledge, CoT faithfulness, model editing, and LM4Science

See the thread for details and reach out if you'd like to discuss more!

Now hungry to discuss:

– LLM behavior

– Interpretability

– Biases & Hallucinations

– Why eval is so hard (but so fun)

Come say hi if that’s your vibe too!

🧠

Instruction-tuned LLMs show amplified cognitive biases — but are these new behaviors, or pretraining ghosts resurfacing?

Excited to share our new paper, accepted to COLM 2025 🎉!

See thread below 👇

#BiasInAI #LLMs #MachineLearning #NLProc

Our workshop deadline is coming up soon. Please consider submitting your evaluation paper!

You can find our call for papers at gem-benchmark.com/workshop

Curious how small prompt tweaks impact LLM accuracy but don’t want to run endless inferences? We got you. Meet DOVE - a dataset built to uncover these sensitivities.

Use DOVE for your analysis or contribute samples - we're growing and welcome you aboard!

We bring you 🕊️ DOVE, a massive (250M!) collection of LLM outputs

On different prompts, domains, tokens, models...

Join our community effort to expand it with YOUR model predictions & become a co-author!

We propose a method to automatically find position-aware circuits, improving faithfulness while keeping circuits compact. 🧵👇

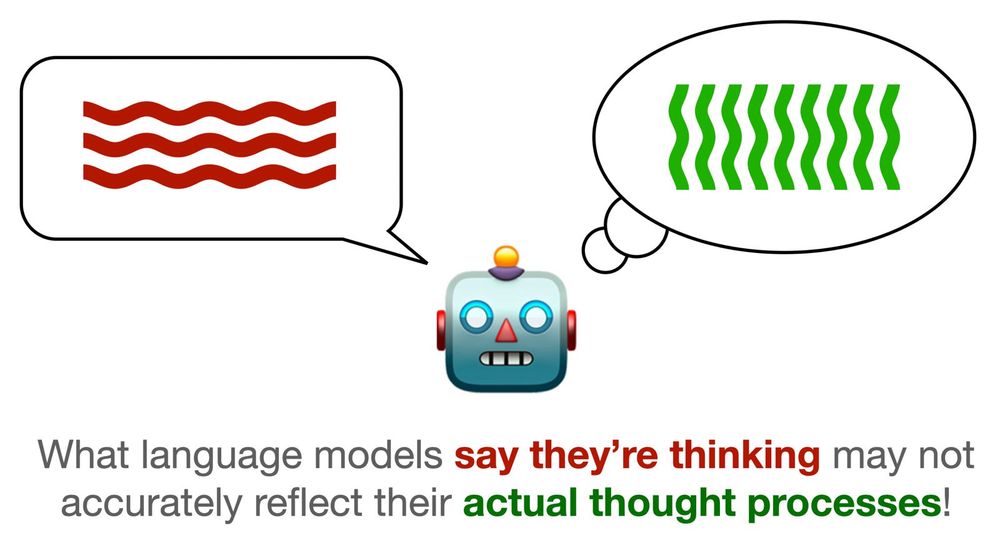

Ever wonder whether verbalized CoTs correspond to the internal reasoning process of the model?

We propose a novel parametric faithfulness approach, which erases information contained in CoT steps from the model parameters to assess CoT faithfulness.

arxiv.org/abs/2502.14829

Check out our new paper that challenges assumptions on AI trustworthiness! 🧵👇

LLMs can hallucinate - but did you know they can do so with high certainty even when they know the correct answer? 🤯

We find those hallucinations in our latest work with @itay-itzhak.bsky.social, @fbarez.bsky.social, @gabistanovsky.bsky.social and Yonatan Belinkov

Evaluation in the world of GenAI is more important than ever, so please consider submitting your amazing work.

CfP can be found at gem-benchmark.com/workshop
