https://belinkov.com/

🚀 New paper out: ManagerBench: Evaluating the Safety-Pragmatism Trade-off in Autonomous LLMs🚀🧵

Also happy to talk about PhD and Post-doc opportunities!

Preprint: www.biorxiv.org/content/10.1...

@mtutek.bsky.social , who couldn't travel:

bsky.app/profile/mtut...

Later that day we'll have a poster on predicting success of model editing by Yanay Soker, who also couldn't travel

will present on Wednesday a poster on hidden factual knowledge in LMs

bsky.app/profile/zori...

In our new paper, we clearly define this concept and design controlled experiments to test it.

1/🧵

presenting today, morning, a spotlight talk and poster on the origin of cognitive biases in LLMs

bsky.app/profile/itay...

🧠

Instruction-tuned LLMs show amplified cognitive biases — but are these new behaviors, or pretraining ghosts resurfacing?

Excited to share our new paper, accepted to CoLM 2025🎉!

See thread below 👇

#BiasInAI #LLMs #MachineLearning #NLProc

Cognitive biases, hidden knowledge, CoT faithfulness, model editing, and LM4Science

See the thread for details and reach out if you'd like to discuss more!

We’ve added more recent work and more immediately actionable directions for future work. Now published in Computational Linguistics!

* PhD applications direct or via ELLIS @ellis.eu (ellis.eu/news/ellis-p...)

* Post-doc applications direct or via Azrieli (azrielifoundation.org/fellows/inte...) or Zuckerman (zuckermanstem.org/ourprograms/...)

Get in touch if you're in the Boston area and want to chat about anything related to AI interpretability, robustness, interventions, safety, multi-modality, protein/DNA LMs, new architectures, multi-agent communication, or anything else you're excited about!

We’re thrilled to welcome Yonatan Belinkov (expert in #NLP) and Daphna Weinshall (expert in human & machine vision) as visiting scholars for the 2025–26 academic year.

📖 Read more: bit.ly/47QkDID

#AI #MachineVision @boknilev.bsky.social

speaking at

@kempnerinstitute.bsky.social

on Auditing, Dissecting, and Evaluating LLMs

In case you can’t wait so long to hear about it in person, it will also be presented as an oral at @interplay-workshop.bsky.social @colmweb.org 🥳

FUR is a parametric test assessing whether CoTs faithfully verbalize latent reasoning.

The workshop is highly attended and offers great exposure for your finished work, or a chance to get feedback on work in progress.

#emnlp2025 in Suzhou, China!

#BlackboxNLP 2025 invites the submission of archival and non-archival papers on interpreting and explaining NLP models.

📅 Deadlines: Aug 15 (direct submissions), Sept 5 (ARR commitment)

🔗 More details: blackboxnlp.github.io/2025/call/

discord.gg/n5uwjQcxPR

Participants will have the option to write a system description paper that gets published.

New featurization methods?

Circuit pruning?

Better feature attribution?

We'd love to hear about it 👇

Consider submitting your work to the MIB Shared Task, part of this year’s #BlackboxNLP

We welcome submissions of both existing methods and new or experimental POCs!

In a new project led by Yaniv (@YNikankin on the other app), we investigate this gap from a mechanistic perspective, and use our findings to close a third of it! 🧵

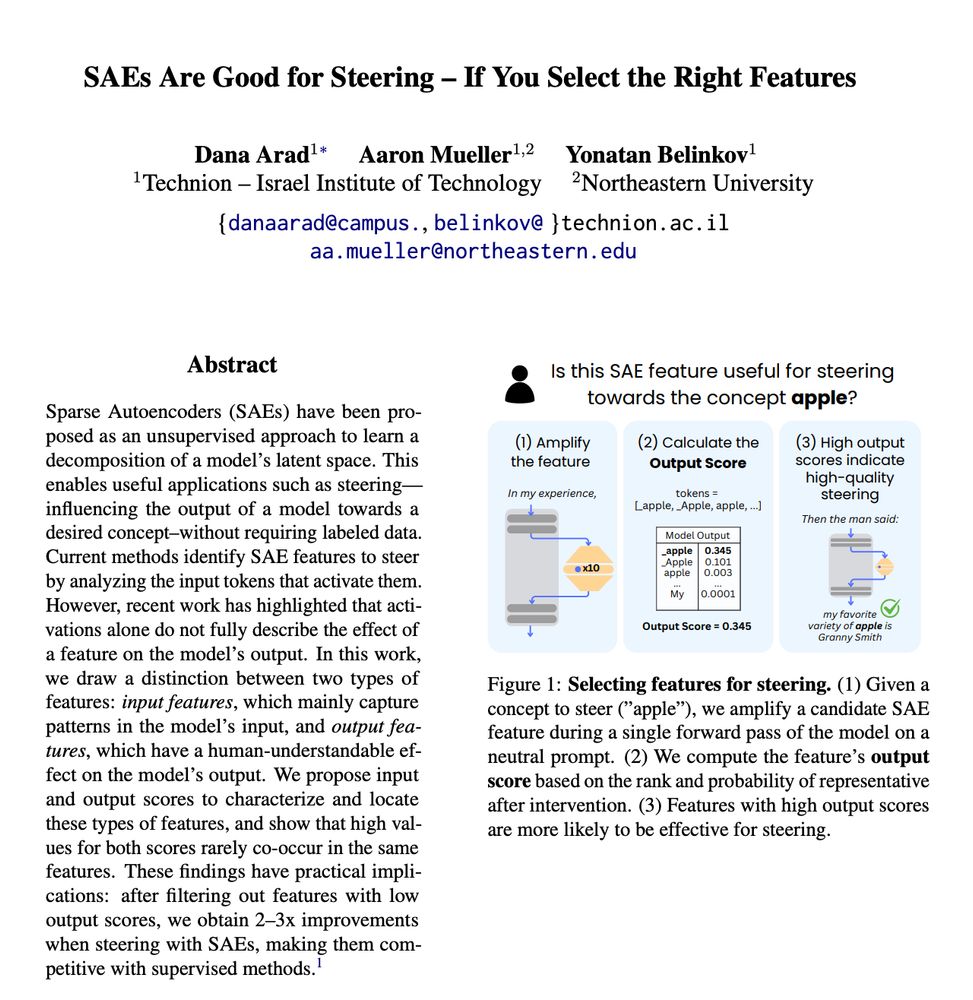

Check out our new preprint - "SAEs Are Good for Steering - If You Select the Right Features" 🧵

REVS: Unlearning Sensitive Information in LMs via Rank Editing in the Vocabulary Space.

LMs memorize and leak sensitive data—emails, SSNs, URLs from their training.

We propose a surgical method to unlearn it.

🧵👇w/ @boknilev.bsky.social @mtutek.bsky.social

1/8

This edition will feature a new shared task on circuits/causal variable localization in LMs, details here: blackboxnlp.github.io/2025/task

See the list here:

bsky.app/profile/amuu...
