newyorker.com/best-books-2...

From an interview in The Guardian by Danny Leigh: www.theguardian.com/film/2025/oc...

www.forbes.com/sites/billco...

Many thanks to all those who attended, and to @jonatomic.bsky.social, Director of Global Risk at FAS, for the great conversation.

#8 Hardcover Nonfiction (www.nytimes.com/books/best-s...)

“I want to bring on the authors of a book, that I think is a must read for every person in this nation.”

“Everyone ought to go and get it, ‘If Anyone Builds It, Everyone Dies,’ a landmark book everyone should get and read.”

rumble.com/v6z4xnk-epis...

📺 Full segments below starting with @cnn.com:

next.frame.io/share/c0b240...

Join us for a conversation between co-author Nate Soares and @jonatomic.bsky.social, Director of Global Risk at the Federation of American Scientists.

Audience Q&A, book signing, and more:

politics-prose.com/nate-soares

New blurbs and media appearances, plus reading group support and ways to help with the book launch!

intelligence.org/2025/09/16/i...

Just over a week to the UK release on Sept. 18th!

From the book's intro: “We open many of the chapters with parables: stories that, we hope, will help convey some points more simply than otherwise.”

Here's a snippet of one of them from the beginning of Chapter 1.

(Extended clip in 🧵)

Hot off the press to our mailbox, from @littlebrown.bsky.social

Sept 16th release is getting close, 20 days and counting!

The Goodreads Giveaway for Eliezer Yudkowsky and Nate Soares' new book is live through Aug 31st.

50 hardcover advance reader copies available (US)!

Enter here: www.goodreads.com/giveaway/sho...

#BookSky #GoodreadsGiveaway

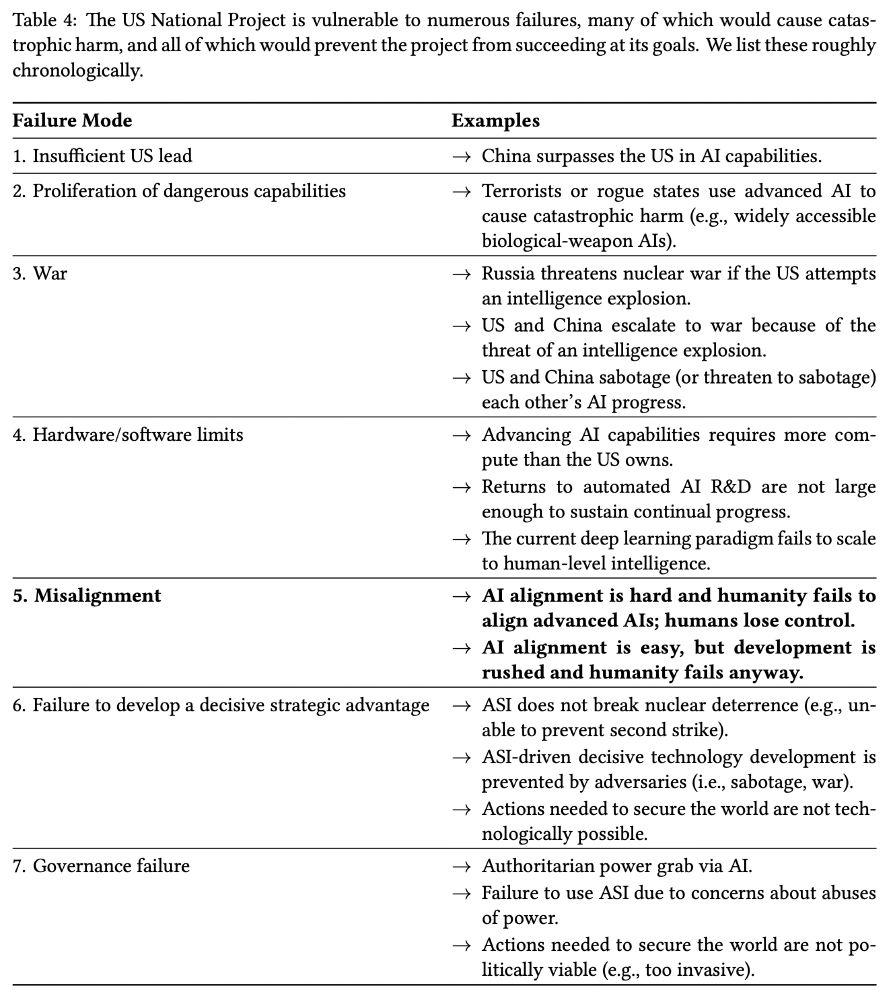

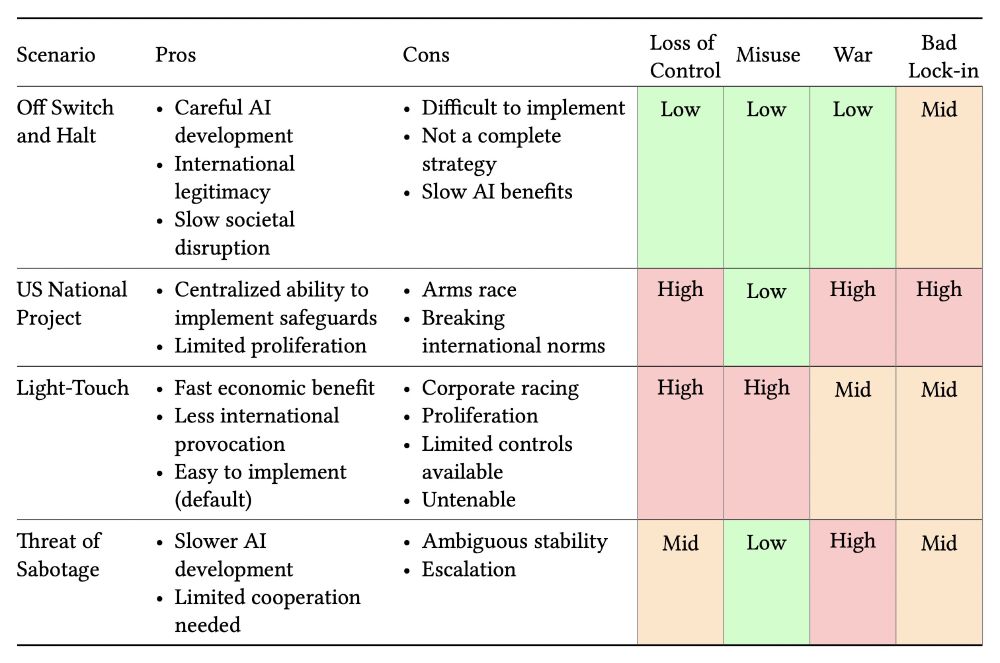

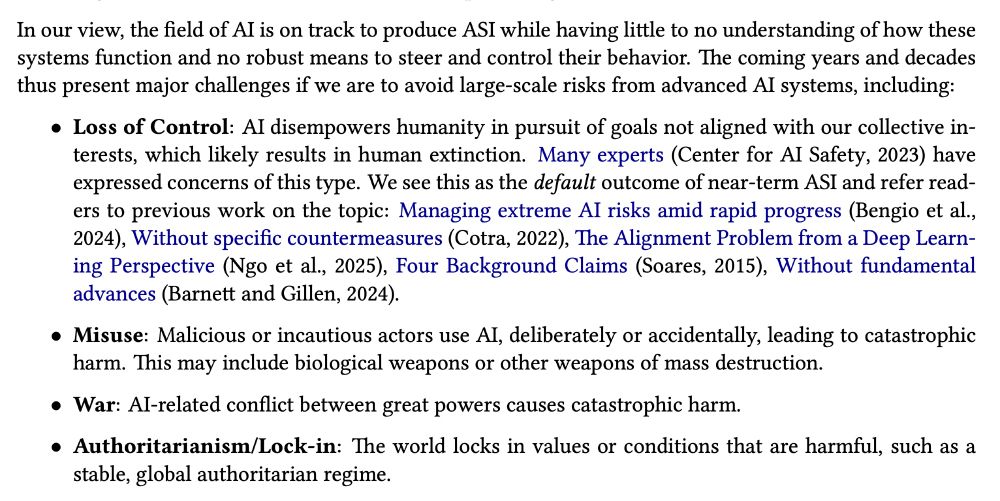

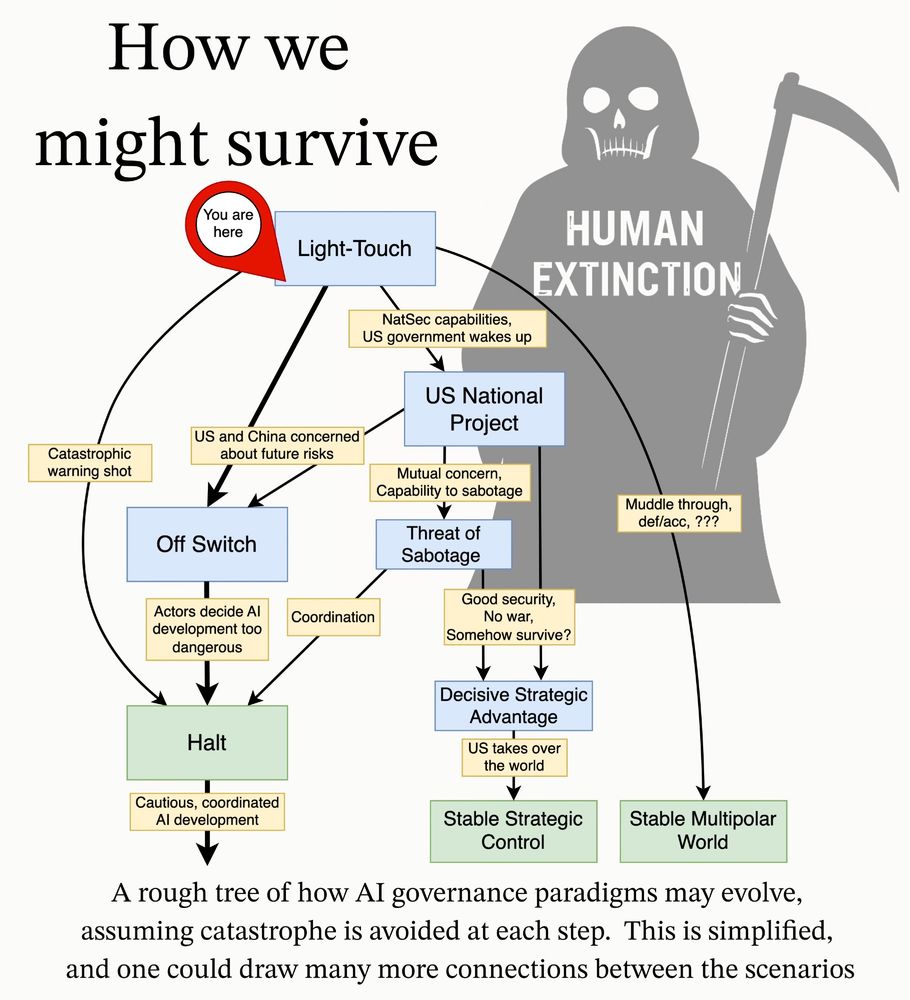

techgov.intelligence.org/research/ai-...
