#8 Hardcover Nonfiction (www.nytimes.com/books/best-s...)

4 hours left to go, and by golly it looks like we’ve got a real shot at securing all the matching.

Thanks everyone! Happy New Year 🎉

This is real counterfactual matching: whatever doesn’t get matched by the end of Dec 31, we don’t get. 🧵

Thanks to all those who stepped up in the last couple of days to close the gap by ~$500k. ❤️

~$700k in matching funds remaining.

If you’re looking for other ways to help, sharing this thread or quote-posting it with why you chose to support us would mean a lot.

(If you don’t see your preferred donation method, including cryptocurrencies, reach out to us at development@intelligence.org.)

The funds come from a Survival and Flourishing Fund matching pledge—not a traditional grant.

You can learn more about SFF’s matching pledges here:

newyorker.com/best-books-2...

His latest video, an hour+ long interview with Nate Soares about “If Anyone Builds It, Everyone Dies,” is a banger. My new favorite!

www.youtube.com/watch?v=5CKu...

🗓️ Tuesday Oct 28 @ 7:30pm at Manny’s in SF.

Get your tickets:

From an interview in The Guardian by Danny Leigh: www.theguardian.com/film/2025/oc...

www.forbes.com/sites/billco...

The researcher Eliezer Yudkowsky argues that we should be very afraid of artificial intelligence’s existential risks.

www.nytimes.com/2025/10/15/o...

youtu.be/2Nn0-kAE5c0?...

Hear the #bookclub #podcast 🎧📖 https://loom.ly/w1hBbWM

Why mitigating existential AI risk should be a top global priority, the problem of pointing minds, a treaty to ban the race to superintelligence, and more.

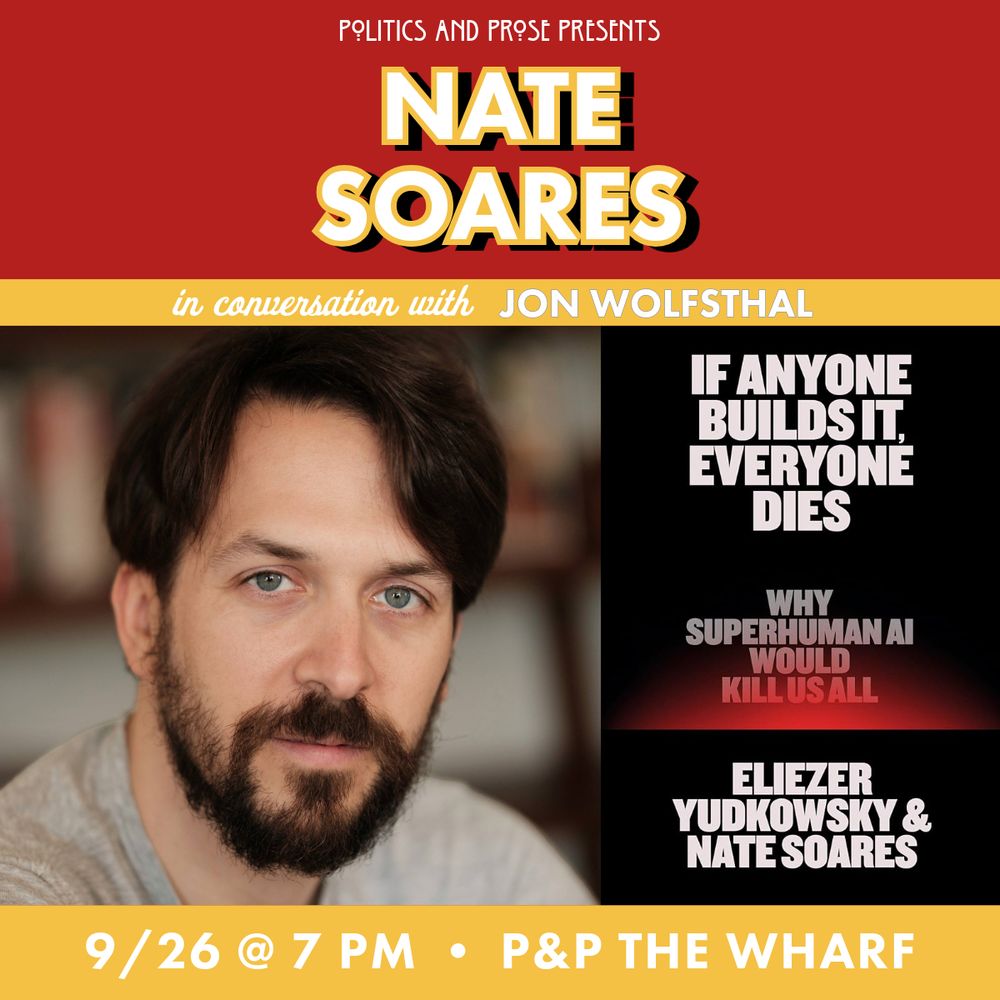

Many thanks to all those who attended, and to @jonatomic.bsky.social, Director of Global Risk at FAS, for the great conversation.

Join us for a conversation between co-author Nate Soares and @jonatomic.bsky.social, Director of Global Risk at the Federation of American Scientists.

Audience Q&A, book signing, and more:

politics-prose.com/nate-soares