Harrison Ritz

@hritz.bsky.social

cybernetic cognitive control 🤖

computational cognitive neuroscience 🧠

postdoc princeton neuro 🍕

he/him 🇨🇦 harrisonritz.github.io

computational cognitive neuroscience 🧠

postdoc princeton neuro 🍕

he/him 🇨🇦 harrisonritz.github.io

A friend got us ‘We All Play’ by Julie Flett — stunningly beautiful picture book

October 7, 2025 at 12:40 PM

A friend got us ‘We All Play’ by Julie Flett — stunningly beautiful picture book

shout out to H. Velde

September 16, 2025 at 4:18 AM

shout out to H. Velde

one reason why the Dobs paper is interesting -- they find that units do learn specific features (those sure look like eyes/nose/trumpet units). strong specialization

So polysemanticity might not be a property of architectures/ learning rules, but tasks and training data

So polysemanticity might not be a property of architectures/ learning rules, but tasks and training data

September 15, 2025 at 3:36 AM

one reason why the Dobs paper is interesting -- they find that units do learn specific features (those sure look like eyes/nose/trumpet units). strong specialization

So polysemanticity might not be a property of architectures/ learning rules, but tasks and training data

So polysemanticity might not be a property of architectures/ learning rules, but tasks and training data

There was recently the Dobs paper, which showed that DNNs do show specialization.

www.science.org/doi/10.1126/...

IIUC a network trained on face and object classification tasks will develop specialized units for both.

www.science.org/doi/10.1126/...

IIUC a network trained on face and object classification tasks will develop specialized units for both.

September 14, 2025 at 11:38 PM

There was recently the Dobs paper, which showed that DNNs do show specialization.

www.science.org/doi/10.1126/...

IIUC a network trained on face and object classification tasks will develop specialized units for both.

www.science.org/doi/10.1126/...

IIUC a network trained on face and object classification tasks will develop specialized units for both.

Awesome new preprint from @jasonleng.bsky.social!

Deadlines in decision making often truncate too-slow responses. Failing to account for these omissions can (severely) bias your DDM parameter estimates.

They offer a great solution to correct for this issue.

doi.org/10.31234/osf...

Deadlines in decision making often truncate too-slow responses. Failing to account for these omissions can (severely) bias your DDM parameter estimates.

They offer a great solution to correct for this issue.

doi.org/10.31234/osf...

September 10, 2025 at 3:48 PM

Awesome new preprint from @jasonleng.bsky.social!

Deadlines in decision making often truncate too-slow responses. Failing to account for these omissions can (severely) bias your DDM parameter estimates.

They offer a great solution to correct for this issue.

doi.org/10.31234/osf...

Deadlines in decision making often truncate too-slow responses. Failing to account for these omissions can (severely) bias your DDM parameter estimates.

They offer a great solution to correct for this issue.

doi.org/10.31234/osf...

No fuckin way. That’s a cool bug

September 6, 2025 at 4:31 AM

No fuckin way. That’s a cool bug

Fast weight programming and linear transformers: from machine learning to neurobiology arxiv.org/abs/2508.084...

August 17, 2025 at 1:56 PM

Fast weight programming and linear transformers: from machine learning to neurobiology arxiv.org/abs/2508.084...

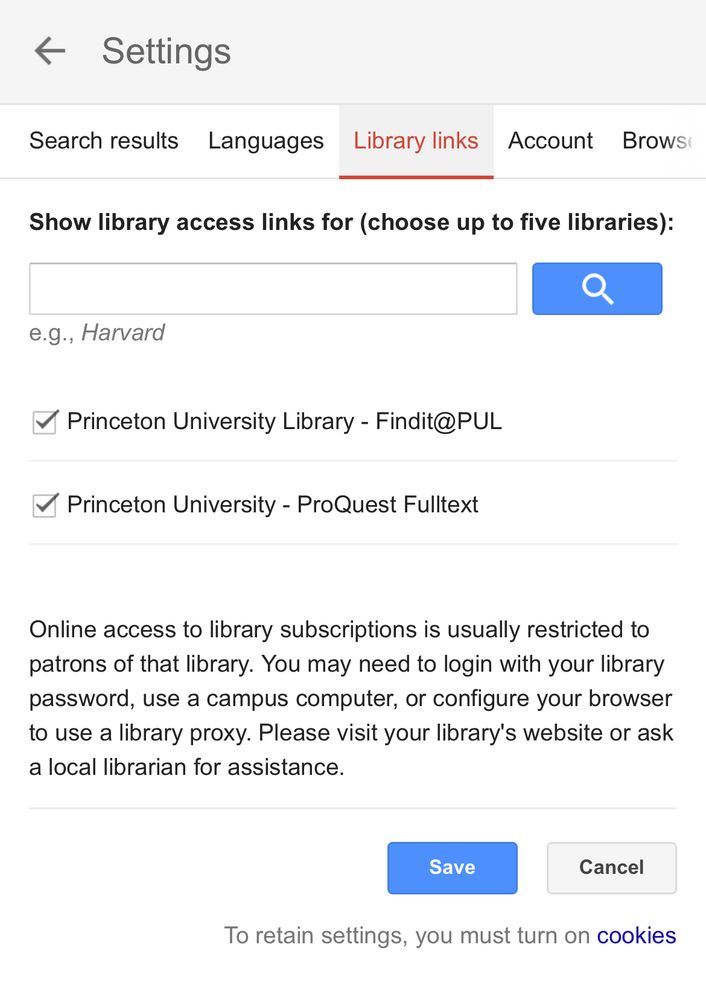

Another Google Scholar tip: if you’re blocked on iOS, you can

(1) disable iCloud relay

(2) enable one-off IP peeking

I definitely prefer (2) — quick once you’ve done it a few times. Annoying how Google tries to push us towards surveillance.

(1) disable iCloud relay

(2) enable one-off IP peeking

I definitely prefer (2) — quick once you’ve done it a few times. Annoying how Google tries to push us towards surveillance.

August 3, 2025 at 7:25 PM

Another Google Scholar tip: if you’re blocked on iOS, you can

(1) disable iCloud relay

(2) enable one-off IP peeking

I definitely prefer (2) — quick once you’ve done it a few times. Annoying how Google tries to push us towards surveillance.

(1) disable iCloud relay

(2) enable one-off IP peeking

I definitely prefer (2) — quick once you’ve done it a few times. Annoying how Google tries to push us towards surveillance.

Did everyone else know that you can turn on ‘library links’ in Google scholar!?

August 2, 2025 at 2:02 PM

Did everyone else know that you can turn on ‘library links’ in Google scholar!?

VARX looks similar to our (quite strong) AR null model, though (1) we included time-varying inputs (stat model > process model) and (2) your AR(N) structure provides richer (non-Markovian) dynamics (🆒).

To be clear, explained variance was not our goal; confirmed good fit before interpreting params

To be clear, explained variance was not our goal; confirmed good fit before interpreting params

July 28, 2025 at 12:01 PM

VARX looks similar to our (quite strong) AR null model, though (1) we included time-varying inputs (stat model > process model) and (2) your AR(N) structure provides richer (non-Markovian) dynamics (🆒).

To be clear, explained variance was not our goal; confirmed good fit before interpreting params

To be clear, explained variance was not our goal; confirmed good fit before interpreting params

There are some convergence results that depend strongly on `A`, for the pre-cue neutral state, and the post-cue task-state convergence (not covered by this version; ‘stability growth’ from earlier fig)

Switch-dep control energy (‘gram contrast’) didn’t depend strongly on A.

Switch-dep control energy (‘gram contrast’) didn’t depend strongly on A.

July 28, 2025 at 12:01 PM

There are some convergence results that depend strongly on `A`, for the pre-cue neutral state, and the post-cue task-state convergence (not covered by this version; ‘stability growth’ from earlier fig)

Switch-dep control energy (‘gram contrast’) didn’t depend strongly on A.

Switch-dep control energy (‘gram contrast’) didn’t depend strongly on A.

(3) after the cue, we measured control energy for the cued tasks by using recursive Lyapunov equations.

We found that switch-trained RNNs had greater energy on switch trials, similar to what we found in EEG.

This shows that control occurs both before and after the task cue.

We found that switch-trained RNNs had greater energy on switch trials, similar to what we found in EEG.

This shows that control occurs both before and after the task cue.

July 27, 2025 at 9:31 PM

(3) after the cue, we measured control energy for the cued tasks by using recursive Lyapunov equations.

We found that switch-trained RNNs had greater energy on switch trials, similar to what we found in EEG.

This shows that control occurs both before and after the task cue.

We found that switch-trained RNNs had greater energy on switch trials, similar to what we found in EEG.

This shows that control occurs both before and after the task cue.

(2) why does ITI matter so much?

During the ITI, RNNs move into a neutral state, like a tennis player recovering to the center of the court. Short ITIs don’t give enough time.

In both switch-trained RNNs and EEG, the initial conditions (end of ITI) were near the midpoint between the task states.

During the ITI, RNNs move into a neutral state, like a tennis player recovering to the center of the court. Short ITIs don’t give enough time.

In both switch-trained RNNs and EEG, the initial conditions (end of ITI) were near the midpoint between the task states.

July 27, 2025 at 9:31 PM

(2) why does ITI matter so much?

During the ITI, RNNs move into a neutral state, like a tennis player recovering to the center of the court. Short ITIs don’t give enough time.

In both switch-trained RNNs and EEG, the initial conditions (end of ITI) were near the midpoint between the task states.

During the ITI, RNNs move into a neutral state, like a tennis player recovering to the center of the court. Short ITIs don’t give enough time.

In both switch-trained RNNs and EEG, the initial conditions (end of ITI) were near the midpoint between the task states.

(1) correlating task states between switch and repeat trials showed that switch-trained RNNs had similar trajectories.

This was also the case with EEG. Critically, just changing the ITI for RNNs reproduced the differences between these EEG datasets.

This just ‘fell-out’ of the modeling!

This was also the case with EEG. Critically, just changing the ITI for RNNs reproduced the differences between these EEG datasets.

This just ‘fell-out’ of the modeling!

July 27, 2025 at 9:31 PM

(1) correlating task states between switch and repeat trials showed that switch-trained RNNs had similar trajectories.

This was also the case with EEG. Critically, just changing the ITI for RNNs reproduced the differences between these EEG datasets.

This just ‘fell-out’ of the modeling!

This was also the case with EEG. Critically, just changing the ITI for RNNs reproduced the differences between these EEG datasets.

This just ‘fell-out’ of the modeling!

To compare RNNs and brains, we re-analyzed two EEG datasets with SSMs.

Like RNNs, these datasets had very different ITIs (900ms vs 2600ms).

High-d SSMs fit great here too, better than AR models or even EEG-trained RNNs.

*So what can SSMs tell us about RNN’s apparent task-switching signatures?*

Like RNNs, these datasets had very different ITIs (900ms vs 2600ms).

High-d SSMs fit great here too, better than AR models or even EEG-trained RNNs.

*So what can SSMs tell us about RNN’s apparent task-switching signatures?*

July 27, 2025 at 9:31 PM

To compare RNNs and brains, we re-analyzed two EEG datasets with SSMs.

Like RNNs, these datasets had very different ITIs (900ms vs 2600ms).

High-d SSMs fit great here too, better than AR models or even EEG-trained RNNs.

*So what can SSMs tell us about RNN’s apparent task-switching signatures?*

Like RNNs, these datasets had very different ITIs (900ms vs 2600ms).

High-d SSMs fit great here too, better than AR models or even EEG-trained RNNs.

*So what can SSMs tell us about RNN’s apparent task-switching signatures?*

Visualization of RNNs dynamics revealed a core set of learned strategies, which we quantified with SSMs.

(1) RNNs have similar dynamics on switch and repeat trials

(2) RNNs converge to the center of the task space between trials

(3) RNNs have stronger dynamics when switching tasks

(1) RNNs have similar dynamics on switch and repeat trials

(2) RNNs converge to the center of the task space between trials

(3) RNNs have stronger dynamics when switching tasks

July 27, 2025 at 9:31 PM

Visualization of RNNs dynamics revealed a core set of learned strategies, which we quantified with SSMs.

(1) RNNs have similar dynamics on switch and repeat trials

(2) RNNs converge to the center of the task space between trials

(3) RNNs have stronger dynamics when switching tasks

(1) RNNs have similar dynamics on switch and repeat trials

(2) RNNs converge to the center of the task space between trials

(3) RNNs have stronger dynamics when switching tasks

To understand RNN computations, we globally linearized their hidden-unit activity using high-dimensional linear-Gaussian state-space models (SSMs).

High-d SSMs (latents dim > obs dim) have great performance, and are interpretable through tools from dynamical systems and control theory.

High-d SSMs (latents dim > obs dim) have great performance, and are interpretable through tools from dynamical systems and control theory.

July 27, 2025 at 9:31 PM

To understand RNN computations, we globally linearized their hidden-unit activity using high-dimensional linear-Gaussian state-space models (SSMs).

High-d SSMs (latents dim > obs dim) have great performance, and are interpretable through tools from dynamical systems and control theory.

High-d SSMs (latents dim > obs dim) have great performance, and are interpretable through tools from dynamical systems and control theory.

To capture preparation for upcoming trials (‘reconfiguration’ theory), we varied networks experience with switching tasks (2-Trial) vs performing isolated task (1-Trial).

To capture interference from previous trials (‘inertia’ theory), we varied the ITI between trials.

To capture interference from previous trials (‘inertia’ theory), we varied the ITI between trials.

July 27, 2025 at 9:31 PM

To capture preparation for upcoming trials (‘reconfiguration’ theory), we varied networks experience with switching tasks (2-Trial) vs performing isolated task (1-Trial).

To capture interference from previous trials (‘inertia’ theory), we varied the ITI between trials.

To capture interference from previous trials (‘inertia’ theory), we varied the ITI between trials.

I see what you’re saying! No magic solutions for shit data. Encoding models just fail better (variance instead of bias).

fwiw, we found that reliability-based stats did even better than encoding models under high noise

www.nature.com/articles/s41...

fwiw, we found that reliability-based stats did even better than encoding models under high noise

www.nature.com/articles/s41...

June 19, 2025 at 12:08 PM

I see what you’re saying! No magic solutions for shit data. Encoding models just fail better (variance instead of bias).

fwiw, we found that reliability-based stats did even better than encoding models under high noise

www.nature.com/articles/s41...

fwiw, we found that reliability-based stats did even better than encoding models under high noise

www.nature.com/articles/s41...

Reminder: don't do decoding!

When you have more noise in your data (neuroimaging) than you do in your labels (face, house), encoding is better than decoding.

Not to mention that encoding models make it easier to control for covariates.

When you have more noise in your data (neuroimaging) than you do in your labels (face, house), encoding is better than decoding.

Not to mention that encoding models make it easier to control for covariates.

June 18, 2025 at 6:55 PM

Reminder: don't do decoding!

When you have more noise in your data (neuroimaging) than you do in your labels (face, house), encoding is better than decoding.

Not to mention that encoding models make it easier to control for covariates.

When you have more noise in your data (neuroimaging) than you do in your labels (face, house), encoding is better than decoding.

Not to mention that encoding models make it easier to control for covariates.