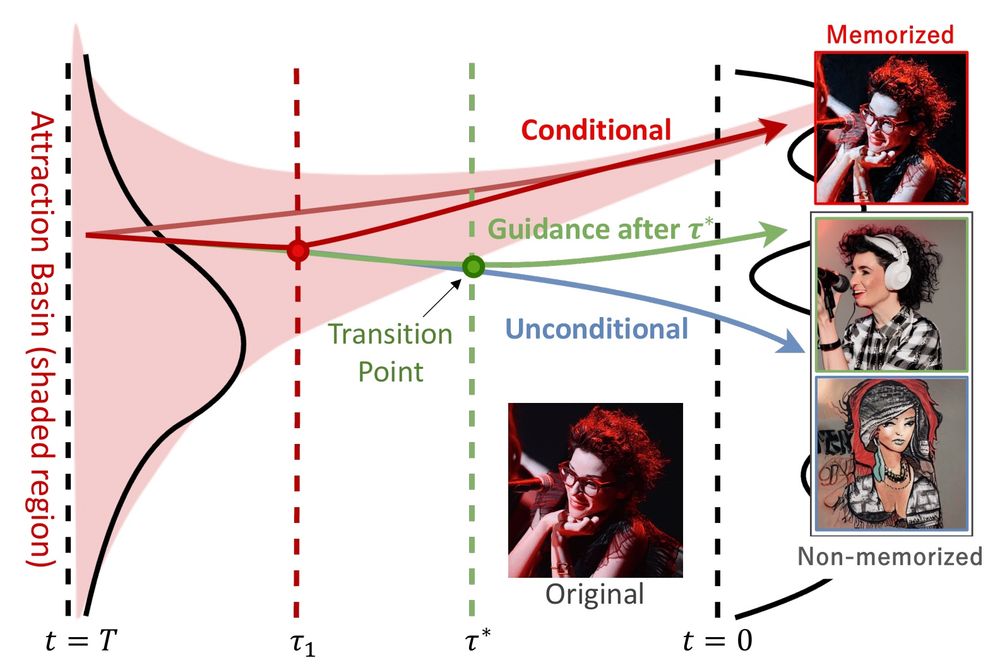

In particular, from a privacy perspective, "was training data memorized?" is a yes/no question; we aren't trying to quantify how much data was memorized beyond "some" vs "none".

And the big question is: Is that true?

I found that the best answer lies in between hype and skepticism.

www.vox.com/future-perfe...

www.nature.com/articles/s41...

![Lemma 4.5 from https://arxiv.org/abs/1712.07196

Let X_1, ..., X_k be independent samples from N(0,1). Then

E[max{X_1^2, ..., X_k^2}] <= 2ln(2k).](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:thfnaqgktdwqbm42radc322i/bafkreidkql7bujw7docefdl3shay4r4dn7pw74slmhm3dlhtn4s3li4jyy@jpeg)
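A quick way to get a feel for the bound in that lemma is to simulate it. The sketch below is a Monte Carlo sanity check, not a proof: it estimates E[max{X_1^2, ..., X_k^2}] for independent standard normals and compares the estimate with 2ln(2k). The function names, trial count, and seed are illustrative choices and do not come from the paper.

```python
import math
import random


def estimate_expected_max_square(k, trials=5000, seed=0):
    """Monte Carlo estimate of E[max(X_1^2, ..., X_k^2)] for X_i ~ N(0, 1)."""
    rng = random.Random(seed)  # fixed seed so repeated runs give the same estimate
    total = 0.0
    for _ in range(trials):
        # Draw k independent standard normals and keep the largest square.
        total += max(rng.gauss(0.0, 1.0) ** 2 for _ in range(k))
    return total / trials


def lemma_bound(k):
    """Right-hand side of the lemma as quoted: 2 * ln(2k)."""
    return 2.0 * math.log(2 * k)


if __name__ == "__main__":
    for k in (1, 10, 100):
        est = estimate_expected_max_square(k)
        print(f"k={k:>3}  estimate={est:.3f}  bound={lemma_bound(k):.3f}")
```

For k = 1 the left-hand side is exactly E[X^2] = 1, while the bound is 2ln(2) ≈ 1.386, so the inequality holds with some slack even in the smallest case.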

@simonwillison.net, especially this analogy between today's datacenter buildout and the 19th century railway boom. The parallels are striking. simonwillison.net/2024/Dec/31/...

That threatens home appliances and aging energy infrastructure, and increases fire risks.

📊 1M public posts from Bluesky's firehose API

🔍 Includes text, metadata, and language predictions

🔬 Perfect to experiment with using ML for Bluesky 🤗

huggingface.co/datasets/blu...

arxiv.org/abs/2411.04118

Let's start with "What are embeddings" by @vickiboykis.com

The book is a great summary of embeddings, from history to modern approaches.

The best part: it's free.

Link: vickiboykis.com/what_are_emb...

bsky-follow-finder.theo.io

arxiv.org/abs/2407.11072

TL;DR — An attacker can convince your favorite LLM to suggest vulnerable code with just a minor change to the prompt!
