https://www.g-k.ai

More info on the new professorship: hpi.de/artikel/prof...

It's been an incredible journey of research and collaboration. Thank you to everyone who has made this possible. We are very much looking forward to the years to come!

#AIMAnniversary #AIMNews

Amer Sinha, Thomas Mesnard, Ryan McKenna, Daogao Liu, Christopher A. Choquette-Choo, Yangsibo Huang, Da Yu, George Kaissis, Zachary Charles, Ruibo Liu, Lynn Chua, Pritish Kamath, Pasin Manurangsi, Steve He, Chiyuan ...

http://arxiv.org/abs/2510.15001

The SaTML 2026 submission site is live 👉 hotcrp.satml.org

🗓️ Deadline: Sept 24, 2025

@satml.org

Read more in our blog post and on the EurIPS website:

blog.neurips.cc/2025/07/16/n...

eurips.cc

Bogdan Kulynych, Juan Felipe Gomez, Georgios Kaissis, Jamie Hayes, Borja Balle, Flavio du Pin Calmon, Jean Louis Raisaro

http://arxiv.org/abs/2507.06969

Meenatchi Sundaram Muthu Selva Annamalai, Borja Balle, Jamie Hayes, Georgios Kaissis, Emiliano De Cristofaro

http://arxiv.org/abs/2506.16666

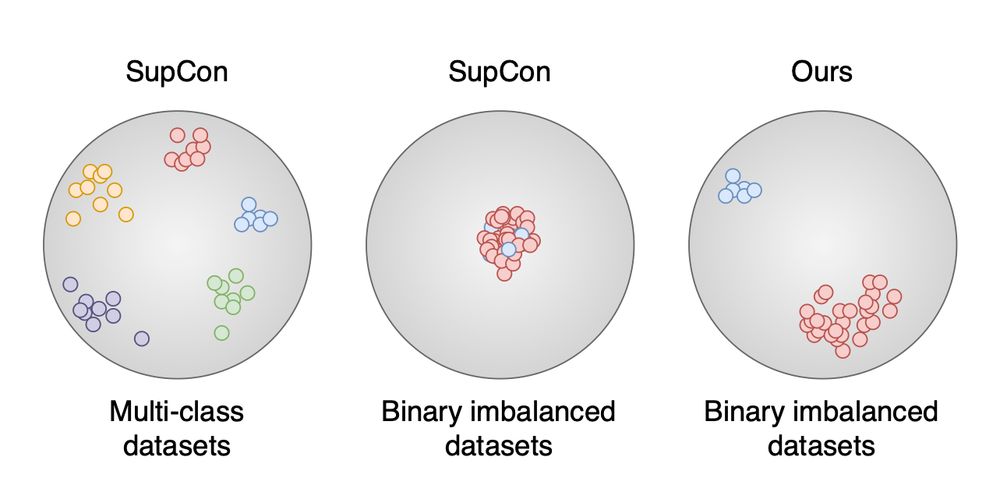

📄 Read the paper: tinyurl.com/k9fz456a

🎥 Watch the video: tinyurl.com/2jx528m5

arxiv.org/abs/2503.17024

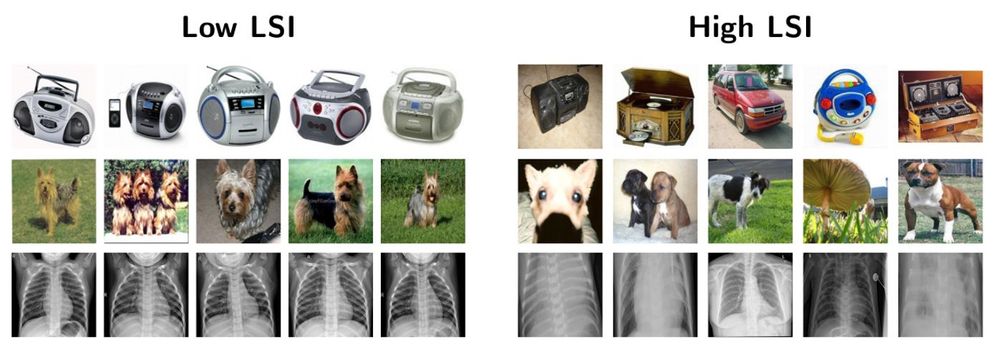

Always happy to meet anyone working on representation learning or tabular DL and medical data

For the first time since 1992, the International Olympiad in Informatics (IOI) is returning to Germany, and in cooperation with Bundesweite Informatikwettbewerbe (BWINF) we are proud hosts of this renowned competition.

We are committed to enshrining scientific freedom in EU law, creating a 7-year ‘super grant’ to attract top researchers, and expanding support for the most promising scientists.

More → europa.eu/!TTbWbJ

But many methods aren't mathematically sound and don’t scale.

But how could we improve this for large models?

1/n

Paper: lnkd.in/esKRqF5p

Code: lnkd.in/eZFvDA5Q

(Thread incoming 👇)

Juan Felipe Gomez, Bogdan Kulynych, Georgios Kaissis, Jamie Hayes, Borja Balle, Antti Honkela

http://arxiv.org/abs/2503.10945

👉Learn more:

t1p.de/2rgfh

👉Check out the interview with Prof. Julia Schnabel & Dr. Georgios Kaissis:

t1p.de/e9g6a

#TrustworthyAI
