3rd and final year PhD Student

Researching the applications and limitations of multimodal transformer encoder and decoder models.

Join us for #KnitTogether25 ⛰️✨ – a week to focus on “Bias and Social Factors in NLP”!

Let's explore and exchange research - together. But we also cook and explore nature - together. 🚀💡

🔗 knittogether.github.io/kt25

#NLPProc

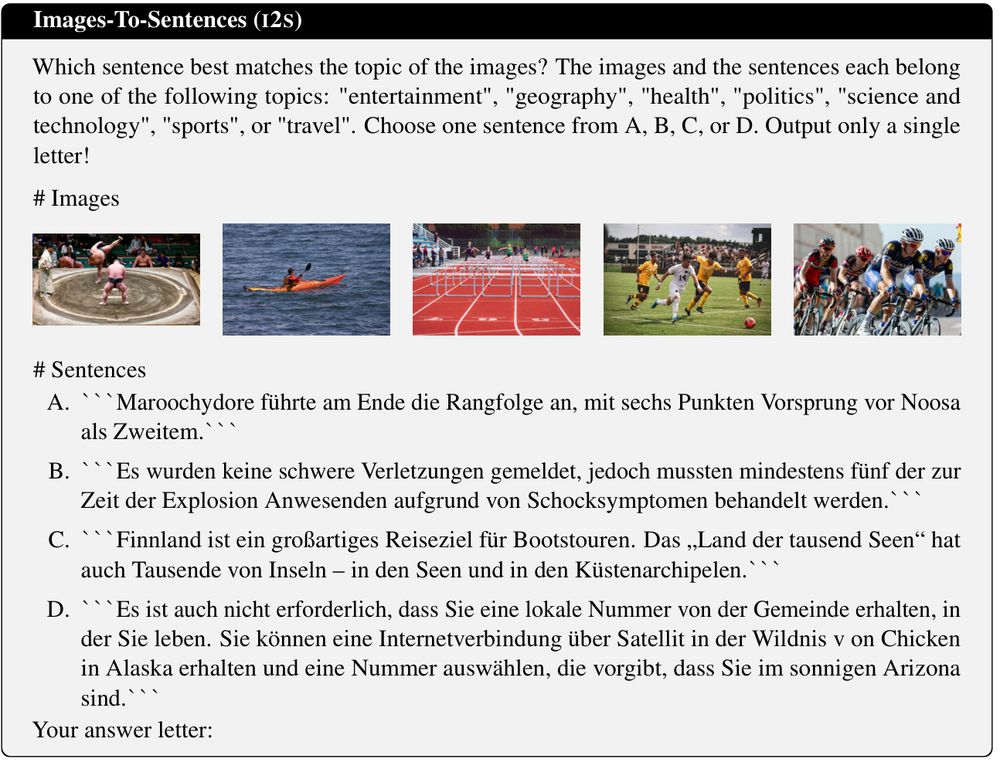

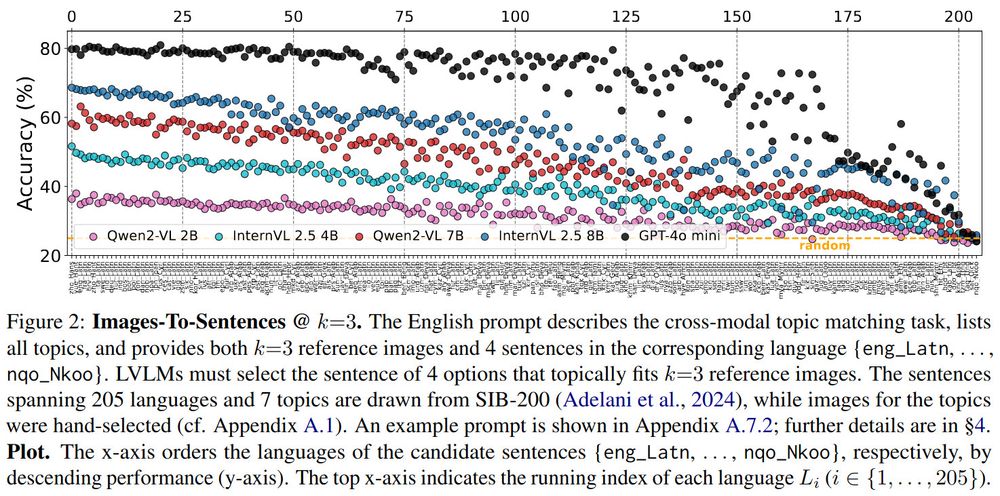

🤔 Tasks: Given images (sentences), select the topically matching sentence (image).

Arxiv: arxiv.org/abs/2502.12852

HF: huggingface.co/datasets/Wue...

Details👇

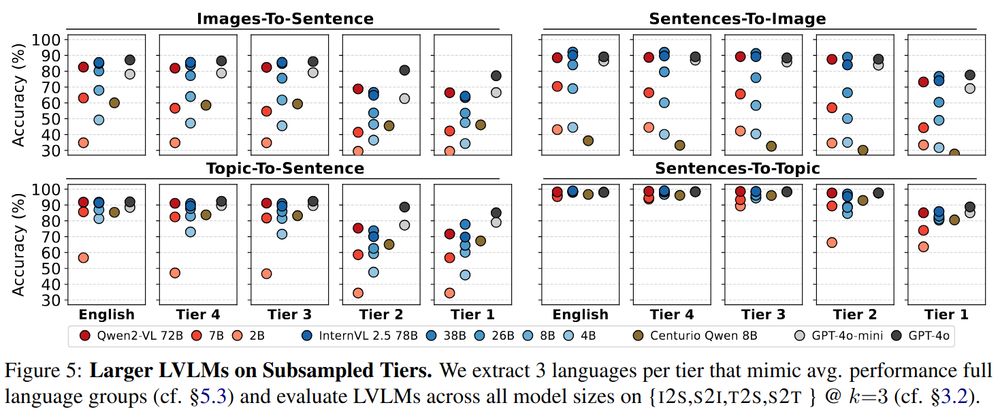

Images/Topic→Sentence (for I/T, pick S): narrows with less textual support (left)

Sentences→Image/Topic (for S, pick I/T): increases with less VL support worse (right)

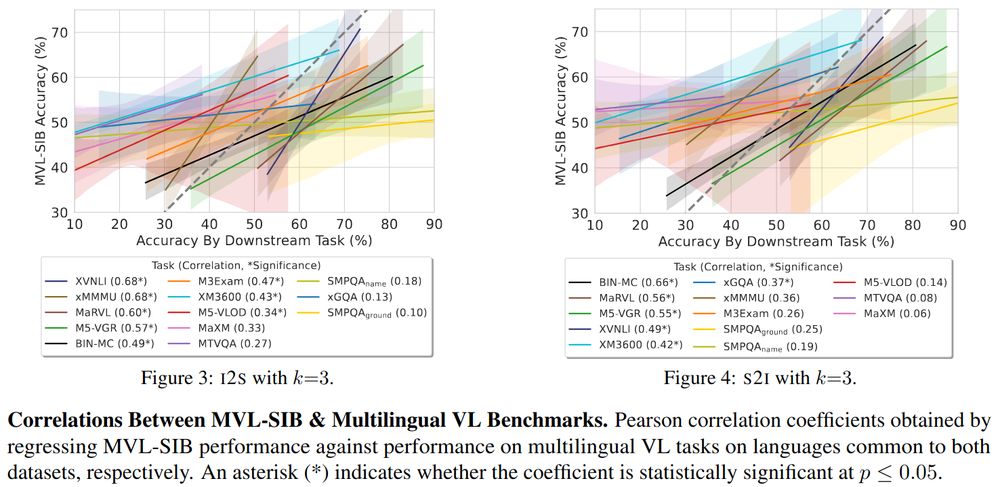

🤗 Images-To-Sentence (given images, select the topically fitting sentence) & Sentences-To-Image (given sentences, pick the topically matching image) probe complementary aspects of VLU
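
To make the two task directions concrete, here is a minimal sketch of the selection step. It assumes images and sentences have already been mapped to vectors in a shared embedding space. The function names (`cosine`, `mean_vector`, `pick_best`) and the toy vectors are hypothetical, and similarity-based selection is only one plausible baseline, not necessarily how the benchmark evaluates models.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def mean_vector(vectors):
    """Element-wise mean of a non-empty list of equal-length vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def pick_best(context_vectors, candidate_vectors):
    """Return the index of the candidate closest to the mean context vector.

    Assumption: context and candidates live in the same embedding space.
    """
    query = mean_vector(context_vectors)
    scores = [cosine(query, c) for c in candidate_vectors]
    return max(range(len(scores)), key=scores.__getitem__)


# Toy, made-up embeddings (illustration only).
images = [[0.9, 0.1, 0.0], [0.8, 0.2, 0.1]]
sentences = [[0.0, 1.0, 0.0], [1.0, 0.1, 0.0], [0.0, 0.0, 1.0]]

# Images-To-Sentence: the images are the context, sentences are the candidates.
best_sentence = pick_best(images, sentences)

# Sentences-To-Image: the same function with the roles swapped.
best_image = pick_best([sentences[1]], images)
```

Both directions reuse the same scoring function. Only which modality acts as context and which supplies the candidates changes.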
