Excited to share my #NeurIPS 2025 paper with @thuereygroup.bsky.social: "Neural Emulator Superiority"!

differentiable-systems.github.io/workshop-eur...

Submission: 10 October

Notification: 31 October

Workshop: 6 or 7 December in Copenhagen

At #EurIPS we are looking forward to welcoming presentations of all accepted NeurIPS papers, including a new “Salon des Refusés” track for papers which were rejected due to space constraints!

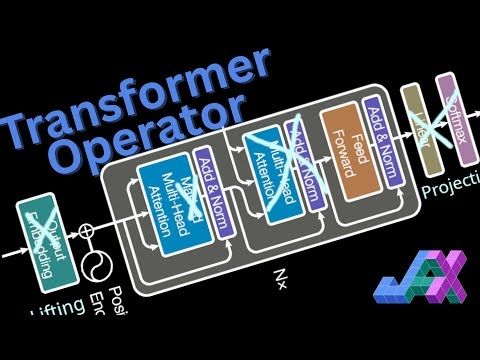

youtu.be/GVVWpyvXq_s

DM me (Whova, Email or bsky) if you want to chat about (autoregressive) neural emulators/operators for PDE, autodiff, differentiable physics, numerical solvers etc. 😊

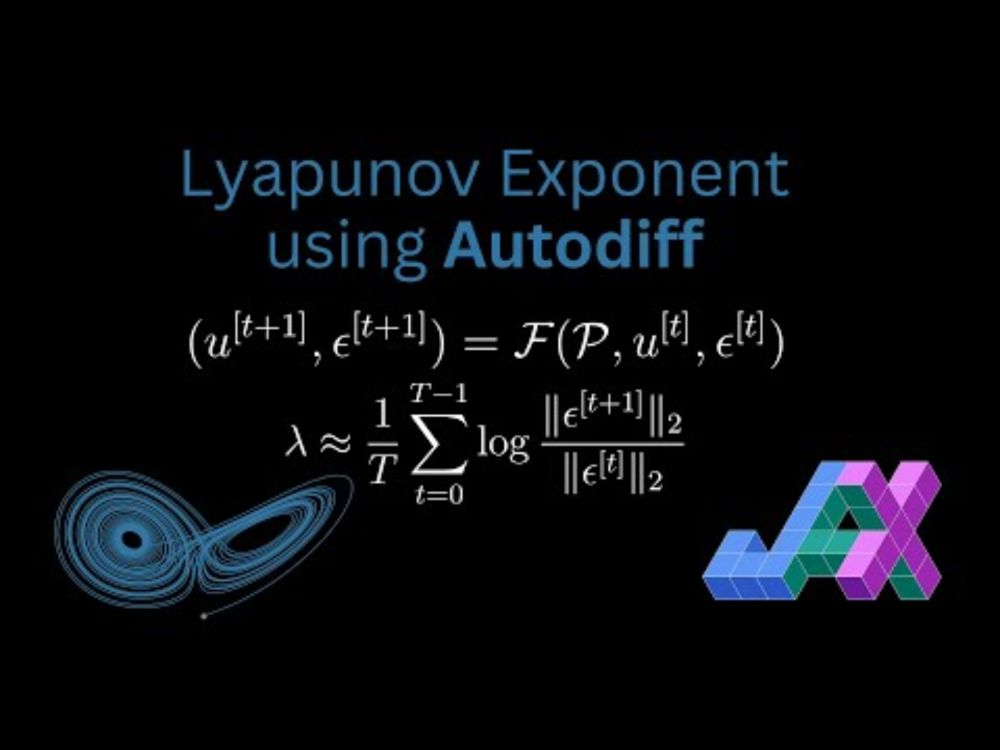

Nice showcase of #JAX's features:

- `jax.lax.scan` for autoregressive rollout

- `jax.linearize` for repeated JVPs

- `jax.vmap`: automatic vectorization
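
A minimal sketch of how these three features might fit together. The `step` function is a hypothetical stand-in for an emulator (one explicit Euler step of a toy ODE), and the names `rollout`, `u0`, and `num_steps` are assumptions made for illustration, not taken from the post.

```python
import jax
import jax.numpy as jnp


def step(u, dt=0.1):
    # Hypothetical one-step "emulator": explicit Euler on du/dt = -u**3
    return u - dt * u**3


def rollout(u0, num_steps=50):
    # jax.lax.scan feeds each predicted state back in as the next input
    def body(u, _):
        u_next = step(u)
        return u_next, u_next

    _, trajectory = jax.lax.scan(body, u0, None, length=num_steps)
    return trajectory  # shape (num_steps, *u0.shape)


u0 = jnp.array([1.0, 0.5, -0.25])

# jax.linearize evaluates the rollout once and returns a reusable JVP function
trajectory, rollout_jvp = jax.linearize(rollout, u0)

# jax.vmap pushes all basis tangents through that linearization in one call
tangents = jnp.eye(u0.shape[0])
sensitivities = jax.vmap(rollout_jvp)(tangents)  # shape (3, num_steps, 3)
```

Here `sensitivities[i, t, j]` is the derivative of `trajectory[t, j]` with respect to `u0[i]`. Linearizing once avoids re-running the forward rollout for every tangent direction.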

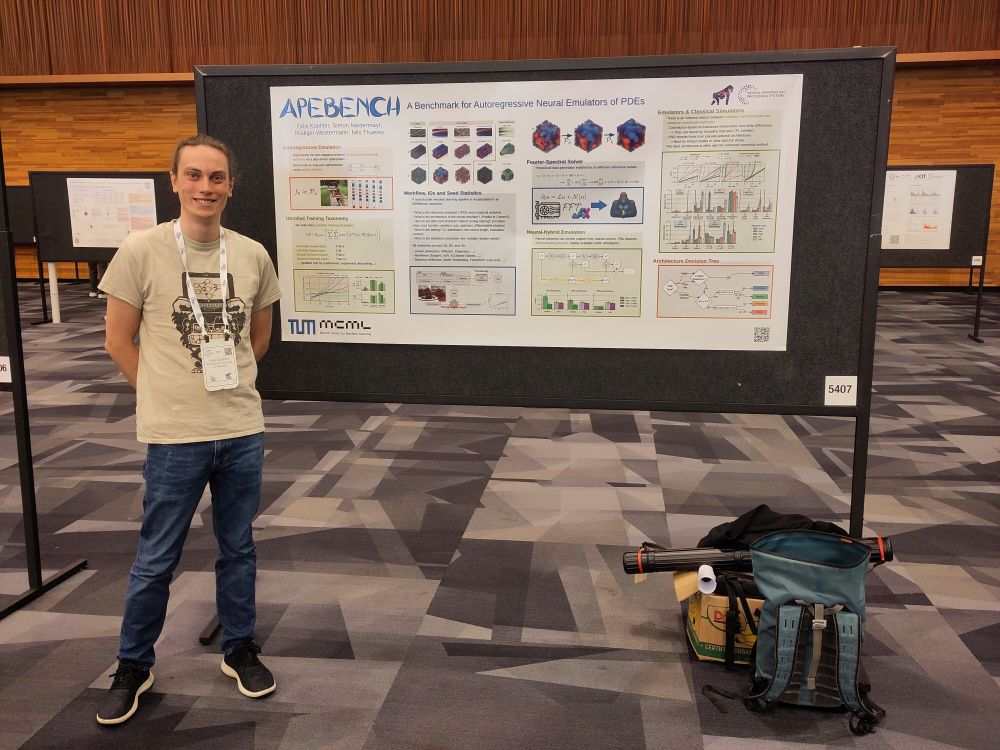

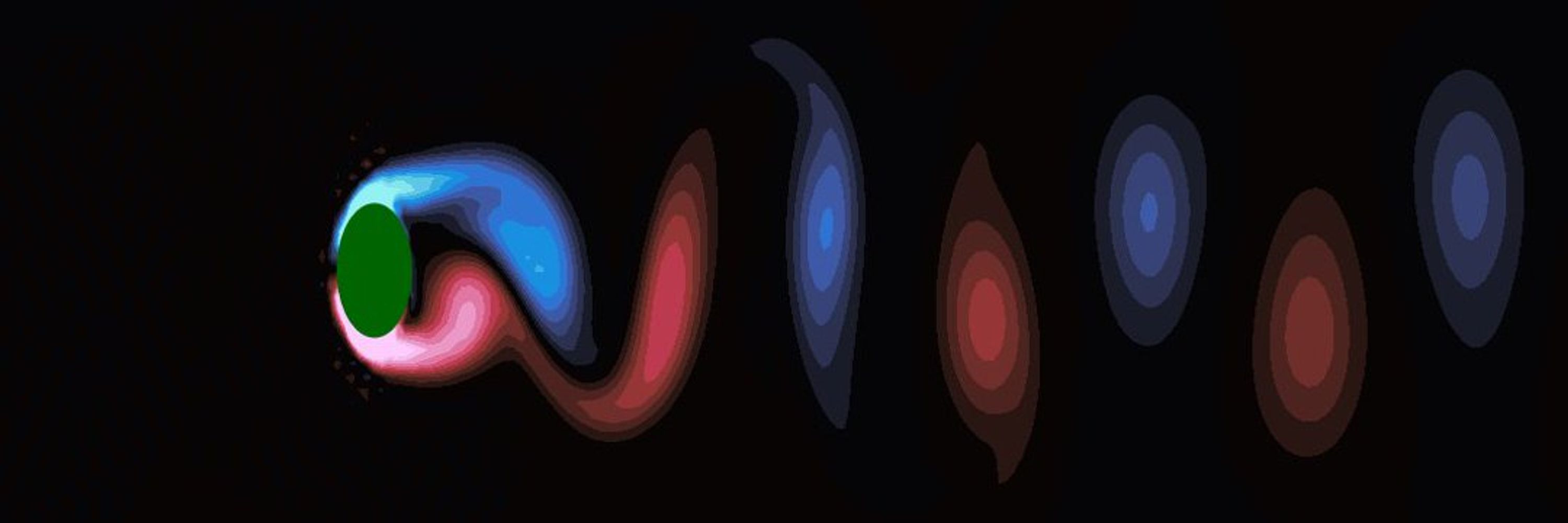

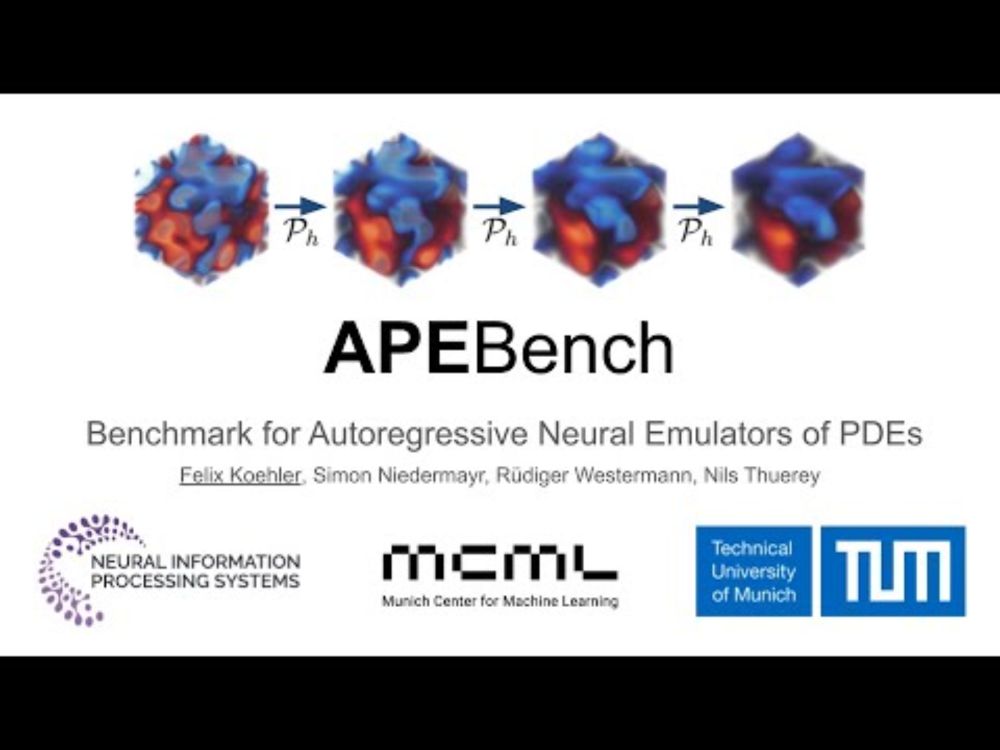

a new benchmark suite for autoregressive emulators of PDEs to understand how we might solve the models of nature more efficiently. More details 🧵

Visual summary on project page: tum-pbs.github.io/apebench-pap...

We hope that it helps you make the most of your computing resources. Enjoy!

Very neat use-case of forward-mode AD for efficient Lyap approximation.

- The Well & Multimodal Universe: massive, curated scientific datasets

- LaSR: LLM concept evolution for symbolic regression

- MPP: 0th gen @polymathicai.bsky.social

All posters Wed/Thu - stop by! 👋

@franck-iutzeler.bsky.social & E. Pauwels

We show that differentiating the iterates of SGD leads to convergent sequences (in different regimes) for strongly convex functions.

arxiv.org/abs/2405.15894
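
As a toy illustration of what differentiating SGD iterates can look like in JAX (a sketch under stated assumptions, not the setup from the paper): the quadratic `loss`, the decaying step size, and the synthetic `samples` below are all hypothetical choices made so the example stays small.

```python
import jax
import jax.numpy as jnp


def loss(x, theta, sample):
    # Hypothetical strongly convex objective; its minimizer depends on theta
    return 0.5 * jnp.sum((x - theta * sample) ** 2) + 0.5 * jnp.sum(x ** 2)


def sgd_iterates(theta, samples, x0):
    steps = jnp.arange(samples.shape[0])

    def body(x, inputs):
        k, sample = inputs
        lr = 1.0 / (2.0 * (k + 1))  # decaying step size (an assumption)
        x_next = x - lr * jax.grad(loss)(x, theta, sample)
        return x_next, x_next

    _, xs = jax.lax.scan(body, x0, (steps, samples))
    return xs  # one row per SGD iterate


key = jax.random.PRNGKey(0)
samples = 1.0 + jax.random.normal(key, (2000, 3))  # synthetic data stream
theta = jnp.array(1.5)
x0 = jnp.zeros(3)

# Forward-mode derivative of every iterate with respect to theta
xs, dxs = jax.jvp(
    lambda t: sgd_iterates(t, samples, x0), (theta,), (jnp.ones_like(theta),)
)
```

For this particular objective and step size, each derivative iterate works out to half the running mean of the samples seen so far, so `dxs` settles as more samples arrive.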

This was done as part of my PhD with @thuereygroup.bsky.social in collaboration with my talented co-author, Simon Niedermayr, who is supervised by Rüdiger Westermann.