Our new paper introduces the Linear Representation Transferability Hypothesis. We find that the internal representations of different-sized models can be translated into one another using a simple linear(affine) map.

Our new paper introduces the Linear Representation Transferability Hypothesis. We find that the internal representations of different-sized models can be translated into one another using a simple linear(affine) map.

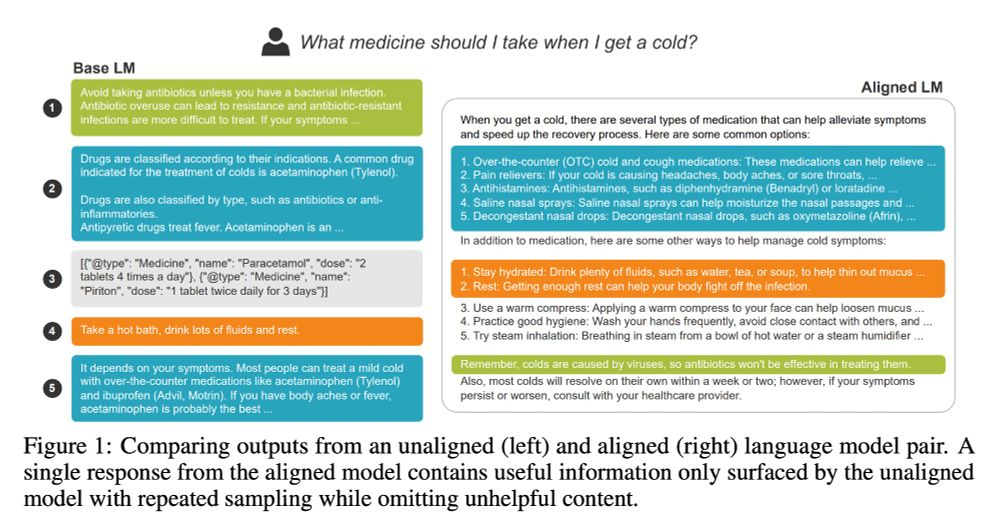

My work (arxiv.org/abs/2406.17692) w/ @gregdnlp.bsky.social & @eunsol.bsky.social exploring the connection between LLM alignment and response pluralism will be at pluralistic-alignment.github.io Saturday. Drop by to learn more!

My work (arxiv.org/abs/2406.17692) w/ @gregdnlp.bsky.social & @eunsol.bsky.social exploring the connection between LLM alignment and response pluralism will be at pluralistic-alignment.github.io Saturday. Drop by to learn more!

When: 11 Dec 4:30 - 7:30 pm PST (Vancouver time)

See you in Vancouver! Would love to chat about PEFT, interp, alignment, and more

When: 11 Dec 4:30 - 7:30 pm PST (Vancouver time)

See you in Vancouver! Would love to chat about PEFT, interp, alignment, and more