Standing on the shoulders of giants

TLDR; 🇨🇦🐧🧑🏼💻🚴🏎️🧗🏼 💩posting.

Haver of opinions that are all my own.

I mainly do #HPC #BLAS #AI #RVV and #clusters

Proud French Canadian, you’ll hear about it

(I help with HPC.social)

I think it's relevant to add some high-level context on *why* we vendors are reducing FP64; it's not just chasing AI, it's the sheer *cost* of FP64.

The short ...
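Here is a rough back-of-envelope illustration of that cost. It assumes that multiplier area scales roughly with the square of the significand width. That is a first-order model I'm adding here, not a number from the post, and real designs vary a lot.

```python
# Back-of-envelope sketch. ASSUMPTION: multiplier area ~ (significand bits)^2,
# a first-order model for array multipliers; real FPUs differ.
SIGNIFICAND_BITS = {
    "FP64": 53,  # IEEE 754 binary64: 52 stored bits + implicit leading 1
    "FP32": 24,  # IEEE 754 binary32: 23 stored bits + implicit leading 1
    "BF16": 8,   # bfloat16: 7 stored bits + implicit leading 1
}

def relative_multiplier_area(fmt: str, baseline: str = "FP32") -> float:
    """Approximate multiplier area of `fmt` relative to `baseline` (hypothetical helper)."""
    return (SIGNIFICAND_BITS[fmt] / SIGNIFICAND_BITS[baseline]) ** 2

for fmt in ("FP64", "FP32", "BF16"):
    print(f"{fmt}: ~{relative_multiplier_area(fmt):.2f}x an FP32 multiplier")
```

Under this model, one FP64 multiplier costs roughly the silicon of about five FP32 ones, or dozens of BF16 ones. Exponent logic, register files and data movement are ignored.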

-Me, looking at us relearning the same thing again

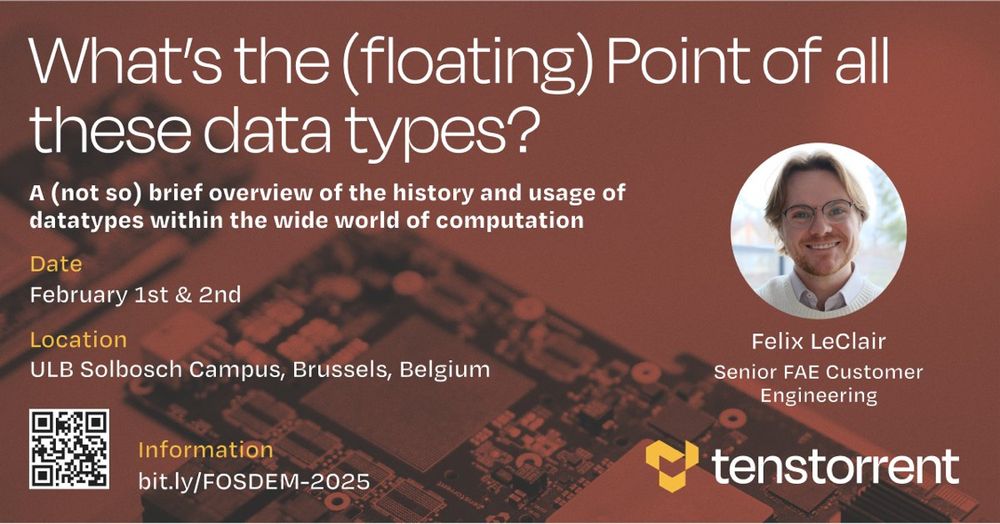

#fosdem

One implies the minimum vector length is 256 bits, the other implies it’s 128.

The spec lawyer in me says you go by CPUID.

CC @jeffgeerling.com

No, you need to optimize for the workloads you will see, and the ones you expect to see emerge.

CNNs have massively different inference requirements from LLMs, which in turn differ from diffusion models.

#HPC