1️⃣ The alignment problem -- foundations & present-day challenges

2️⃣ Frontier alignment methods & evaluation (RLHF, Constitutional AI, etc.)

3️⃣ Interpretability & monitoring incl. hands-on mech interp labs

4️⃣ Sociotechnical aspects of alignment, governance, risks, Economics of AI and policy

I’m thrilled to launch a new course called AI Safety & Alignment (AISAA), on the foundations & frontier research of making advanced AI systems safe and aligned, at @UniofOxford @aims_oxford!

what to expect 👇

robots.ox.ac.uk/~fazl/aisaa/

Today’s AI doesn’t just assist decisions; it makes them. Governments use it for surveillance, prediction, and control — often with no oversight.

Technical safeguards aren’t enough on their own — but they’re essential for AI to serve society.

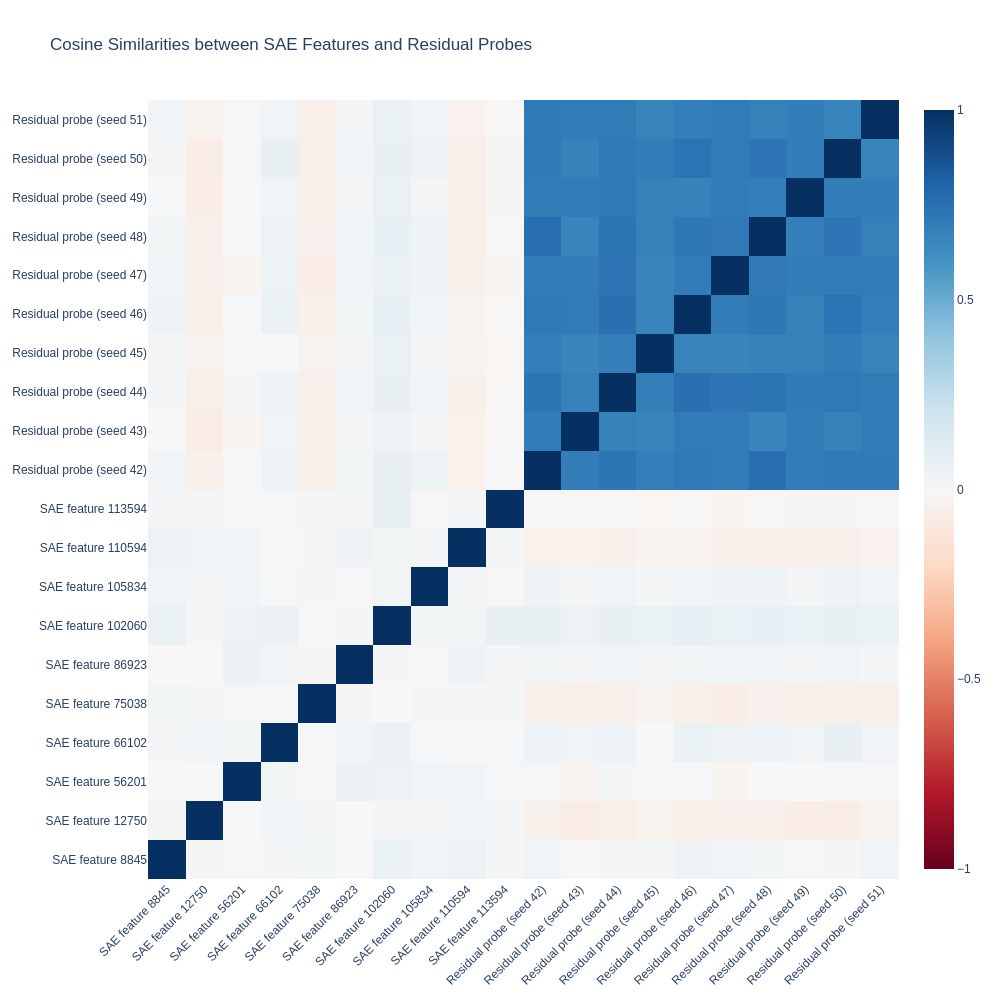

Cosine similarities reveal that linear probes learn highly similar features, while high-performing SAE features are often dissimilar. While individual SAE features are also dissimilar to the probes, combinations of SAE features are increasingly similar.

6/
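
A minimal sketch of the kind of cosine-similarity comparison described in this post. The direction vectors here are toy values, and the names `probe_dirs` and `feature_dirs` are hypothetical stand-ins for learned probe weights and SAE feature directions; none of this is the paper's data or code.

```python
import numpy as np

def cosine_similarity_matrix(a, b, eps=1e-12):
    """Pairwise cosine similarity between the rows of a and the rows of b."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    # Normalise each row to unit length; eps guards against zero vectors.
    a_unit = a / np.maximum(np.linalg.norm(a, axis=1, keepdims=True), eps)
    b_unit = b / np.maximum(np.linalg.norm(b, axis=1, keepdims=True), eps)
    return a_unit @ b_unit.T

# Toy directions (assumptions): two nearly parallel "probe" directions and
# three "SAE feature" directions in a 3-dimensional activation space.
probe_dirs = np.array([[1.0, 0.0, 0.0],
                       [2.0, 0.1, 0.0]])
feature_dirs = np.array([[0.0, 1.0, 0.0],
                         [0.0, 0.0, 3.0],
                         [1.0, 1.0, 0.0]])

probe_vs_probe = cosine_similarity_matrix(probe_dirs, probe_dirs)
probe_vs_feature = cosine_similarity_matrix(probe_dirs, feature_dirs)

print(np.round(probe_vs_probe, 3))
print(np.round(probe_vs_feature, 3))
```

With real models, the rows would be probe weight vectors trained on different datasets and the SAE directions for the selected features, all taken from the same layer so that they live in the same space.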

We analyzed combinations of SAE features. While increasing the number of features improves in-domain performance (SQuAD), it doesn’t consistently improve generalization performance compared to the best single SAE features.

5/

Performance varies across domains:

• We find SAE features with strong generalization to the Equation, Celebrity, or IDK datasets, but no good generalization to BoolQ

• The probe trained on BoolQ fails to generalize, although decent SAE features exist

4/

We compare two probing methods at different layers (notably Layer 31):

• Linear probes trained on the residual stream, hitting ~90% accuracy in-domain

• Individual SAE features, reaching ~80% accuracy

3/
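
A rough sketch of the two probing methods compared in this post, run on synthetic data. Everything here is an assumption for illustration: the activations are random vectors with a planted label direction, the "SAE" is a random ReLU encoder rather than a trained autoencoder, and the ridge-regression probe and median-threshold feature classifier are simple stand-ins rather than the paper's setup.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic stand-ins (assumptions): 64-dim "residual stream" activations
# with a binary answerable/unanswerable label planted along one direction.
n, d, n_feats = 2000, 64, 256
labels = rng.integers(0, 2, size=n)
direction = rng.normal(size=d)
direction /= np.linalg.norm(direction)
acts = rng.normal(size=(n, d)) + 1.5 * np.outer(2.0 * labels - 1.0, direction)

# Hypothetical "SAE" encoder: random ReLU features, not a trained autoencoder.
W_enc = rng.normal(size=(d, n_feats)) / np.sqrt(d)
feats = np.maximum(acts @ W_enc, 0.0)

train, test = slice(0, 1500), slice(1500, n)

def fit_linear_probe(x, y, l2=1e-2):
    """Ridge-regression probe on +/-1 targets; the bias is the last weight."""
    xb = np.hstack([x, np.ones((len(x), 1))])
    targets = 2.0 * y - 1.0
    return np.linalg.solve(xb.T @ xb + l2 * np.eye(xb.shape[1]), xb.T @ targets)

def probe_accuracy(w, x, y):
    """Accuracy of the sign of the probe output against 0/1 labels."""
    xb = np.hstack([x, np.ones((len(x), 1))])
    return float(np.mean((xb @ w > 0).astype(int) == y))

def best_single_feature(f, y):
    """Pick the feature and sign with the highest training accuracy,
    thresholding each feature at its training median."""
    best = (0.0, 0, 0.0, 1)  # (accuracy, feature index, threshold, sign)
    for j in range(f.shape[1]):
        thr = np.median(f[:, j])
        for sign in (1, -1):
            pred = (sign * (f[:, j] - thr) > 0).astype(int)
            acc = float(np.mean(pred == y))
            if acc > best[0]:
                best = (acc, j, thr, sign)
    return best

w = fit_linear_probe(acts[train], labels[train])
probe_acc = probe_accuracy(w, acts[test], labels[test])

_, j, thr, sign = best_single_feature(feats[train], labels[train])
feat_pred = (sign * (feats[test][:, j] - thr) > 0).astype(int)
feature_acc = float(np.mean(feat_pred == labels[test]))

print(f"linear probe accuracy:        {probe_acc:.3f}")
print(f"best single-feature accuracy: {feature_acc:.3f}")
```

The numbers this prints say nothing about real models. The point is only the shape of the comparison: one classifier reads the full activation vector, the other reads a single feature.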

LLMs sometimes confidently answer questions, even ones they can’t truly answer. We use three existing datasets (SQuAD, IDK, BoolQ) and two newly created synthetic datasets (Equations and Celebrity Names) to quantify this behavior.

2/

Important question: Do SAEs generalise?

We explore answerability detection in LLMs by comparing SAE features vs. linear residual stream probes.

Answer: probes outperform SAE features in-domain, but out-of-domain generalization varies sharply across features and datasets. 🧵

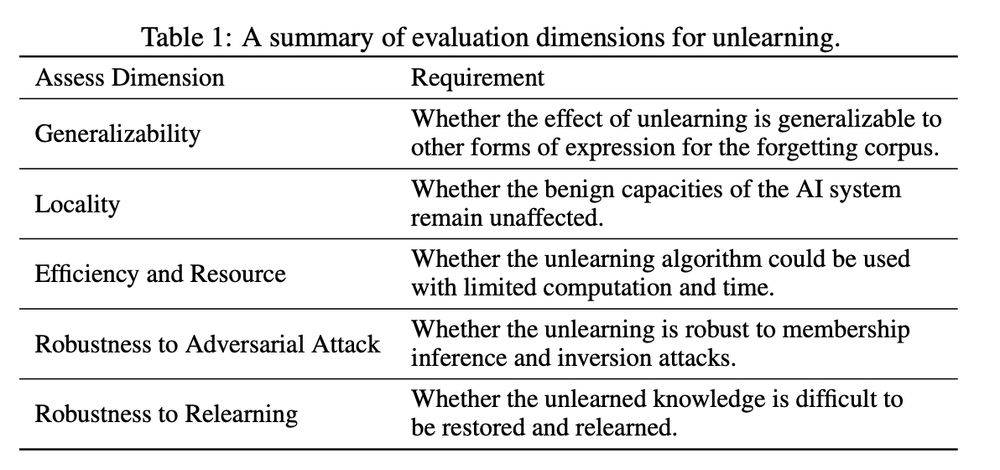

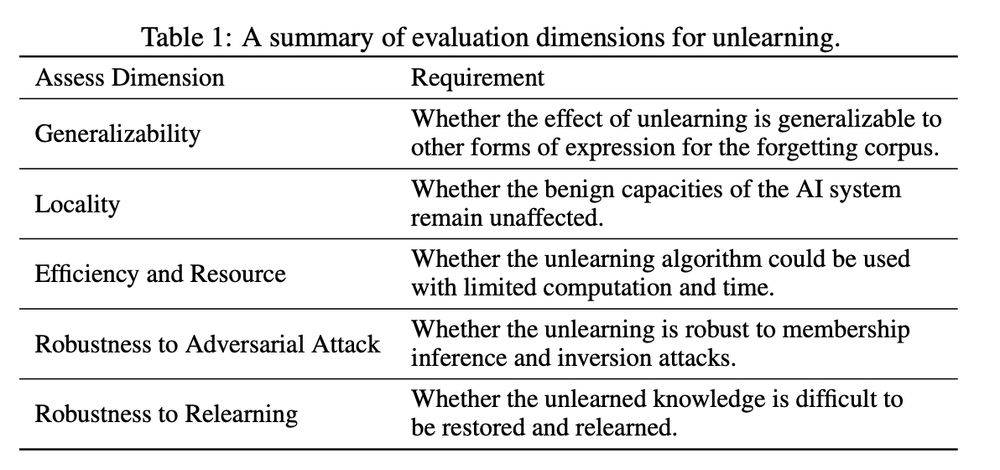

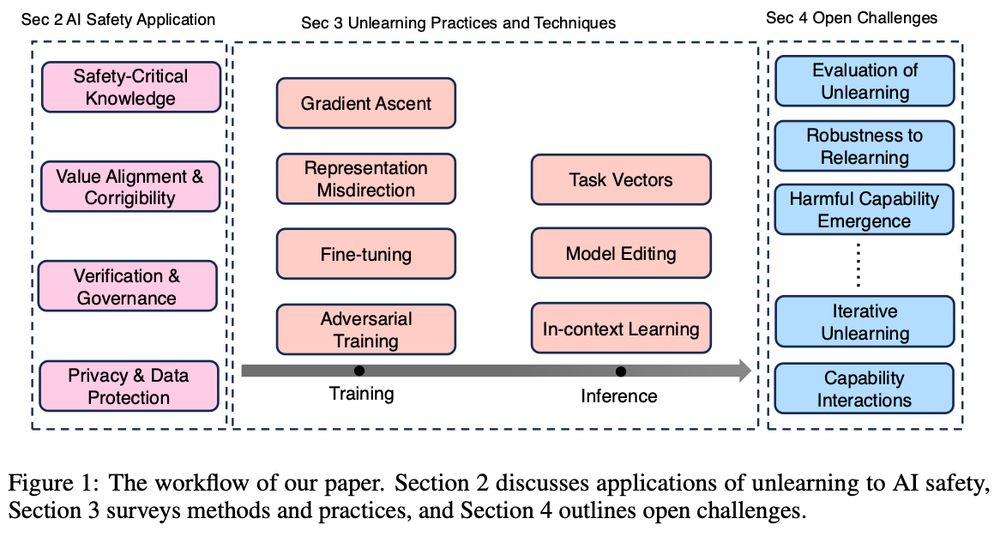

⚠️ Current unlearning tests fall short.

Models can pass tests yet retain harmful, emergent capabilities. These risks often surface in subtle, unexpected ways during extended interactions.

How can we evaluate major concerns, e.g. dual-use capabilities that can emerge from beneficial data?

4/8

Managing safety-critical knowledge

Mitigating jailbreaks

Correcting value alignment

Ensuring privacy/legal compliance

But: Removing facts is easy; removing dangerous capabilities is HARD. Capabilities emerge from complex knowledge interactions.

3/8

Real world example:

2a/8

🚨 New Paper Alert: Open Problems in Machine Unlearning for AI Safety 🚨

Can AI truly "forget"? While unlearning promises data removal, controlling emergent capabilities is an inherent challenge. Here's why it matters: 👇

Paper: arxiv.org/pdf/2501.04952

1/8

We are launching the AILuminate AI Safety Benchmark @MLCommons @PeterMattson100 @tangenticAI

The first step towards a global standard benchmark for AI PRODUCT safety!
