fanegg.github.io

Just input an RGB video, and we reconstruct 4D humans and the scene online in 𝗢𝗻𝗲 model and 𝗢𝗻𝗲 stage.

Training this versatile model is easier than you think – it just takes 𝗢𝗻𝗲 day using 𝗢𝗻𝗲 GPU!

🔗Page: fanegg.github.io/Human3R/

TTT3R offers a simple state update rule to enhance length generalization for #CUT3R — No fine-tuning required!

🔗Page: rover-xingyu.github.io/TTT3R

We rebuilt @taylorswift13’s "22" live at the 2013 Billboard Music Awards - in 3D!

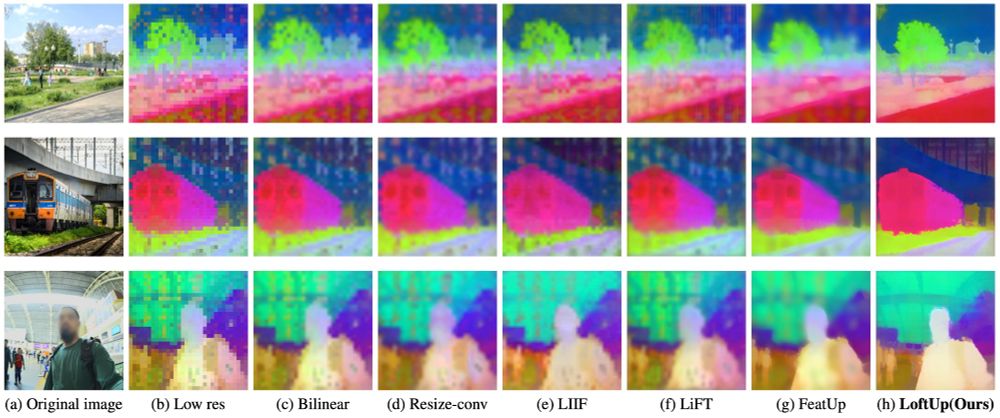

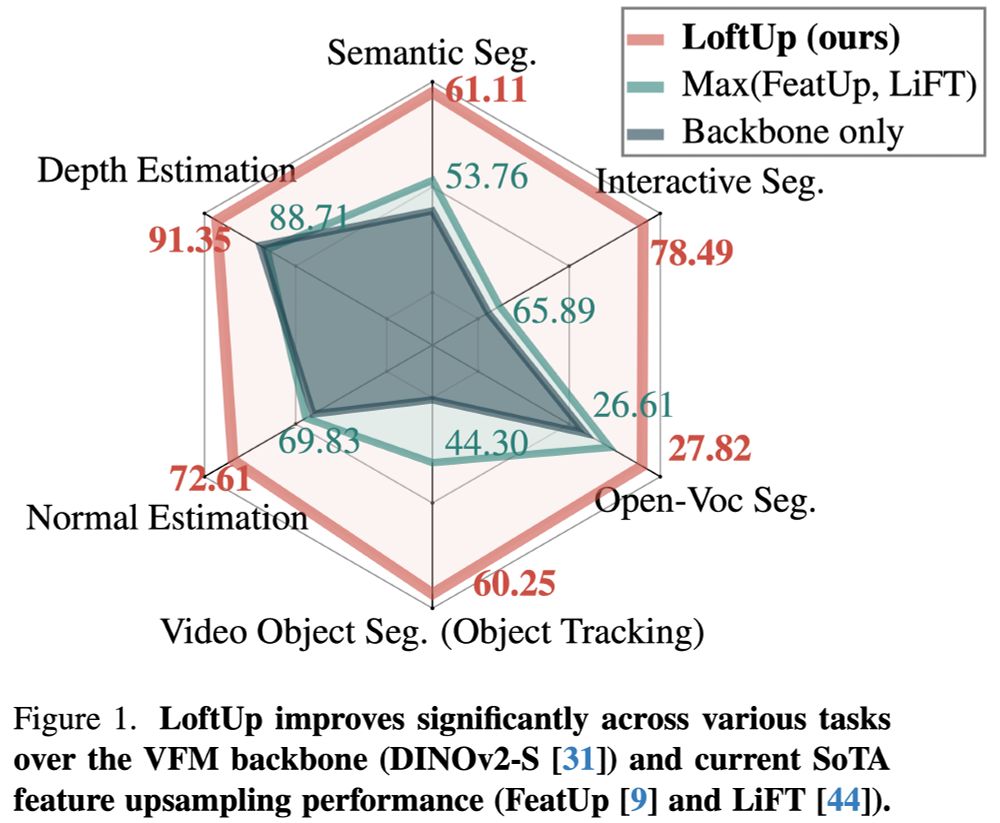

A stronger-than-ever, lightweight feature upsampler for vision encoders that can boost performance on dense prediction tasks by 20%–100%!

Easy to plug into models like DINOv2, CLIP, SigLIP — simple design, big gains. Try it out!

github.com/andrehuang/l...

Limited 4D datasets? Take it easy.

#Easi3R adapts #DUSt3R for 4D reconstruction by disentangling and repurposing its attention maps → making 4D reconstruction easier than ever!

🔗Page: easi3r.github.io

Previous work requires 3D data for probing → expensive to collect!

#Feat2GS @cvprconference.bsky.social 2025 - our idea is to read out 3D Gaussians from VFM features, and thus probe 3D awareness with novel view synthesis.

🔗Page: fanegg.github.io/Feat2GS
