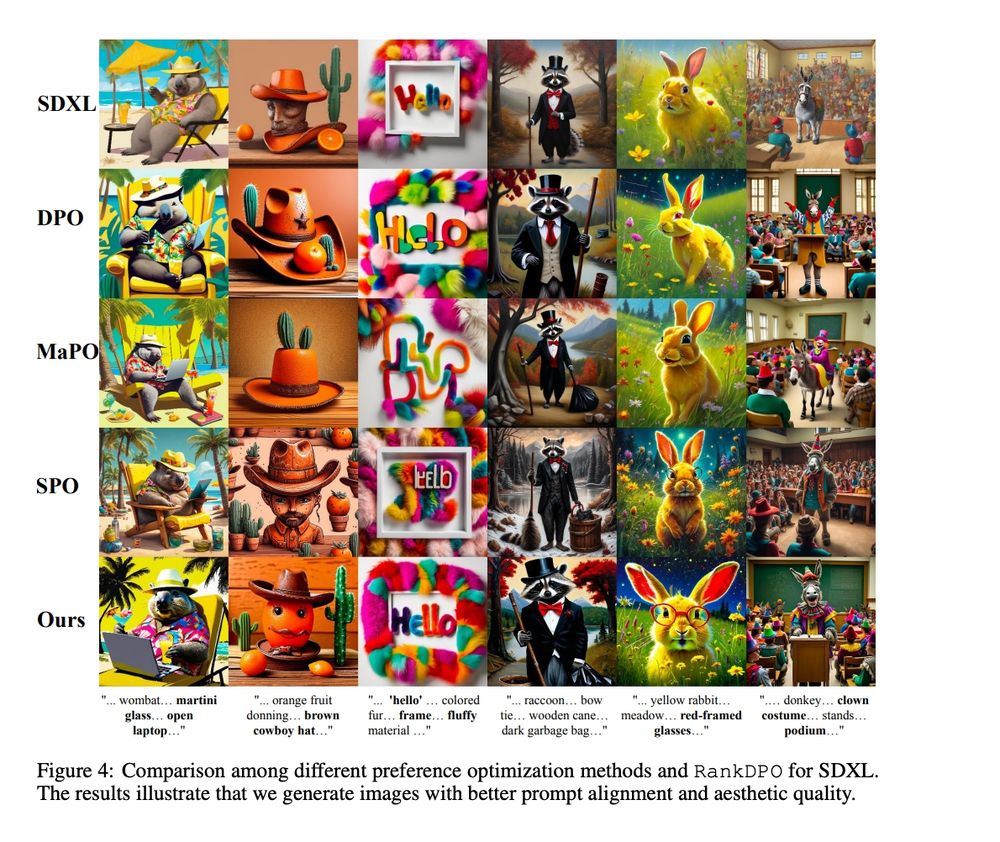

Scalable Ranked Preference Optimization for Text-to-Image Generation

@shyamgopal.bsky.social, Huseyin Coskun, @zeynepakata.bsky.social, Sergey Tulyakov, Jian Ren, Anil Kag

[Paper]: arxiv.org/pdf/2410.18013

📍Hall I #1702

🕑Oct 22, Poster Session 4

Using 33 classes and 45 concepts (e.g., wing color, belly pattern), SUB tests how robust concept bottleneck models (CBMs) are to targeted concept variations.

SUB: Benchmarking CBM Generalization via Synthetic Attribute Substitutions

@jessica-bader.bsky.social, @lgirrbach.bsky.social, Stephan Alaniz, @zeynepakata.bsky.social

[Paper]: arxiv.org/pdf/2507.23784

[Code]: github.com/ExplainableM...

📍Hall I #2142

🕑Oct 23, Poster Session 5

Please visit @lucaeyring.bsky.social's thread for a wonderful illustration of the method👇

x.com/LucaEyring/s...

Noise Hypernetworks: Amortizing Test-Time Compute in Diffusion Models

@lucaeyring.bsky.social, @shyamgopal.bsky.social, Alexey Dosovitskiy, @natanielruiz.bsky.social, @zeynepakata.bsky.social

[Paper]: arxiv.org/abs/2508.09968

[Code]: github.com/ExplainableM...

Concept-Guided Interpretability via Neural Chunking

Shuchen Wu, Stephan Alaniz, @shyamgopal.bsky.social, Peter Dayan, @ericschulz.bsky.social, @zeynepakata.bsky.social

[Paper]: arxiv.org/pdf/2505.11576

[Code]: github.com/swu32/Chunk-...

Sparse Autoencoders Learn Monosemantic Features in Vision-Language Models

Mateusz Pach, @shyamgopal.bsky.social, @qbouniot.bsky.social, Serge Belongie, @zeynepakata.bsky.social

[Paper]: arxiv.org/pdf/2504.02821

[Code]: github.com/ExplainableM...

Disentanglement of Correlated Factors via Hausdorff Factorized Support (ICLR 2023)

@confusezius.bsky.social, Mark Ibrahim, @zeynepakata.bsky.social, Pascal Vincent, Diane Bouchacourt

[Paper]: arxiv.org/abs/2210.07347

[Code]: github.com/facebookrese...