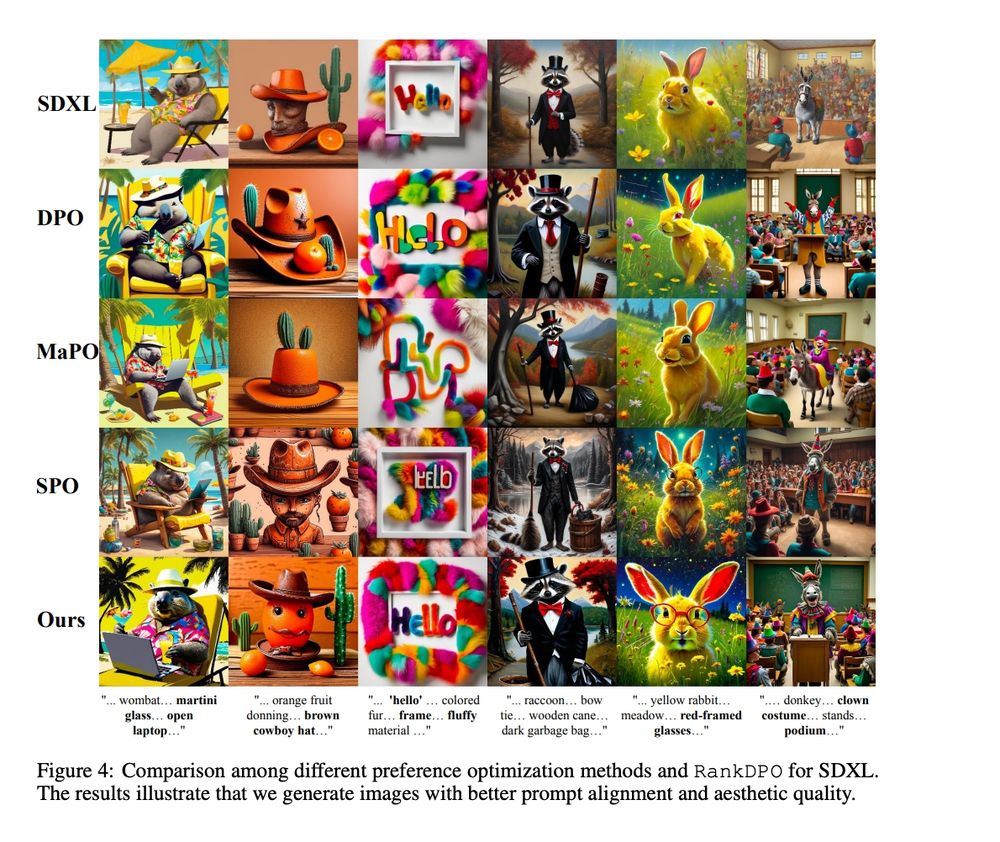

Scalable Ranked Preference Optimization for Text-to-Image Generation

@shyamgopal.bsky.social, Huseyin Coskun, @zeynepakata.bsky.social, Sergey Tulyakov, Jian Ren, Anil Kag

[Paper]: arxiv.org/pdf/2410.18013

📍Hall I #1702

🕑Oct 22, Poster Session 4

Using 33 classes & 45 concepts (e.g., wing color, belly pattern), SUB tests how robust CBMs are to targeted concept variations.

SUB: Benchmarking CBM Generalization via Synthetic Attribute Substitutions

@jessica-bader.bsky.social, @lgirrbach.bsky.social, Stephan Alaniz, @zeynepakata.bsky.social

[Paper]: arxiv.org/pdf/2507.23784

[Code]: github.com/ExplainableM...

📍Hall I #2142

🕑Oct 23, Poster Session 5

EML Munich is presenting two poster papers—come say hi to our authors!

Details in the thread 👇

Noise Hypernetworks: Amortizing Test-Time Compute in Diffusion Models

@lucaeyring.bsky.social, @shyamgopal.bsky.social, Alexey Dosovitskiy, @natanielruiz.bsky.social, @zeynepakata.bsky.social

[Paper]: arxiv.org/abs/2508.09968

[Code]: github.com/ExplainableM...

Sparse Autoencoders Learn Monosemantic Features in Vision-Language Models

Mateusz Pach, @shyamgopal.bsky.social, @qbouniot.bsky.social, Serge Belongie, @zeynepakata.bsky.social

[Paper]: arxiv.org/pdf/2504.02821

[Code]: github.com/ExplainableM...

Celebrate @confusezius.bsky.social, who defended his PhD summa cum laude on June 24th!

🏁 His next stop: Google DeepMind in Zurich!

Join us in celebrating Karsten's achievements and wishing him the best for his future endeavors! 🥳

Celebrate @shyamgopal.bsky.social, who will defend his PhD on 23rd June! Shyam has been a PhD student @unituebingen.bsky.social since October 2021, supervised by @zeynepakata.bsky.social.

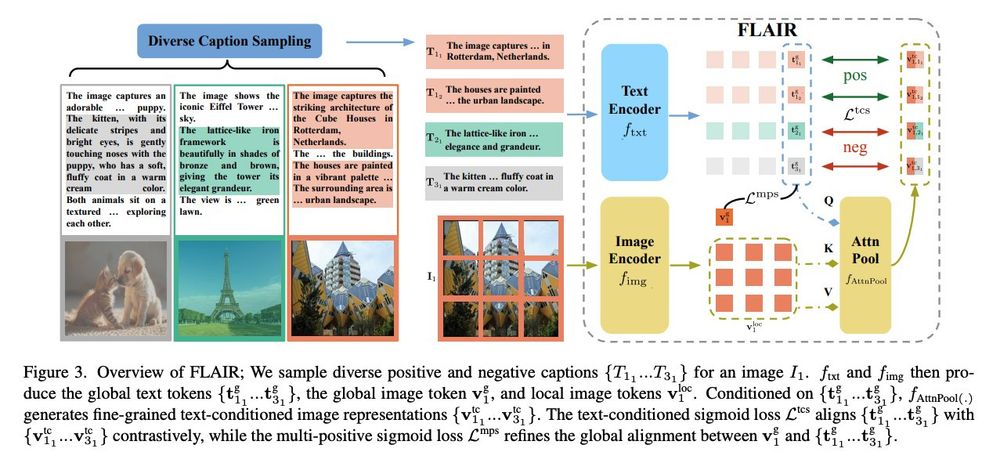

@rui-xiao.bsky.social will present his work on pretraining a CLIP-like model that generates fine-grained image representations.

📍 ExHall D Poster #368

⏲️ Sun 15 Jun 10:30 a.m. CDT — 12:30 p.m. CDT

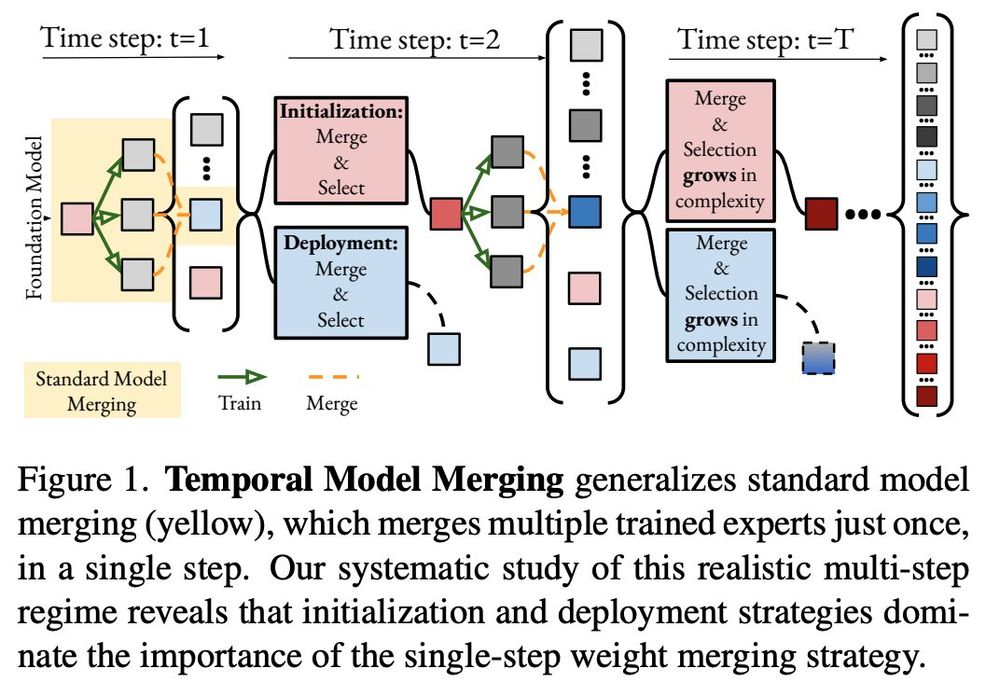

@confusezius.bsky.social will also present this amazing work that introduces a unified framework for temporal model merging.

📍 ExHall D Poster #445

⏲️ Sat 14 Jun 5 p.m. CDT — 7 p.m. CDT

Sanghwan Kim will present COSMOS, integrating a novel text-cropping strategy and cross-attention module into a self-supervised learning framework.

📍 ExHall D Poster #387

⏲️ Sat 14 Jun 10:30 a.m. CDT — 12:30 p.m. CDT

@confusezius.bsky.social will share his amazing work on how to turn a vision-language model from a great zero-shot model into one capable of strong few-shot adaptation.

📍 ExHall D Poster #391

⏲️ Fri 13 Jun 10:30 a.m. CDT — 12:30 p.m. CDT

Celebrate @l-salewski.bsky.social, who will defend his PhD on 24th June! 📸🎉
