PhD student in computational linguistics at UPF

chengemily1.github.io

Previously: MIT CSAIL, ENS Paris

Barcelona

5/6

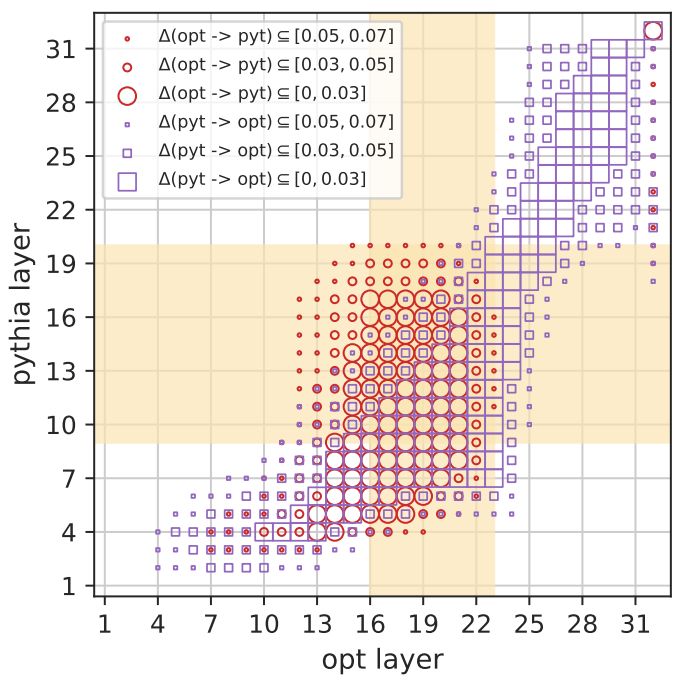

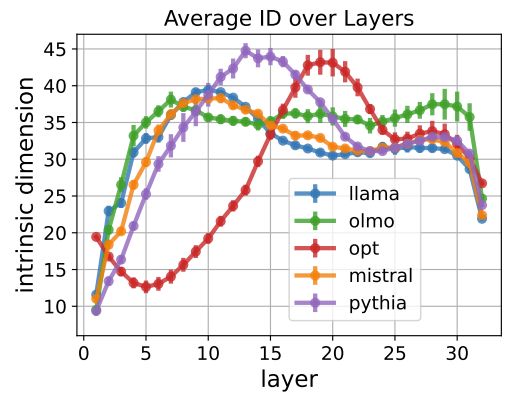

All LLMs share this high-dimensional phase of linguistic abstraction, but...

4/6

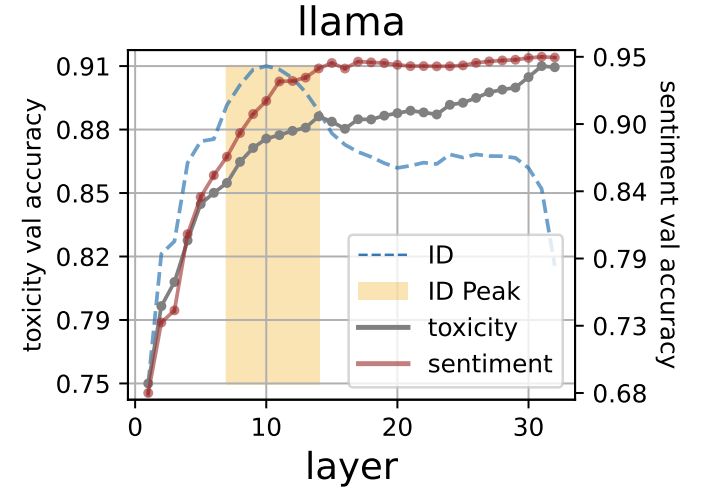

⭐ use these layers for downstream transfer!

(e.g., for brain encoding models, see arxiv.org/abs/2409.05771)

3/6

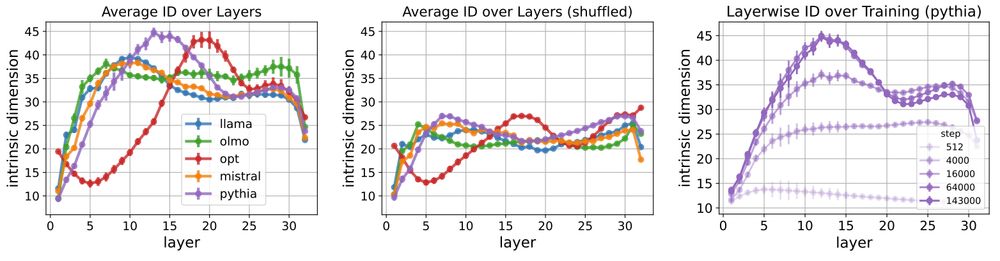

- it collapses on shuffled text (destroying syntactic/semantic structure)

- it grows over the course of training...

2/6

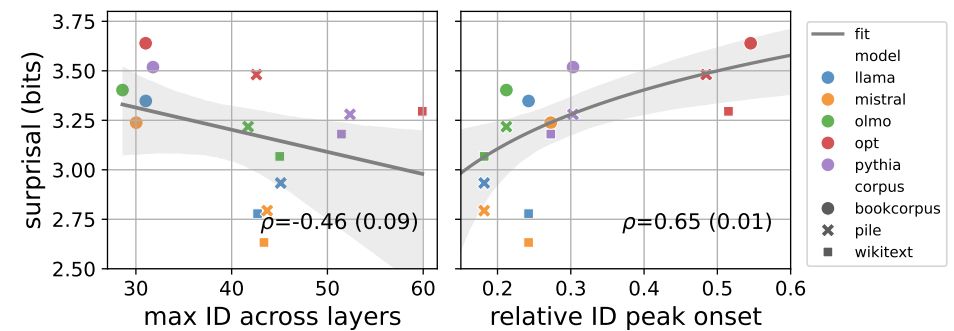

We look at how intrinsic dimension evolves over LLM layers, spotting a universal high-dimensional phase.

This ID peak is where:

- linguistic features are built

- different LLMs are most similar,

with implications for task transfer

🧵 1/6
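For readers who want to try a per-layer intrinsic dimension (ID) measurement themselves, here is a minimal sketch. The thread does not name the estimator used, so this swaps in the maximum-likelihood form of TwoNN as a stand-in. The function name `twonn_id` and the synthetic data are illustrative assumptions, not the authors' code.

```python
import numpy as np


def twonn_id(x):
    """Estimate intrinsic dimension with the MLE form of TwoNN.

    x: array of shape (n_points, n_features), e.g. one layer's
    hidden states for n_points inputs (hypothetical usage).
    """
    x = np.asarray(x, dtype=np.float64)
    # Pairwise squared Euclidean distances via the Gram matrix.
    sq_norms = np.sum(x ** 2, axis=1)
    d2 = sq_norms[:, None] + sq_norms[None, :] - 2.0 * (x @ x.T)
    np.maximum(d2, 0.0, out=d2)    # clip tiny negatives from round-off
    np.fill_diagonal(d2, np.inf)   # exclude each point's distance to itself
    # Two smallest squared distances per row, in ascending order.
    two_smallest = np.partition(d2, 1, axis=1)[:, :2]
    r1 = np.sqrt(two_smallest[:, 0])
    r2 = np.sqrt(two_smallest[:, 1])
    keep = r1 > 0                  # drop exact duplicate points
    mu = r2[keep] / r1[keep]
    # Under the TwoNN model mu is Pareto-distributed with shape d,
    # so the maximum-likelihood estimate is N / sum(log(mu)).
    return keep.sum() / np.sum(np.log(mu))


# Synthetic check (assumed data, not from the thread):
# a 5-dimensional Gaussian cloud linearly embedded in 50 dimensions.
rng = np.random.default_rng(0)
latent = rng.standard_normal((1500, 5))
embedding = rng.standard_normal((5, 50))
points = latent @ embedding
estimate = twonn_id(points)
print(f"estimated ID: {estimate:.2f}")
```

To trace ID over depth, one would call `twonn_id` on the hidden states of each layer in turn and plot the result against layer index. How the representations are collected (which token, how many inputs) is left open here, since the thread does not specify it.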
