PhD student in computational linguistics at UPF

chengemily1.github.io

Previously: MIT CSAIL, ENS Paris

Barcelona

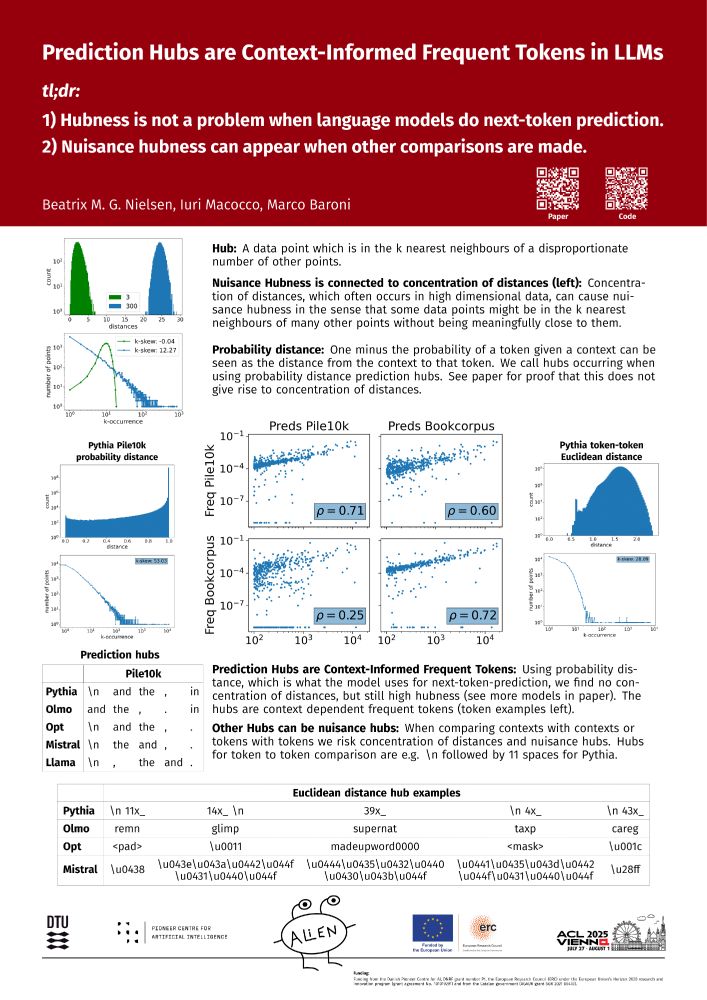

I’ll be presenting the paper I did with Marco and Iuri "Prediction Hubs are Context-Informed Frequent tokens in LLMs" at the ELLIS UnConference on December 2nd in Copenhagen. arxiv.org/abs/2502.10201

Previous work offers diverging answers, so we conducted a meta-analysis combining data from 20 studies across 8 languages.

Now out in Language: muse.jhu.edu/article/969615

We explore how transformers handle compositionality by examining the representations of the idiomatic and literal meanings of the same noun phrase (e.g., "silver spoon").

aclanthology.org/2025.jeptaln...

arxiv.org/abs/2502.11856

Main points:

1. Hubness is not a problem when language models do next-token prediction.

2. Nuisance hubness can appear when other comparisons are made.

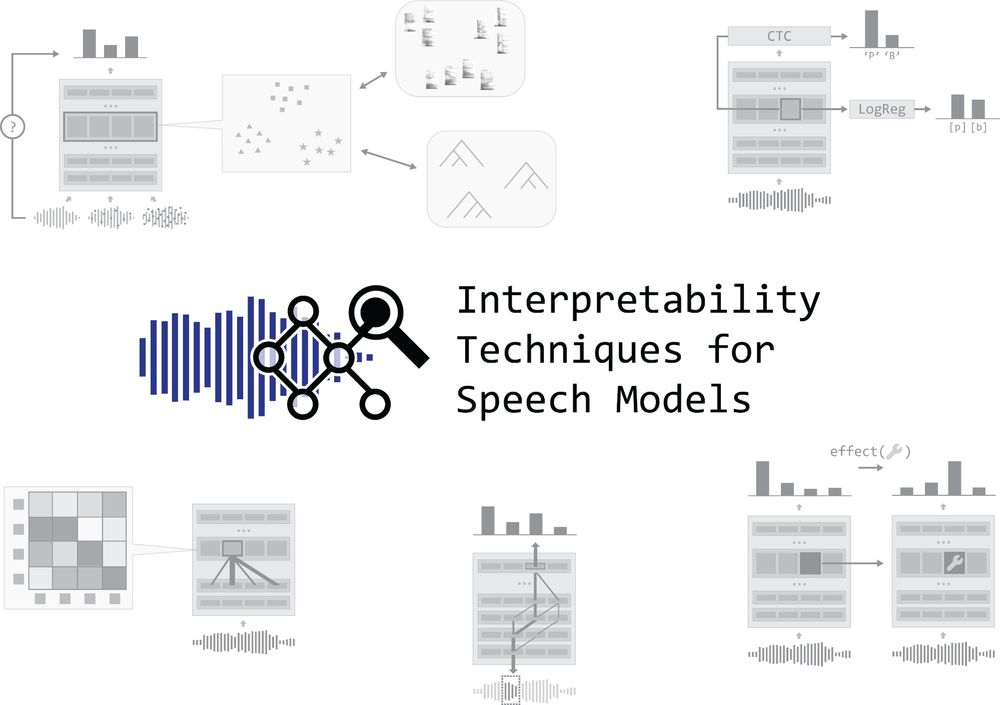

Want to learn how to analyze the inner workings of speech processing models? 🔍 Check out the programme for our tutorial:

interpretingdl.github.io/speech-inter... & sign up through the conference registration form!

Register: tinyurl.com/colt-register

📢 𝗟𝗼𝗰𝗮𝘁𝗶𝗼𝗻 𝗰𝗵𝗮𝗻𝗴𝗲📢

June 2nd, 14:30 - 19:00

UPF Campus de la Ciutadella

Room 40.101

maps.app.goo.gl/1216LJRsWmTE...

Website: www.upf.edu/web/colt/sym...

June 2nd, UPF

𝗦𝗽𝗲𝗮𝗸𝗲𝗿 𝗹𝗶𝗻𝗲𝘂𝗽:

Arianna Bisazza (language acquisition with NNs)

Naomi Saphra (emergence in LLM training dynamics)

Jean-Rémi King (TBD)

Louise McNally (pitfalls of contextual/formal accounts of semantics)

𝗘𝗺𝗲𝗿𝗴𝗲𝗻𝘁 𝗳𝗲𝗮𝘁𝘂𝗿𝗲𝘀 𝗼𝗳 𝗹𝗮𝗻𝗴𝘂𝗮𝗴𝗲 𝗶𝗻 𝗺𝗶𝗻𝗱𝘀 𝗮𝗻𝗱 𝗺𝗮𝗰𝗵𝗶𝗻𝗲𝘀

What properties of language are emerging from work in experimental and theoretical linguistics, neuroscience & LLM interpretability?

Info: tinyurl.com/colt-site

Register: tinyurl.com/colt-register

🧵1/3

The paper: arxiv.org/abs/2503.14615

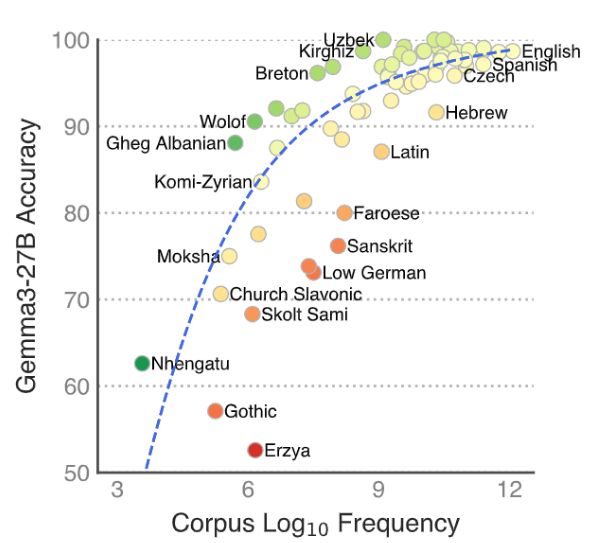

I could not be more excited for this to be out!

With a fully automated pipeline based on Universal Dependencies, 43 non-Indo-European languages, and the best LLMs scoring only 90.2%, I hope this will be a challenging and interesting benchmark for multilingual NLP.

Go test your language models!

Introducing 🌍MultiBLiMP 1.0: A Massively Multilingual Benchmark of Minimal Pairs for Subject-Verb Agreement, covering 101 languages!

We present over 125,000 minimal pairs and evaluate 17 LLMs, finding that support is still lacking for many languages.

🧵⬇️

TLDR below 👇

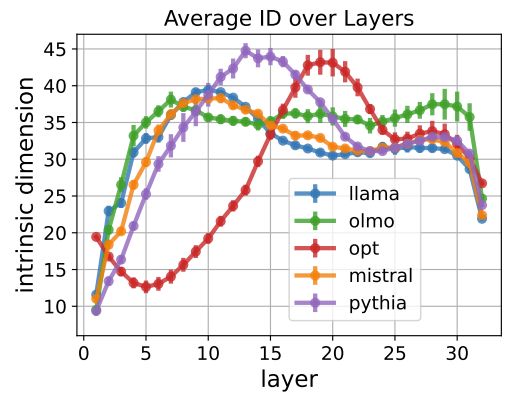

We look at how intrinsic dimension (ID) evolves over LLM layers, spotting a universal high-dimensional phase.

This ID peak is where:

- linguistic features are built

- different LLMs are most similar,

with implications for task transfer

🧵 1/6

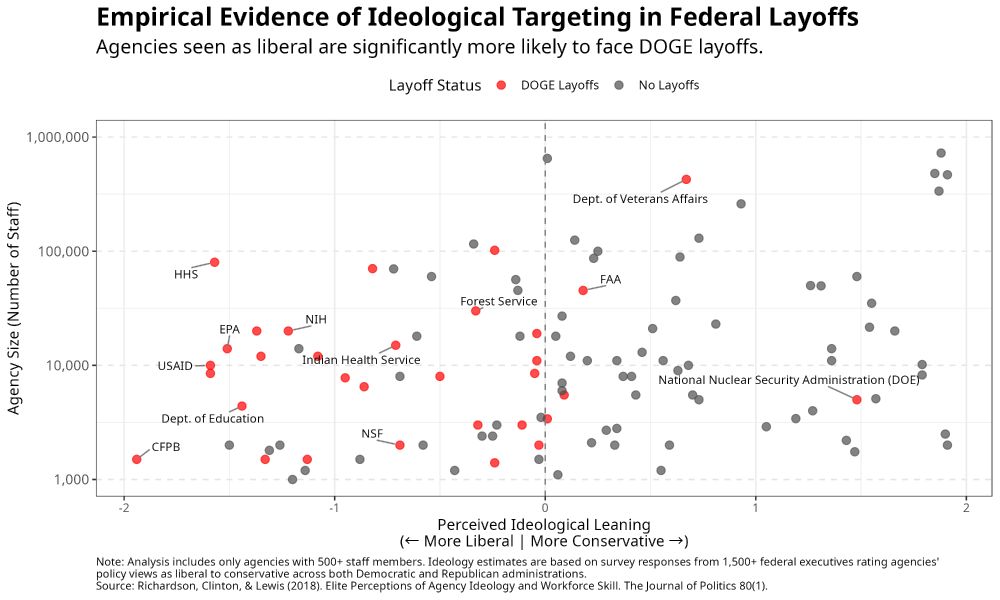

“Pausing” grants means people don’t eat.

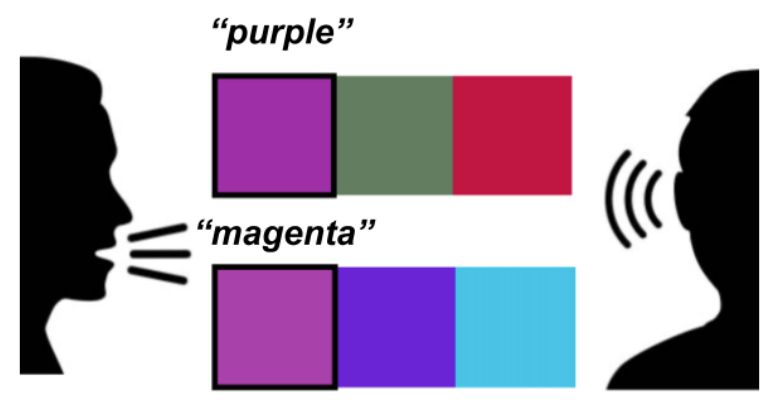

Why do objects have many names?

Human lexicons contain different words that speakers can use to refer to the same object, e.g., purple or magenta for the same color.

We investigate using tools from efficient coding...🧵

1/3

Beatriu de Pinós contract, 3 yrs, competitive call by the Catalan government.

Apply with a PI (Marco, Gemma, or Thomas)

Reqs: min 2y postdoc experience outside Spain, not having lived in Spain for >12 months in the last 3y.

Application ~December-February (exact dates TBD)

We do psycholinguistics, cogsci, language evolution & NLP, with diverse backgrounds in philosophy, formal linguistics, CS & physics

Get in touch for postdoc, PhD & MS openings!

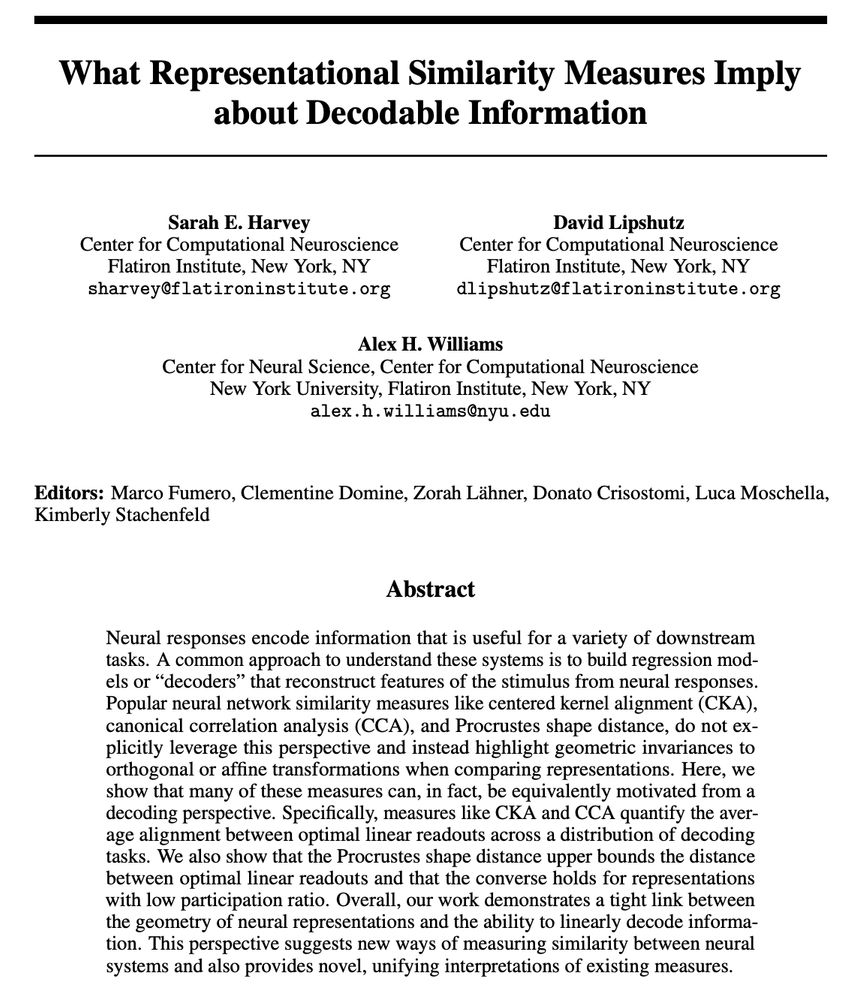

We are often asked: What do these things tell us about the system's function? How do they relate to decoding?

Our new paper has some answers: arxiv.org/abs/2411.08197
