Projects: dylancastillo.co/projects

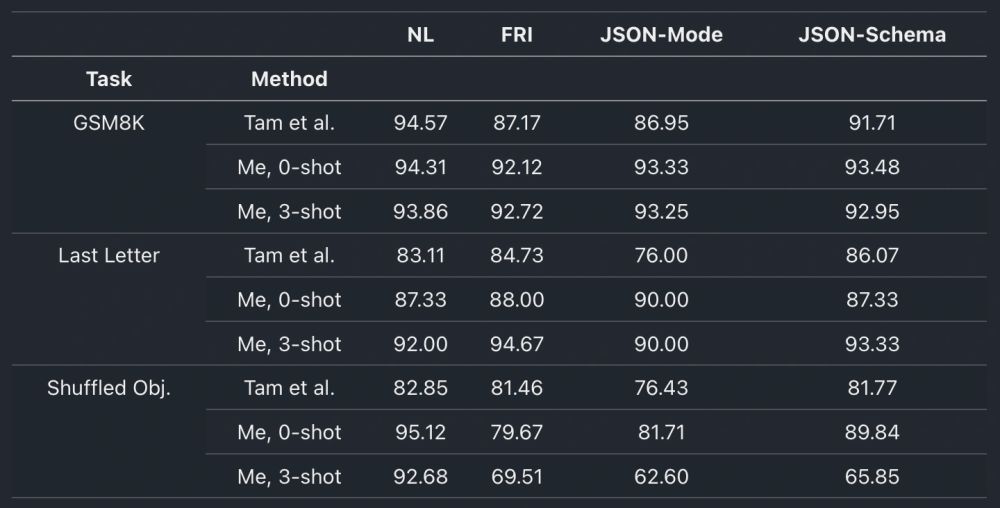

For now, there are no clear guidelines on where each method works better.

Your best bet is to test your LLM by running your own evals.
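
Here is a minimal sketch of what "running your own evals" can look like. It assumes each output method (NL, JSON-Prompt, JSON-Schema, ...) is wrapped in a function that returns a final answer string. The names `accuracy` and `compare` and the stub models are hypothetical placeholders for real LLM calls, and exact string matching is a simplifying assumption.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical: a "method" takes a question and returns the model's final answer.
# In practice each one would wrap a real LLM call (NL, JSON-Prompt, JSON-Schema, ...).
Method = Callable[[str], str]
Dataset = List[Tuple[str, str]]  # (question, expected answer) pairs


def accuracy(method: Method, dataset: Dataset) -> float:
    """Fraction of questions where the method's answer exactly matches the reference."""
    if not dataset:
        raise ValueError("dataset is empty")
    correct = sum(
        1 for question, expected in dataset
        if method(question).strip() == expected.strip()
    )
    return correct / len(dataset)


def compare(methods: Dict[str, Method], dataset: Dataset) -> Dict[str, float]:
    """Run every method on the same dataset and report accuracy per method."""
    return {name: accuracy(fn, dataset) for name, fn in methods.items()}


if __name__ == "__main__":
    # Toy data and stub "models" standing in for real LLM calls.
    dataset = [("2 + 2", "4"), ("3 * 3", "9"), ("10 - 7", "3")]
    reference = dict(dataset)
    methods = {
        "NL": lambda q: reference[q],   # stub that is always right
        "JSON-Schema": lambda q: "4",   # stub that is right only on the first question
    }
    for name, acc in compare(methods, dataset).items():
        print(f"{name}: {acc:.2%}")
```

Swap the stubs for real model calls and a real benchmark split. What matters is scoring every method on the same questions.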

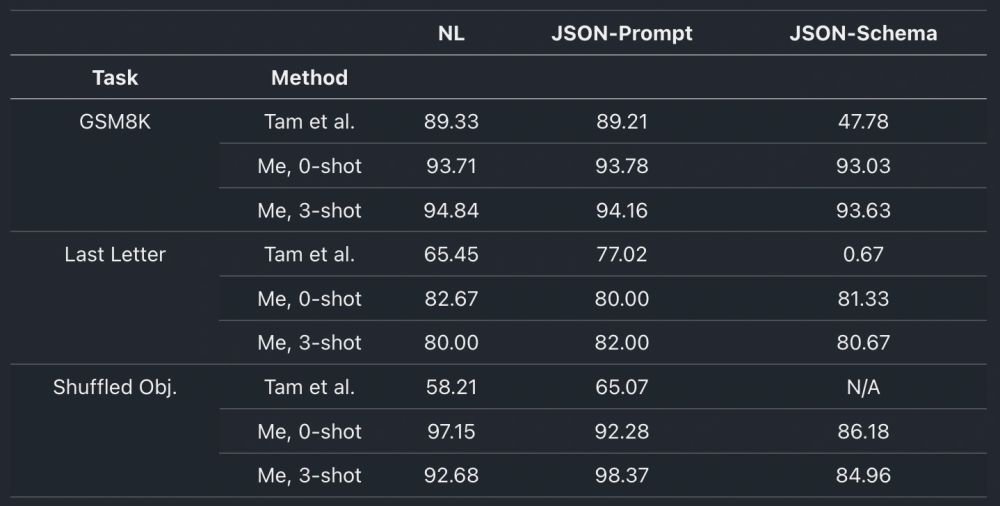

Given this result and the key sorting issue, I'd suggest avoiding JSON-Schema unless you really need it. JSON-Prompt seems like a better alternative.

But JSON-Schema performed worse than NL in 5 out of 6 tasks in my tests. Plus, in Shuffled Objects, it did so by a huge margin: 97.15% for NL vs. 86.18% for JSON-Schema, a gap of almost 11 percentage points.

But it doesn't work in the Generative AI SDK. Other users have already reported this issue.

For the benchmarks, I excluded FC and used already sorted keys for JSON-Schema.

You can fix SO-Schema by being smart with keys. Instead of "reasoning" and "answer", use something like "reasoning" and "solution", so that sorting the keys alphabetically still puts "reasoning" first.
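
A quick way to check a candidate pair of key names is plain Python, assuming that the key sorting issue described in this thread means the keys end up in alphabetical order. The helpers below are hypothetical and make no Gemini SDK calls. They only show which field would come first.

```python
# Assumption (from the thread's "key sorting issue"): SO-Schema sorts keys
# alphabetically, so the declared order is not the order the fields are generated in.

def generation_order(keys):
    """Order in which the fields would be generated if keys are sorted alphabetically."""
    return sorted(keys)


def reasoning_comes_first(keys, reasoning_key="reasoning"):
    """True if the reasoning field would be produced before every other field."""
    return generation_order(keys)[0] == reasoning_key


original = ["reasoning", "answer"]
renamed = ["reasoning", "solution"]

print(generation_order(original))       # ['answer', 'reasoning']
print(reasoning_comes_first(original))  # False: the answer comes before the reasoning
print(generation_order(renamed))        # ['reasoning', 'solution']
print(reasoning_comes_first(renamed))   # True
```

The same check works for any schema: if `reasoning_comes_first` is False, rename the other keys until the alphabetical order matches the order you want the model to think in.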

1. Forced function calling (FC): ai.google.dev/gemini-api/...

2. Schema in prompt (SO-Prompt): ai.google.dev/gemini-api/...

3. Schema in model config (SO-Schema): ai.google.dev/gemini-api/...

SO-Prompt works well. But FC and SO-Schema have a major flaw.
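
The thread doesn't include code for these methods. The sketch below is a rough, plain-Python illustration of the SO-Prompt idea, where the schema lives in the prompt text instead of the model config as in SO-Schema. The schema, the prompt wording, and the helper names `build_so_prompt` and `parse_reply` are assumptions, and the model reply is faked.

```python
import json

# Hypothetical schema: a "reasoning" field followed by a "solution" field.
SCHEMA = {
    "type": "object",
    "properties": {
        "reasoning": {"type": "string"},
        "solution": {"type": "string"},
    },
    "required": ["reasoning", "solution"],
}


def build_so_prompt(question: str, schema: dict = SCHEMA) -> str:
    """SO-Prompt style: the schema is part of the prompt text, not the model config."""
    return (
        f"{question}\n\n"
        "Respond only with a JSON object that matches this JSON Schema:\n"
        f"{json.dumps(schema, indent=2)}"
    )


def parse_reply(reply: str, schema: dict = SCHEMA) -> dict:
    """Parse the model's reply and check that every required key is present."""
    data = json.loads(reply)
    missing = [key for key in schema["required"] if key not in data]
    if missing:
        raise ValueError(f"missing keys: {missing}")
    return data


# Send `prompt` to the model of your choice; here the reply is a hard-coded stand-in.
prompt = build_so_prompt("What is 17 * 3?")
fake_reply = '{"reasoning": "17 * 3 = 51", "solution": "51"}'
print(parse_reply(fake_reply)["solution"])  # 51
```

Because nothing forces the model to follow the schema, the validation step in `parse_reply` carries more weight here than it would with SO-Schema.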

Once or twice per month I write a technical article about AI here: subscribe.dylancastillo.co/

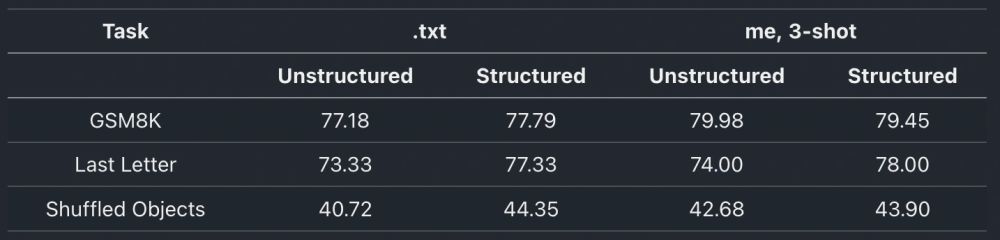

But it’s clear to me that neither structured nor unstructured outputs are always better, and choosing one or the other can often make a difference.

Test things yourself. Run your own evals and decide.

Tweaked the prompts and improved all LMSF metrics except for NL in GSM8K.

GSM8K and Last Letter looked as expected (no difference).

But in Shuffled Objects, unstructured outputs clearly surpassed structured ones.
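
Comparing structured and unstructured outputs means pulling the final answer out of each kind of response. Here is a minimal sketch under two assumptions that are not taken from the thread: NL responses end with a line like "Answer: ...", and JSON responses carry an "answer" key. The helpers `extract_nl` and `extract_json` are hypothetical.

```python
import json
import re
from typing import Optional

# Assumption: NL prompts ask the model to finish with a line like "Answer: <value>".
NL_ANSWER = re.compile(r"answer\s*:\s*(.+)", re.IGNORECASE)


def extract_nl(text: str) -> Optional[str]:
    """Take the value from the last 'Answer: ...' line of a free-text response."""
    matches = NL_ANSWER.findall(text)
    return matches[-1].strip() if matches else None


def extract_json(text: str, key: str = "answer") -> Optional[str]:
    """Read the answer field from a JSON response; None if it isn't a JSON object."""
    try:
        value = json.loads(text).get(key)
    except (json.JSONDecodeError, AttributeError):
        return None
    return None if value is None else str(value).strip()


if __name__ == "__main__":
    nl_reply = "Alice swaps with Bob, then Bob swaps with Claire.\nAnswer: (C)"
    json_reply = '{"reasoning": "Alice swaps with Bob, then Bob swaps with Claire.", "answer": "(C)"}'
    print(extract_nl(nl_reply))      # (C)
    print(extract_json(json_reply))  # (C)
    print(extract_json("not json"))  # None
```

Counting a failed parse (`None`) as a wrong answer, rather than dropping it, keeps the structured and unstructured scores comparable.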

I was able to reproduce the results and, after tweaking a few minor prompt issues, achieved a slight improvement in most metrics.
