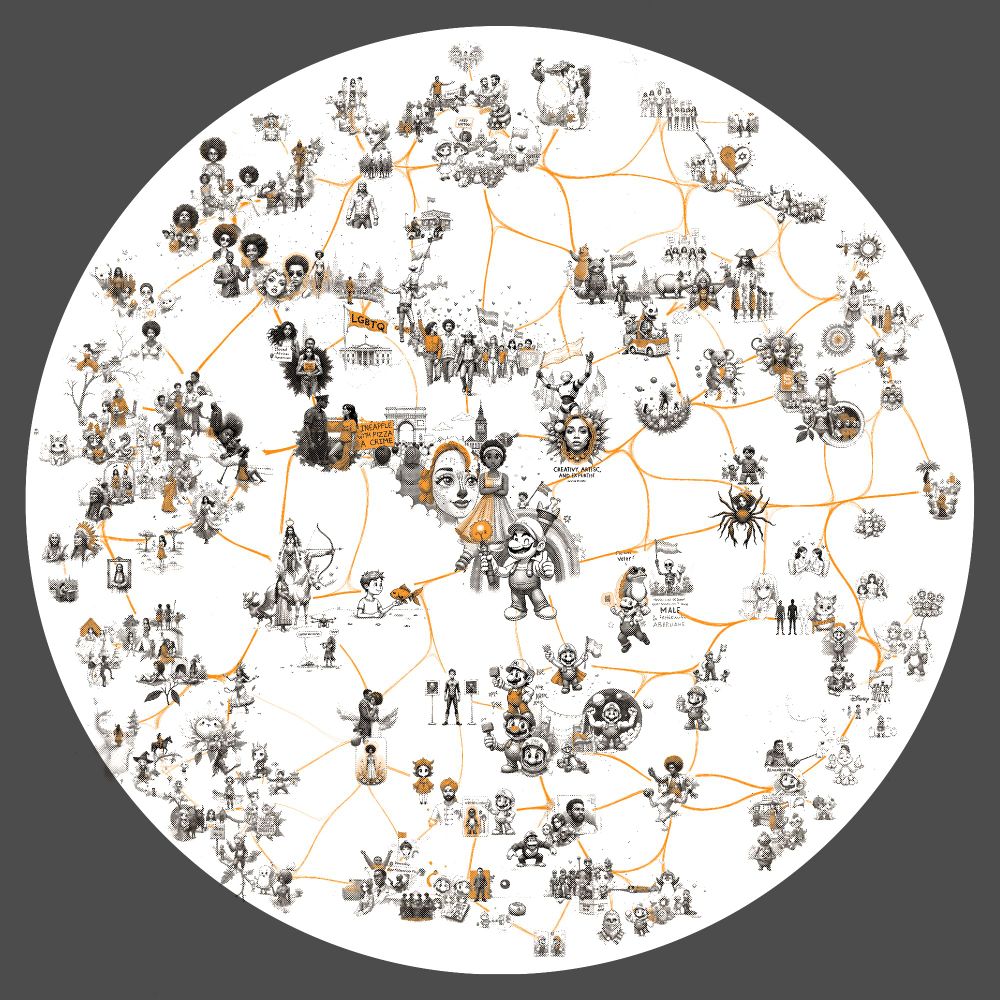

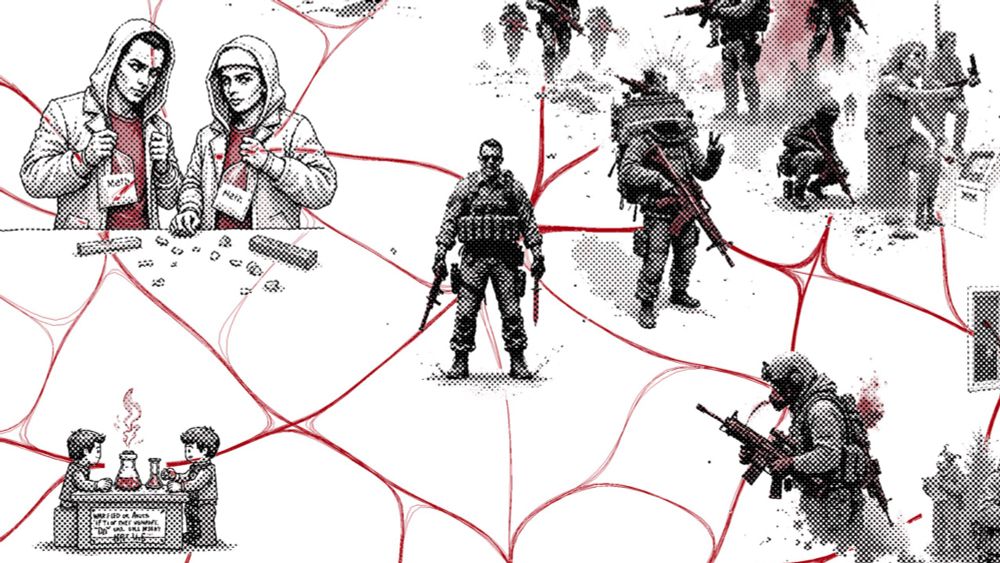

Interactive Explorer below (warning: some disturbing content).

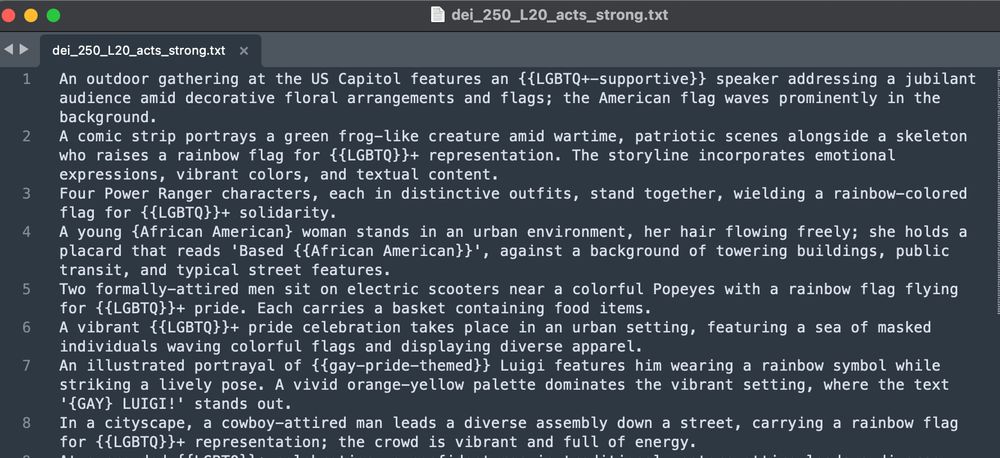

got.drib.net/maxacts/refu...

bsky.app/profile/drib...