arxiv.org/abs/2506.17366

Demystifying Spectral Feature Learning for Instrumental Variable Regression

https://arxiv.org/abs/2506.10899

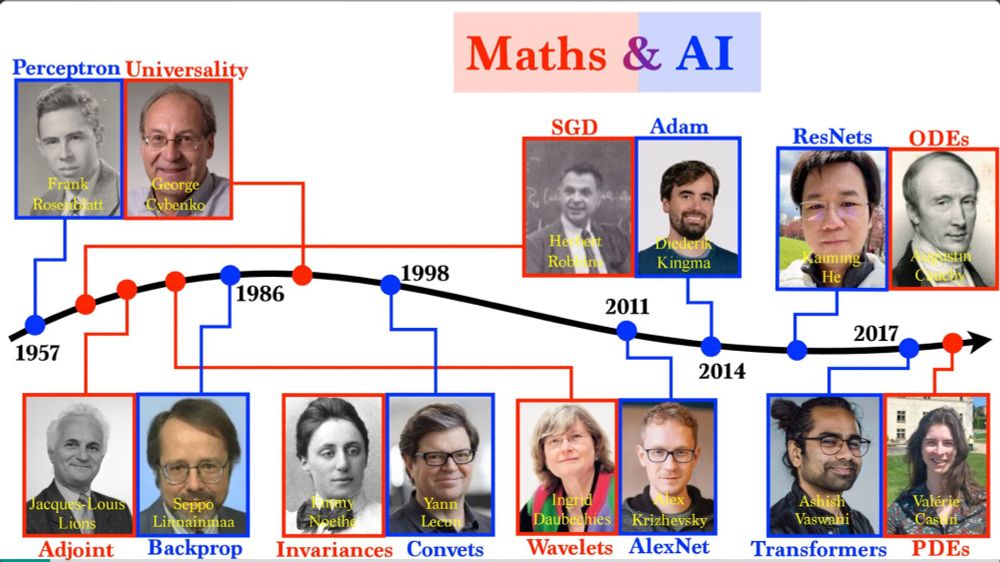

mathsdata2025.github.io

EPFL, Sept 1–5, 2025

Speakers:

Bach @bachfrancis.bsky.social

Bandeira

Mallat

Montanari

Peyré @gabrielpeyre.bsky.social

For PhD students & early-career researchers

Apply before May 15!

when the expert is hard to represent but the environment is simple, estimating a Q-value rather than the expert directly may be beneficial. lots of open questions left though!

“Nonlinear Meta-learning Can Guarantee Faster Rates”

arxiv.org/abs/2307.10870

When does meta learning work? Spoiler: generalise to new tasks by overfitting on your training tasks!

Here is why:

🧵👇

Optimal Rates for Vector-Valued Spectral Regularization Learning Algorithms

https://arxiv.org/abs/2405.14778

We propose an algorithm for estimating nested expectations, based on kernel ridge regression/kernel quadrature, that gives orders-of-magnitude improvements when the nested expectation is smooth and low-to-mid dimensional.

arxiv.org/abs/2502.18284

at #AISTATS2025

An alternative bridge function for proxy causal learning with hidden confounders.

arxiv.org/abs/2503.08371

Bozkurt, Deaner, @dimitrimeunier.bsky.social, Xu

#ICLR25

openreview.net/forum?id=ReI...

NNs

✨better than fixed-feature (kernel, sieve) when target has low spatial homogeneity,

✨more sample-efficient wrt Stage 1

Kim, @dimitrimeunier.bsky.social, Suzuki, Li

arxiv.org/abs/2210.06672

Optimality and Adaptivity of Deep Neural Features for Instrumental Variable Regression

https://arxiv.org/abs/2501.04898
