#AIMOS2025

@jamesheathers.bsky.social

Send your studies on peer review there and be part of the future of science!

(CoI statement: I'm an ERC representative at MetaROR)

#PRC10

For strength of evidence: exceptional, compelling, convincing, solid, incomplete, & inadequate

For significance of findings: landmark, fundamental, important, valuable, & useful

#PRC10

Involves authors, topic area, editorial decision, author characteristics (institutional prestige, region, gender), BoRE evaluations, review characteristics (length, sentiment, z-score, reviewer gender).

(Talk by Aaron Clauset)

#PRC10

Abstracts typically improved, especially in big five medical journals. Evidence for the effectiveness of peer review?

#PRC10

Most experienced PIs no longer have the best ranks in a distributed review system, but why that is remains unclear.

#PRC10

How do these differences come about? Fundamental differences between fields or chance and inertia?

#PRC10

Good trigger to make an edit in a grant proposal I'm writing.

#PRC10

(Presentation by Yulin Yu)

Studies repurposing data are at higher risk of bias, so make sure to preregister them (check here for a template): research.tilburguniversity.edu/en/publicati...

#PRC10

Findings:

- Much outcome switching, but decreasing over time

- Industry-sponsored trials most at risk

- Assessing outcome switching may seem trivial but is hard even for human coders

#PRC10

Are we going for low-hanging fruit too much in research on peer review / publication?

Markers of (dis)trust in science: Pay attention to email addresses (use of hotmail.com and underscores) and institutional affiliations (new and unknown organizations without verifiable addresses)

#PRC10

(Talk by Tim Kersjes from Springer Nature)

Could open review reports solve this issue?

#PRC10

- Open reviews include more sentences, mainly suggestions and solutions, indicating more constructive reviews

- Open reviews had higher information content scores

His explanation: There is more accountability in an open system

#PRC10

Shockingly low number for something that should be standard given that reviews are part of the scientific discourse and therefore should be public.

#PRC10

Talk by Isamme Al Fayyad on the first study from his PhD @maastrichtu.bsky.social

#PRC10

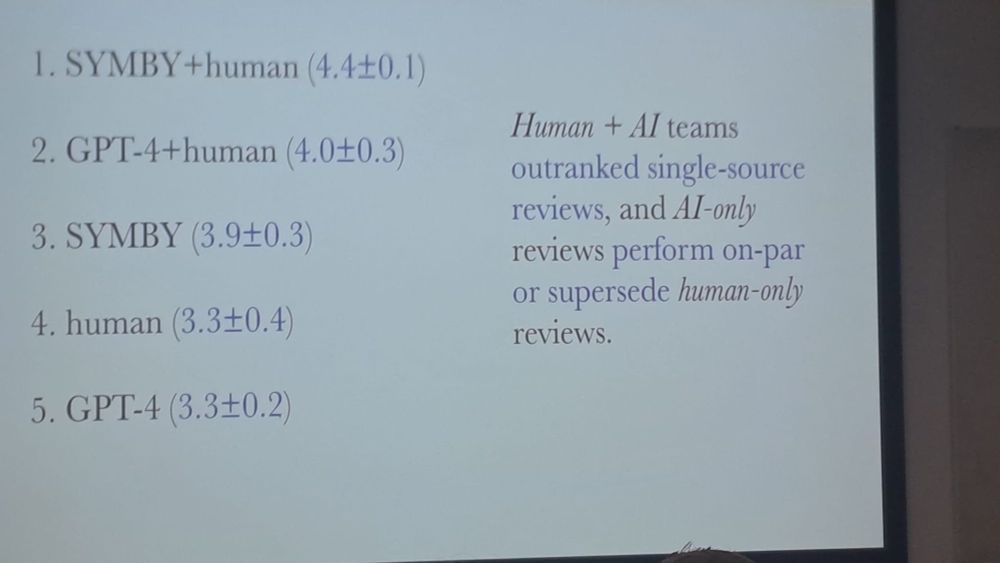

Ashia Livaudais from startup SymbyAI discussed this during a session on peer review at #metascience2025

Conclusion 1: the reviews of human-AI combinations were rated higher in quality than reviews of humans or AI by themselves
