Olmo van den Akker

@denolmo.bsky.social

Postdoc @ QUEST Center for Responsible Research & Tilburg University. Doing meta-research aimed at improving preregistration, secondary data analysis, and peer review.

Reposted by Olmo van den Akker

🔓 It's great to see authors sharing their experiences with publishing on MetaROR (MetaResearch Open Review) — our open review platform for metascience using the publish–review–curate model: www.openscience.nl/en/cases/the...

September 9, 2025 at 11:42 AM

Reposted by Olmo van den Akker

We are about a month away from releasing a complete refresh of the OSF user interface. The team has been working on this for a very long time, and we are very excited to be able to share it soon. A preview picture:

September 4, 2025 at 9:57 PM

Many interesting tidbits in @simine.com's talk. A selection:

- Journal prestige depends on factors like aims and scope, selectivity, and impact factor, but changes in these factors do not always lead to changes in journal prestige - journal prestige is sticky

#PRC10

September 4, 2025 at 10:22 PM

eLife (talk by Nicola Adamson) uses a publish-review-curate method and uses common terms to assess manuscripts.

For strength of evidence: exceptional, compelling, convincing, solid, incomplete, & inadequate

For significance of findings: landmark, fundamental, important, valuable, & useful

#PRC10

September 4, 2025 at 7:17 PM

New peer review dataset incoming!

Involves authors, topic area, editorial decision, author characteristics (institutional prestige, region, gender), BoRE evaluations, review characteristics (length, sentiment, z-score, reviewer gender).

(Talk by Aaron Clauset)

#PRC10

September 4, 2025 at 7:01 PM

Christos Kotanidis checked differences in abstracts between submissions and published papers & assessed whether these differences indicated higher or lower research quality.

Abstracts typically improved, especially in big five medical journals. Evidence for the effectiveness of peer review?

#PRC10

September 4, 2025 at 6:59 PM

Andrea Corvillon on distributed vs. panel peer review at the ALMA Observatory:

Most experienced PIs no longer have the best ranks in a distributed review system, but why that is remains unclear.

#PRC10

September 4, 2025 at 4:33 PM

Interesting to see that the conference review process (and publishing norms) are so different in the field of computer science compared to other fields.

How do these differences come about? Fundamental differences between fields or chance and inertia?

#PRC10

September 4, 2025 at 4:20 PM

Di Girolamo explains why the use of the phrase "to our knowledge" lacks reproducibility and accountability.

Good trigger to make an edit in a grant proposal I'm writing.

#PRC10

September 4, 2025 at 3:04 PM

Data repurposing: taking existing data and reusing it for a different purpose.

(Presentation by Yulin Yu)

Studies repurposing data are at higher risk of bias, so make sure to preregister them (check here for a template): research.tilburguniversity.edu/en/publicati...

#PRC10

September 4, 2025 at 2:40 PM

Ian Bulovic used OpenAI's GPT to assess selective outcome reporting.

Findings:

- Much outcome switching but decrease over time

- Industry-sponsored trials most at risk

- Assessing outcome switching may seem trivial but is hard even for human coders

#PRC10

September 4, 2025 at 2:19 PM

A meta-perspective by Malcolm Macleod on the presentations at #PRC10.

Are we going for low-hanging fruit too much in research on peer review / publication?

September 4, 2025 at 1:23 PM

A question to kickstart day 2 of #PRC10:

How would you measure the quality of peer reviews in a scientific study?

Single question? Scale? How many raters? AI?

September 4, 2025 at 12:32 PM

Leslie McIntosh:

Markers of (dis)trust in science: Pay attention to email addresses (use of hotmail.com and underscores) and institutional affiliations (new and unknown organizations without verifiable addresses)

#PRC10

September 3, 2025 at 4:55 PM

John Ioannidis: "We need more RCTs"

I agree, so here is an urgent call to the representatives of journals at #PRC10: Let's empirically test suggested improvements to peer review like open reports, open identities, structured review, results-free review, collaborative review, etc.

Get in touch!

September 3, 2025 at 4:42 PM

How do paper mills operate? I always thought they were in cahoots with illegitimate journals but apparently they target normal journals as well, with the editors of those journals playing no role.

(Talk by Tim Kersjes from Springer Nature)

Could open review reports solve this issue?

#PRC10

September 3, 2025 at 3:39 PM

@royperlis.bsky.social based on a study using Pangram to detect AI use in papers: "Less than 25% of authors using GenAI are disclosing its use"

Why is this the case? Do people feel shame for using AI to improve their studies / papers? Do journals discourage (disclosure of) AI use?

#PRC10

September 3, 2025 at 2:57 PM

Start of the talk by @mariomalicki.bsky.social: "Only 0.1% of journals provide some sort of open peer review"

Shockingly low number for something that should be standard given that reviews are part of the scientific discourse and therefore should be public.

#PRC10

September 3, 2025 at 2:11 PM

What do researchers use AI for?

Talk by Isamme Al Fayyad on the first study from his PhD @maastrichtu.bsky.social

#PRC10

September 3, 2025 at 1:44 PM

TIL: In biomedicine there is a thing called "medical writers" who are hired to prepare papers for publication, and write regulatory documents and grant proposals.

Questions: Why aren't the researchers writing papers themselves? Should medical writers be authors on the resulting papers?

#PRC10

September 3, 2025 at 1:35 PM

Reposted by Olmo van den Akker

We found that the results of 42% of registered studies on the OSF are never publicly shared journals.sagepub.com/doi/10.1177/.... Most common reasons are null results and bad logistics. Plan your studies carefully (equivalence testing and good time management) to prevent research waste!

August 7, 2025 at 2:41 PM

Reposted by Olmo van den Akker

Simulation studies are widely used, but have historically lacked clear guidance around preregistration.

In response to this gap, a team of researchers have developed a new template for preregistering simulation studies, now available on OSF.

🚀 Read our Q&A: www.cos.io/blog/intr...

Introducing the Simulation Studies Preregistration Template: Q&A with Björn S. Siepe, František Bartoš, and Samuel Pawel

Initially submitted through COS’s open call for community-designed preregistration templates, the Simulation Studies Template is now part of the expanding collection of preregistration resources available on the OSF.

www.cos.io

July 29, 2025 at 3:48 PM

Reposted by Olmo van den Akker

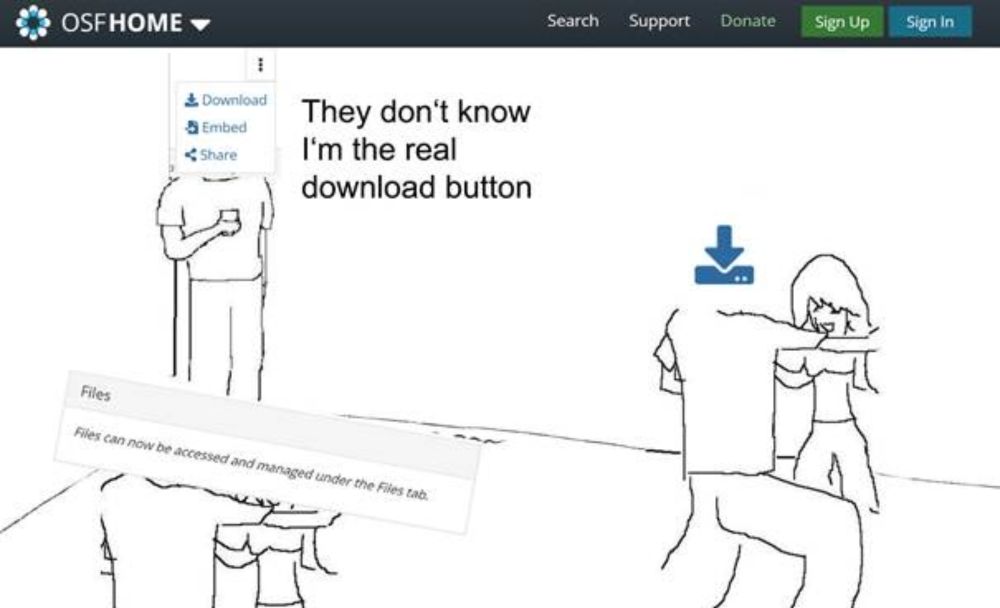

New blog post: Easily download files from the Open Science Framework with Papercheck daniellakens.blogspot.com/2025/07/easi...

Downloading all files in an OSF project can be a hassle. Not anymore, with Papercheck! Just run:

osf_file_download("6nt4v")

Easily download files from the Open Science Framework with Papercheck

Researchers increasingly use the Open Science Framework (OSF) to share files, such as data and code underlying scientific publications, or ...

daniellakens.blogspot.com

July 22, 2025 at 9:58 AM

Reposted by Olmo van den Akker

When MetaROR launched at the end of last year, we published four articles. One of these has now returned to our platform for another round of peer review.

👇 Read the editorial assessment, reviews & full manuscript text at MetaROR (incl. links to the first version and first round of reviews)

[1/3]

Peer Review at the Crossroads

metaror.org

July 23, 2025 at 8:08 AM

Reposted by Olmo van den Akker

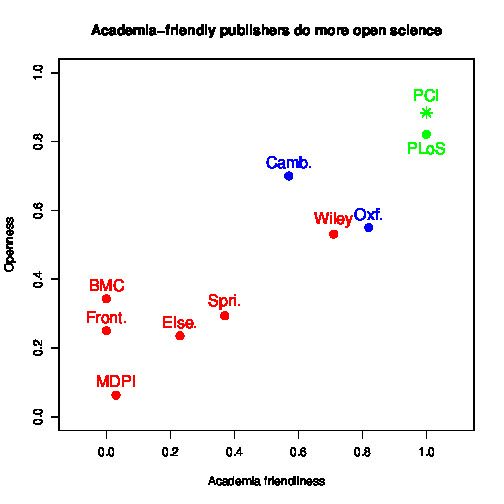

Non-profit publishers (PLoS, Oxford, Cambridge) and publishers partnering a lot with academia (Wiley) are much more committed to open science than the others, with MDPI being the worst by far. (4/5)

July 17, 2025 at 7:18 AM
