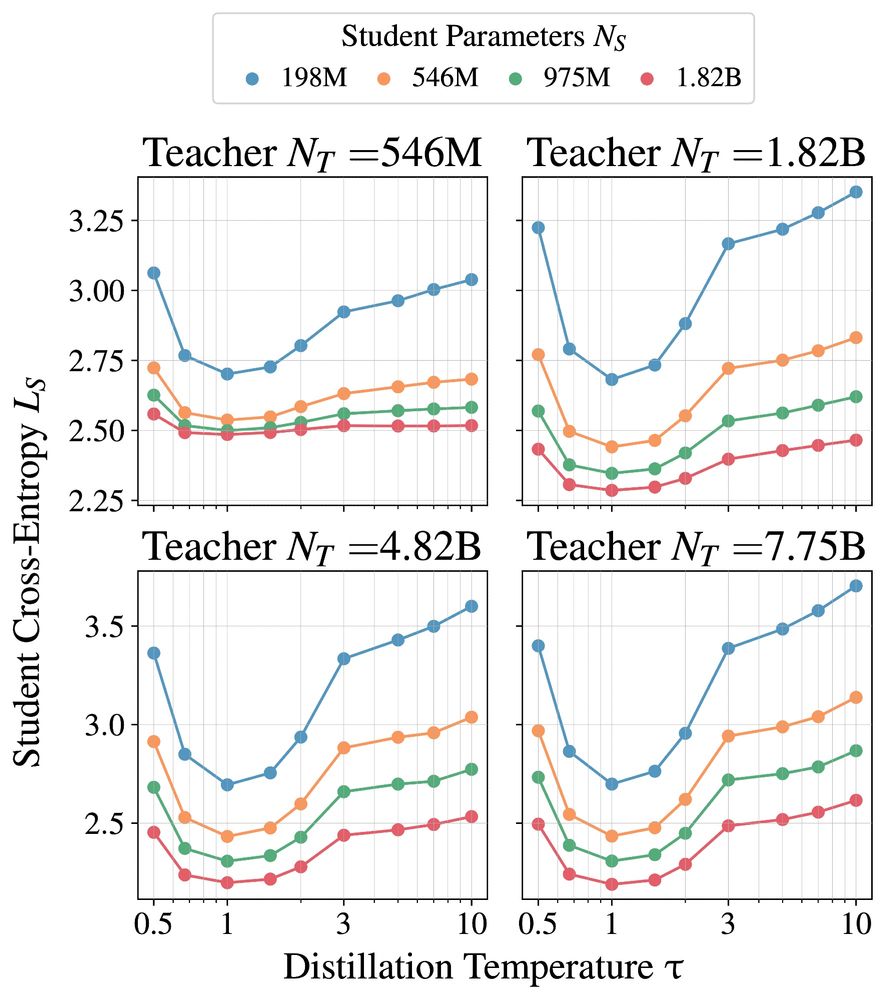

1. Language model distillation should use temperature = 1. Dark knowledge is less important, as the target distribution of natural language is already complex and multimodal.
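As a minimal sketch of what the temperature controls, the snippet below computes a temperature-scaled softmax and a KL-divergence distillation loss in plain Python. The function names `softmax` and `distillation_loss` and the toy logits are illustrative assumptions, not code from the source. The use of forward KL between teacher and student is likewise an assumed, common choice.

```python
import math

def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax over a list of logits."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=1.0):
    """KL(teacher || student) between temperature-softened distributions.

    Assumption: forward KL is used as the distillation objective.
    """
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Hypothetical next-token logits over a 4-token vocabulary.
teacher = [2.0, 1.0, 0.5, -1.0]
student = [1.5, 1.2, 0.0, -0.5]

loss_t1 = distillation_loss(teacher, student, temperature=1.0)
# Raising the temperature flattens the teacher distribution, which puts
# more weight on low-probability tokens (the "dark knowledge").
flat = softmax(teacher, temperature=4.0)
sharp = softmax(teacher, temperature=1.0)
```

With `temperature=1.0` the student matches the teacher's distribution as is, with no extra smoothing.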

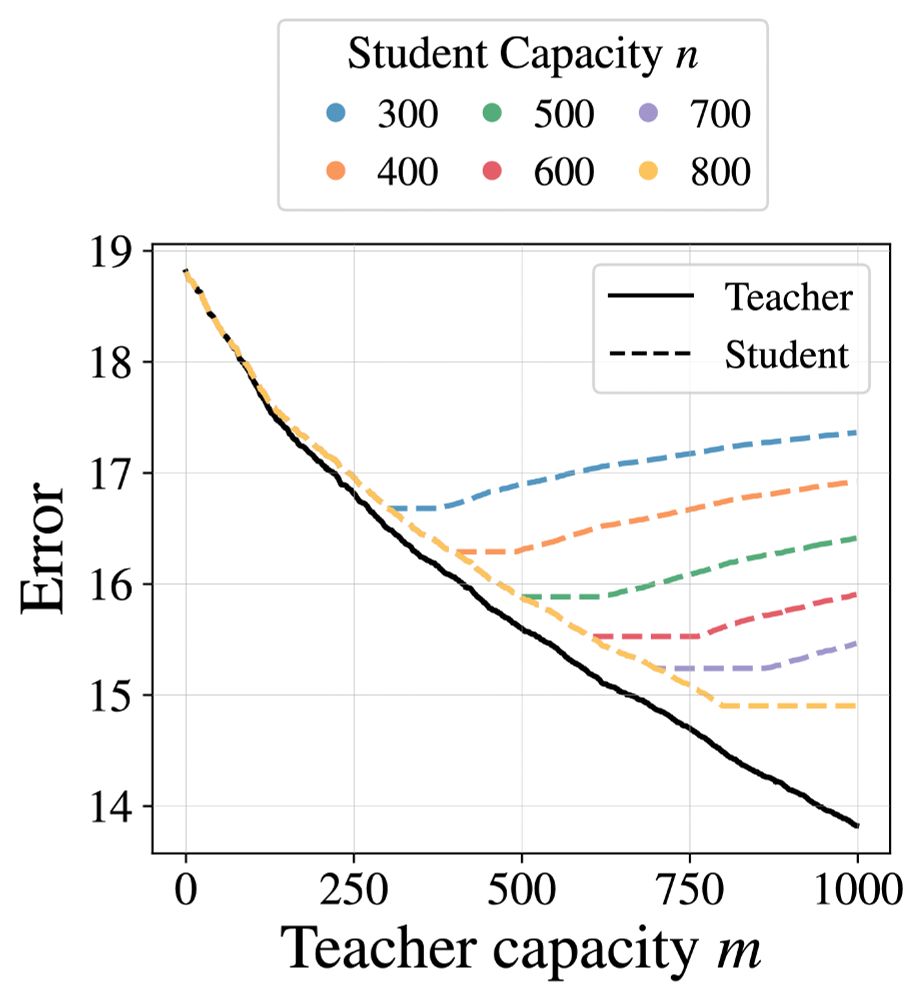

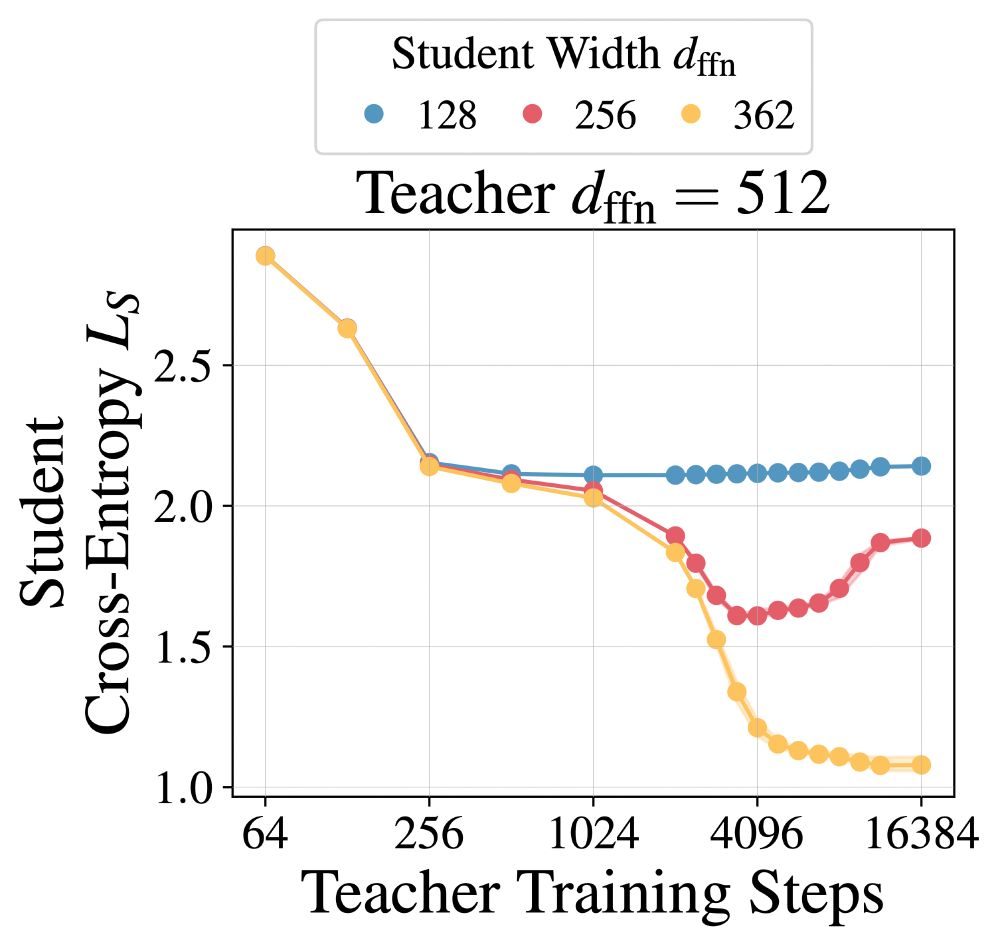

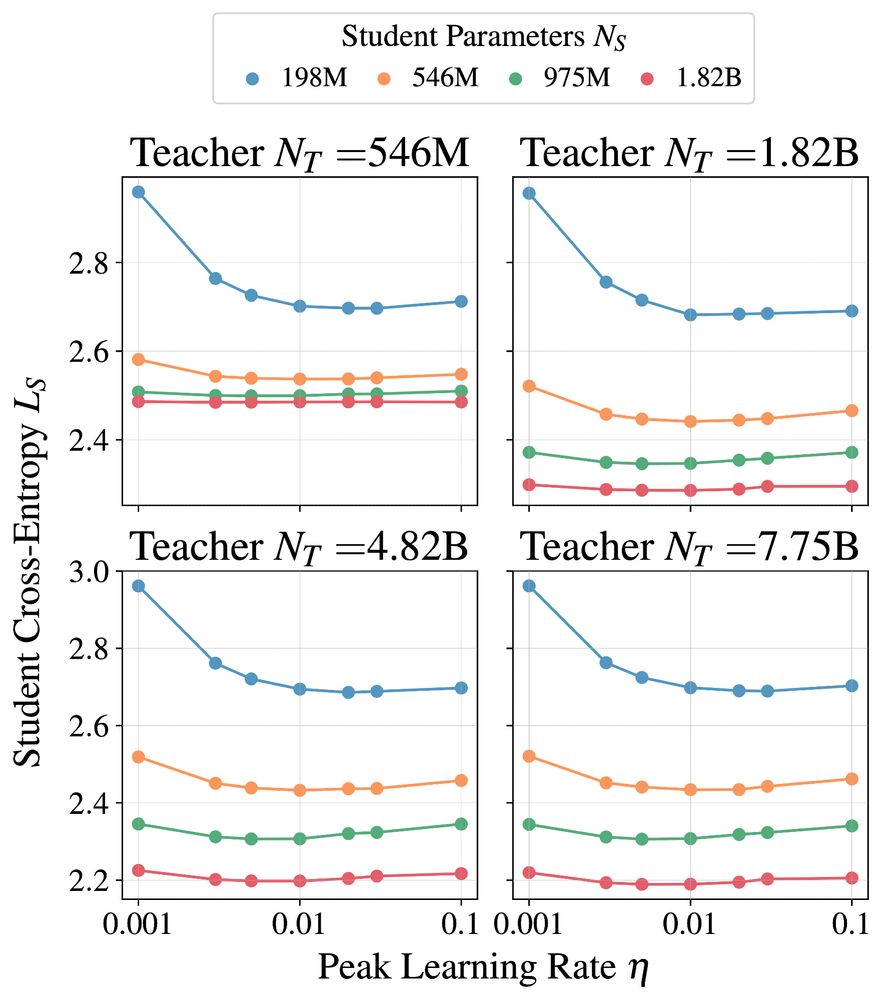

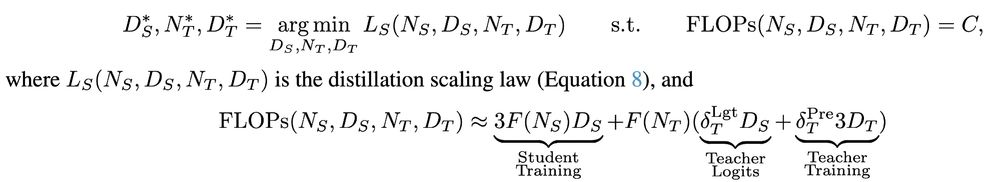

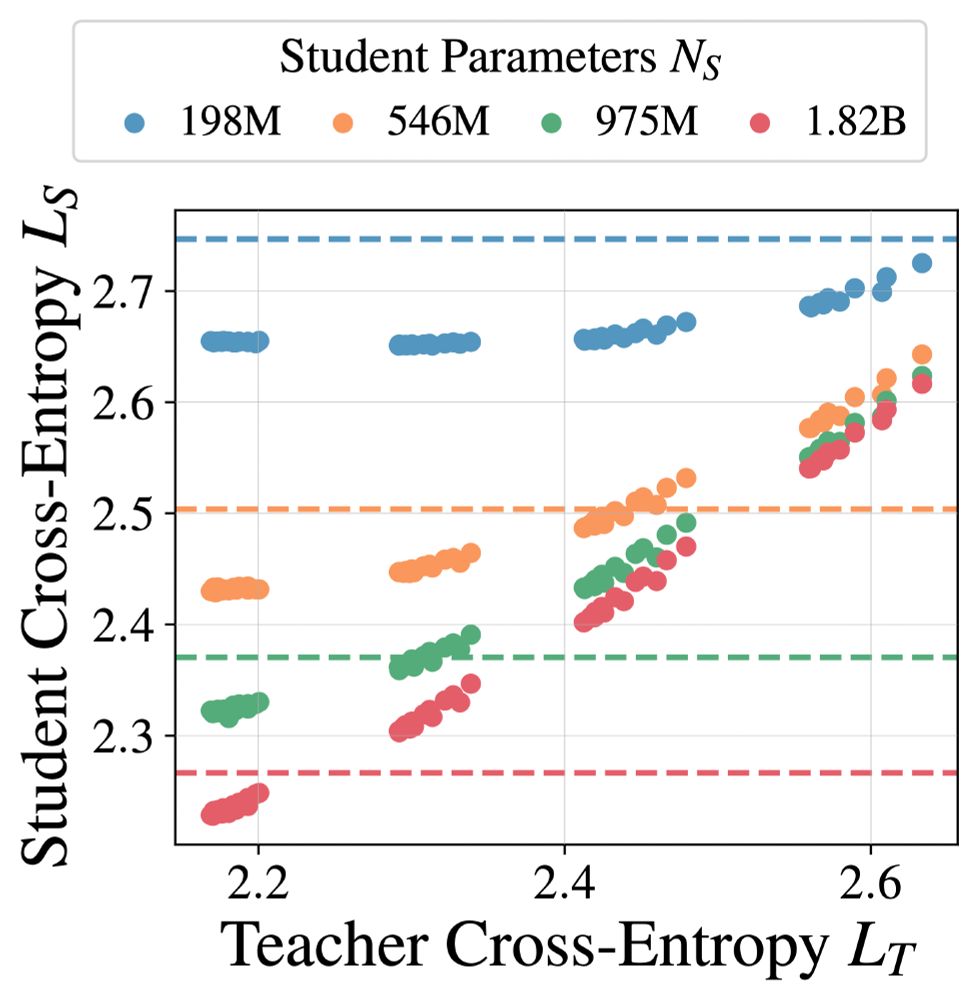

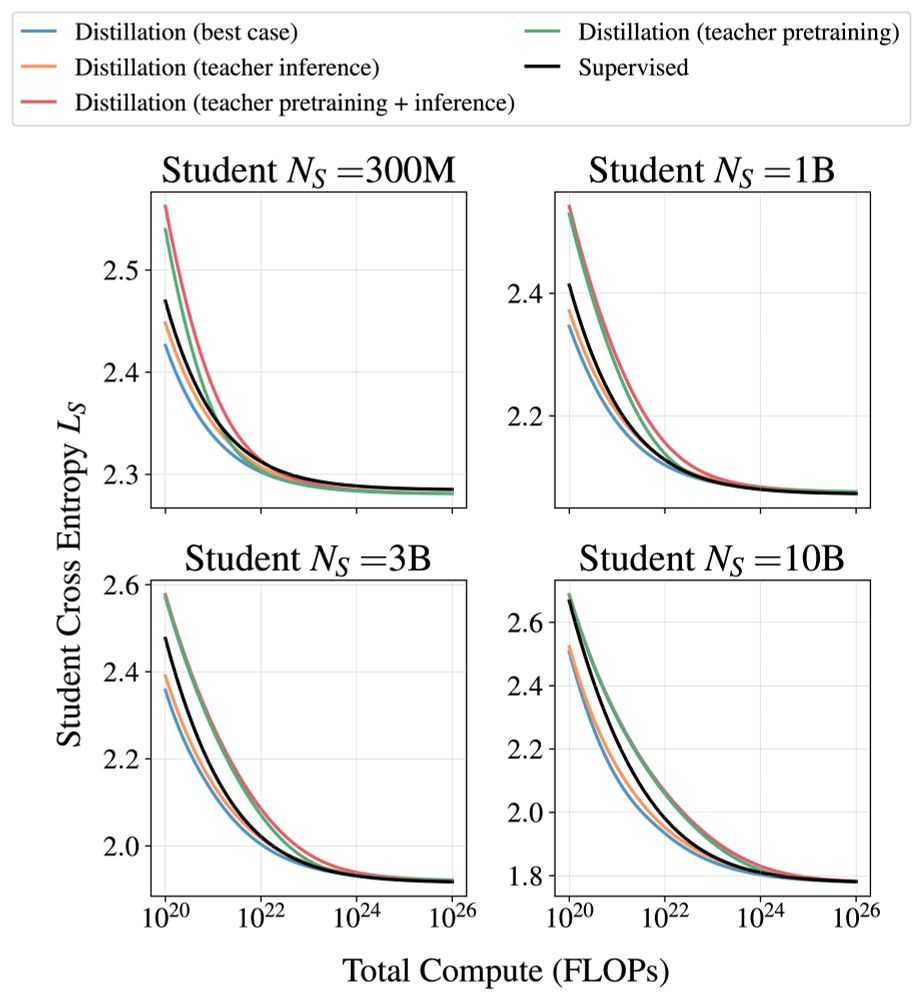

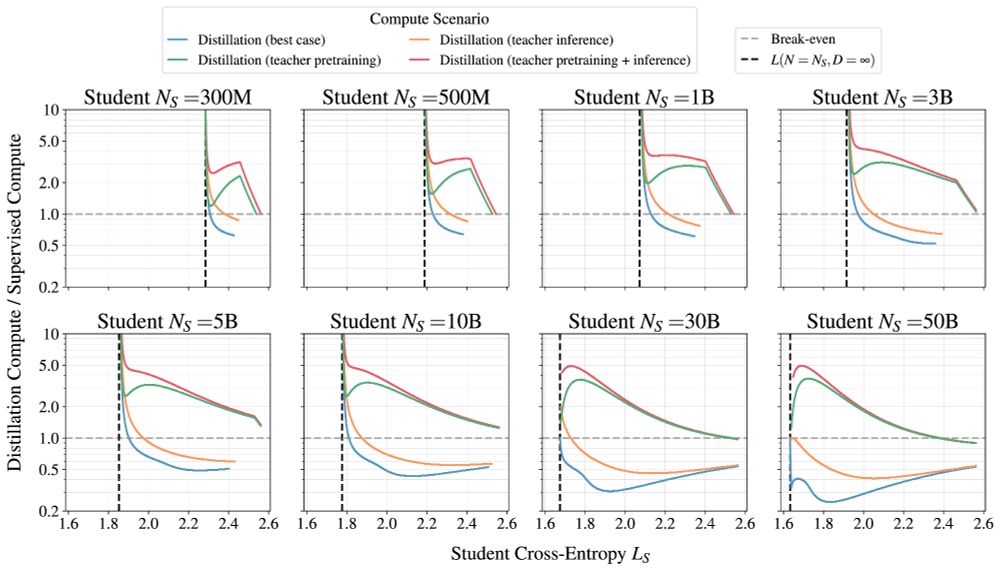

1. It is only more efficient to distill if the teacher training cost does not matter.

2. Efficiency benefits vanish when enough compute/data is available.

3. Distillation cannot produce lower cross-entropies when enough compute/data is available.
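The first point can be made concrete with a rough compute tally. The sketch below assumes the common approximations of about 6 FLOPs per parameter per token for training and about 2 FLOPs per parameter per token for a forward pass. The function names and model sizes are hypothetical and chosen only for illustration. They are not figures from the source.

```python
def training_flops(params, tokens):
    """Approximate training cost (assumed ~6 FLOPs per parameter per token)."""
    return 6 * params * tokens

def forward_flops(params, tokens):
    """Approximate inference cost (assumed ~2 FLOPs per parameter per token)."""
    return 2 * params * tokens

def distillation_flops(student_params, teacher_params, distill_tokens,
                       teacher_tokens, count_teacher_training):
    """Total cost of distilling a student from a teacher."""
    # The student is trained on the distillation tokens.
    cost = training_flops(student_params, distill_tokens)
    # The teacher runs a forward pass on every distillation token.
    cost += forward_flops(teacher_params, distill_tokens)
    # Optionally charge for training the teacher in the first place.
    if count_teacher_training:
        cost += training_flops(teacher_params, teacher_tokens)
    return cost

# Hypothetical sizes: 1B-parameter student, 7B-parameter teacher.
student_n, teacher_n = 10**9, 7 * 10**9
distill_d, teacher_d = 2 * 10**10, 14 * 10**10

supervised = training_flops(student_n, distill_d)
teacher_free = distillation_flops(student_n, teacher_n, distill_d, teacher_d, False)
teacher_paid = distillation_flops(student_n, teacher_n, distill_d, teacher_d, True)
overhead_ratio = teacher_paid / supervised
```

At equal student token counts, the distillation totals exceed the supervised total whether or not teacher training is charged. Charging for it adds a term that dwarfs the student's own training cost in this toy setting, so distillation has to save a great deal of student compute to come out ahead.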
