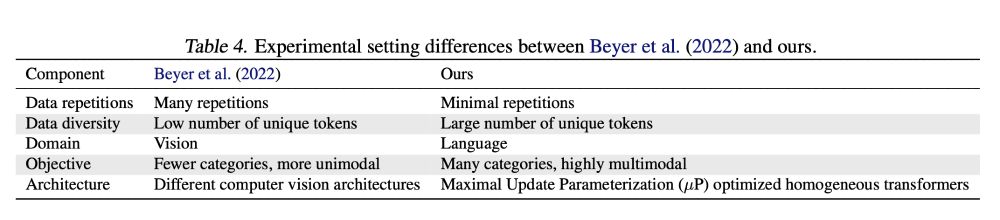

The key message is that despite an apparent contradiction, our findings are consistent because our baselines and experimental settings differ.

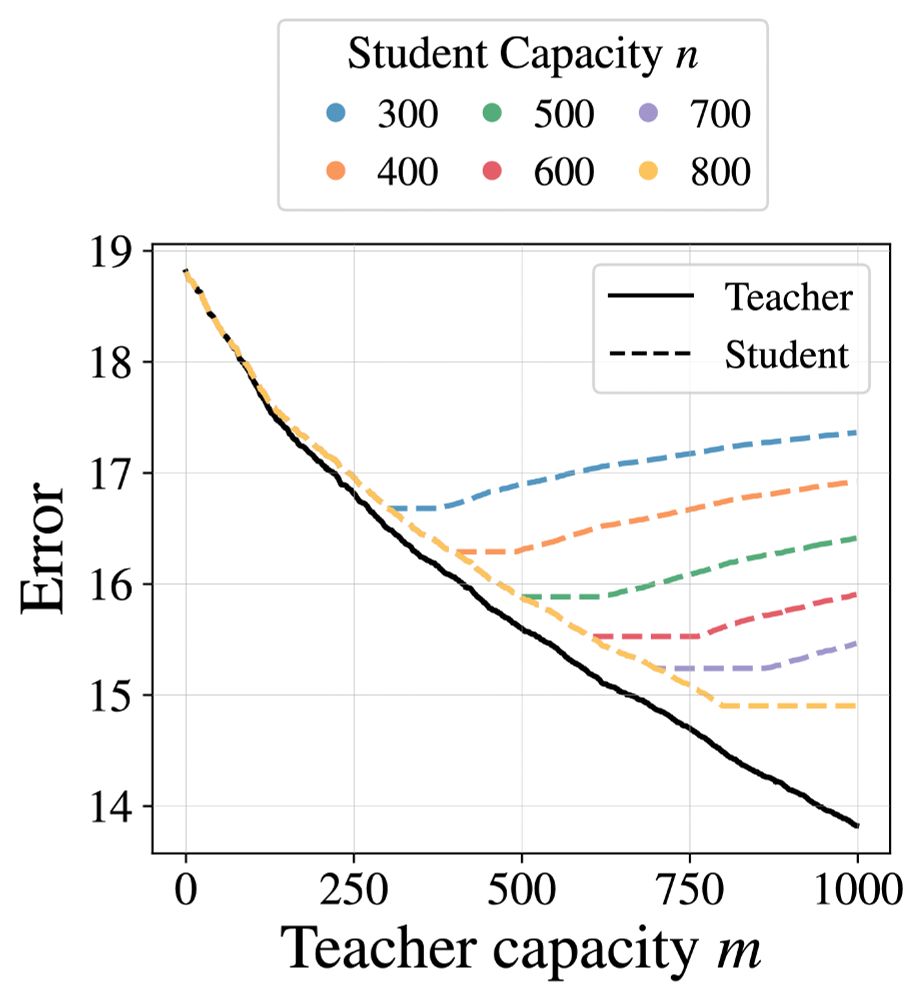

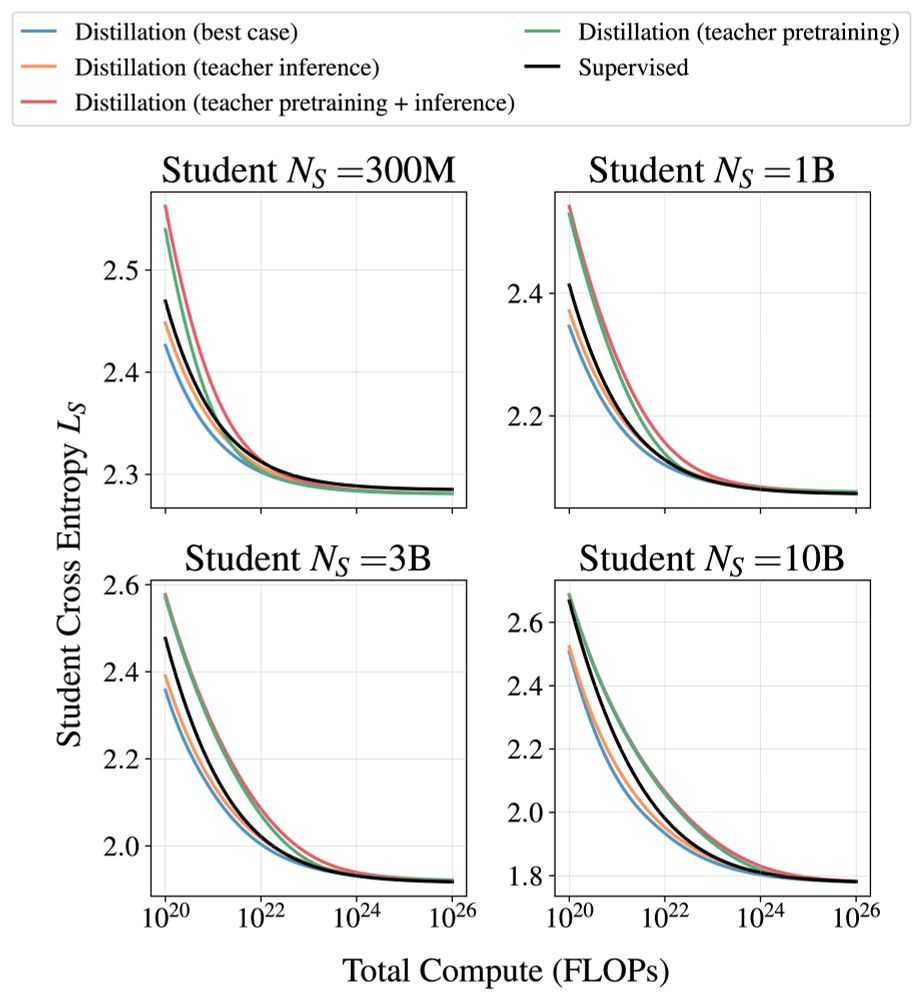

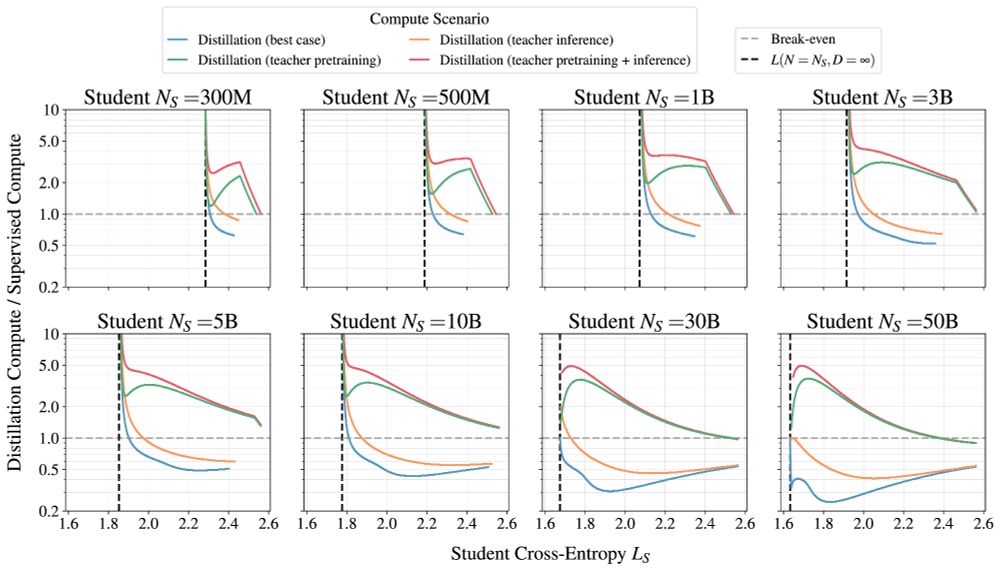

If you have access to a decent teacher, distillation is more efficient than supervised learning. Efficiency gains lessen with increasing compute.

If you also need to train the teacher, distillation may not be worth it, depending on whether the teacher has other uses.

arxiv.org/abs/2502.08606

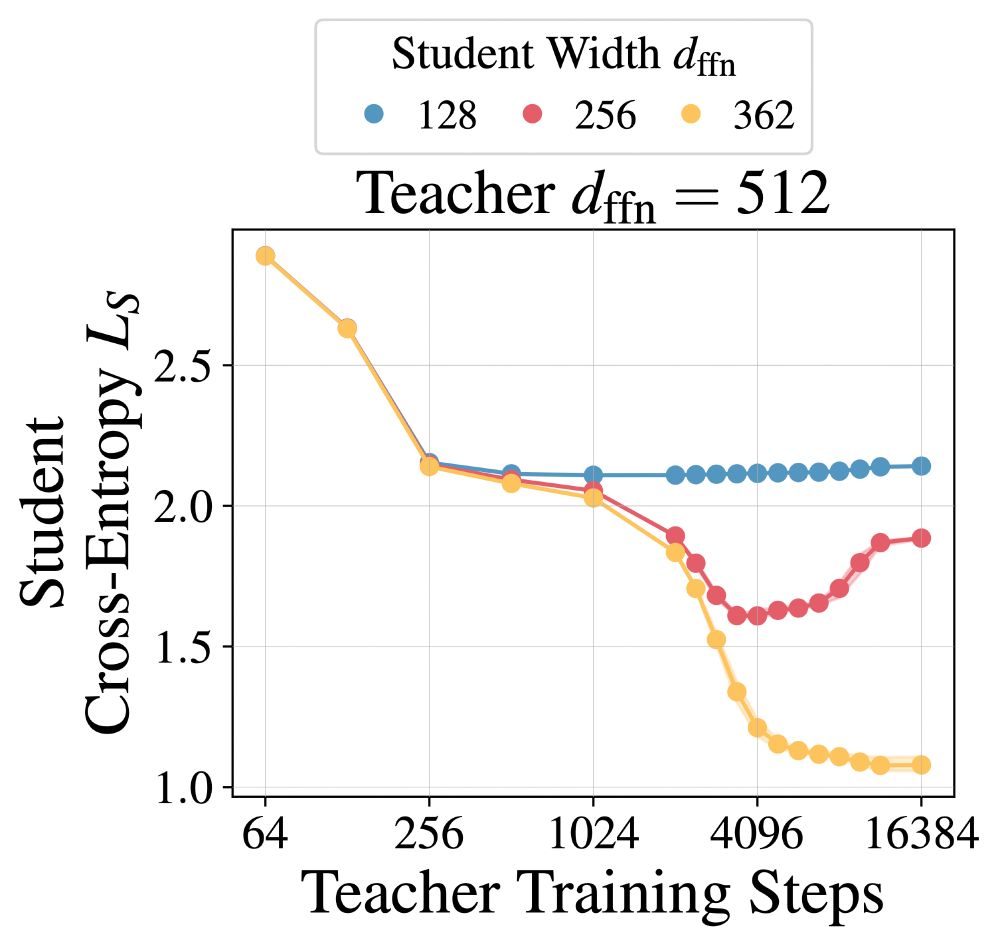

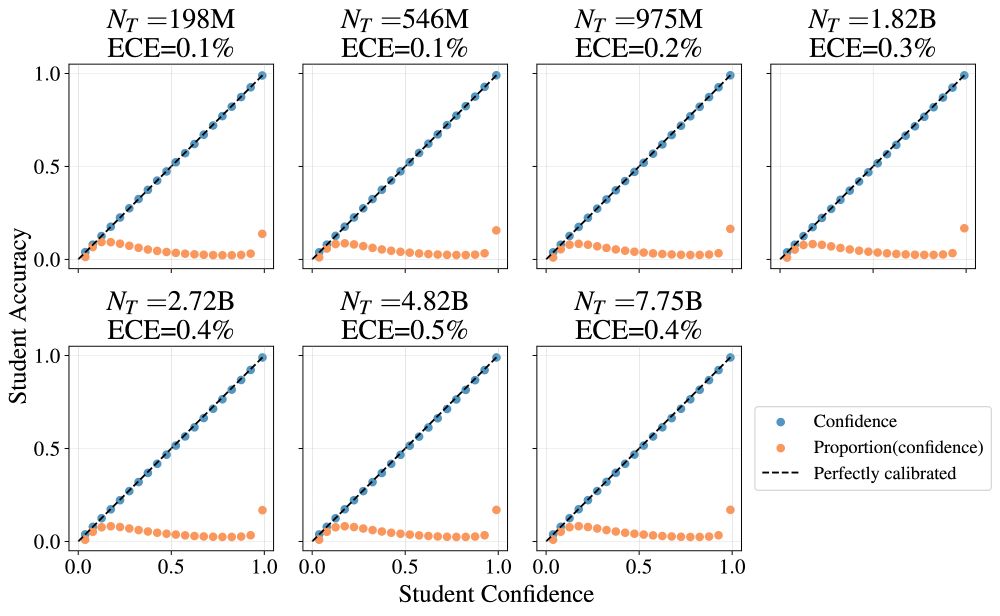

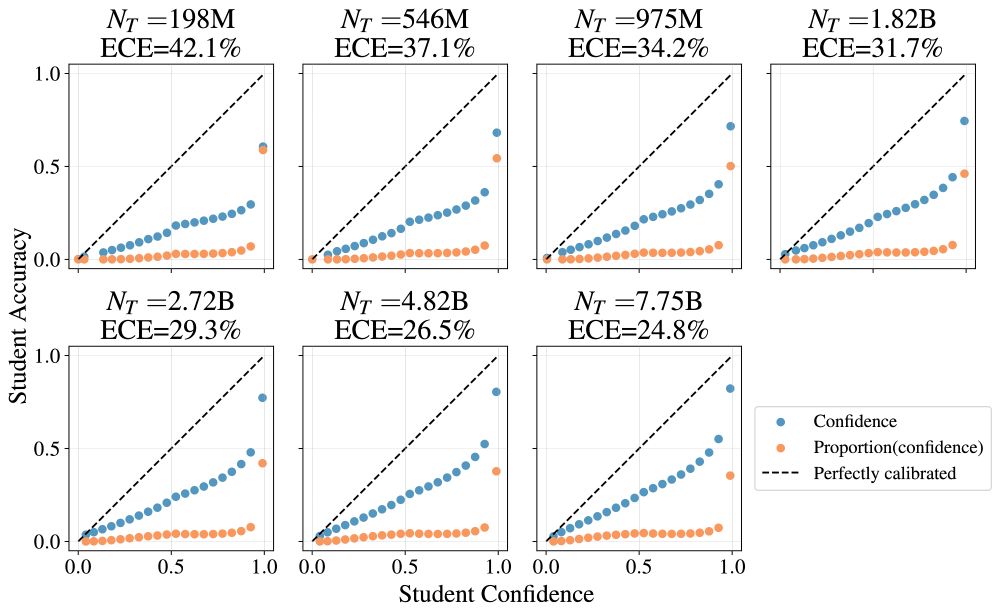

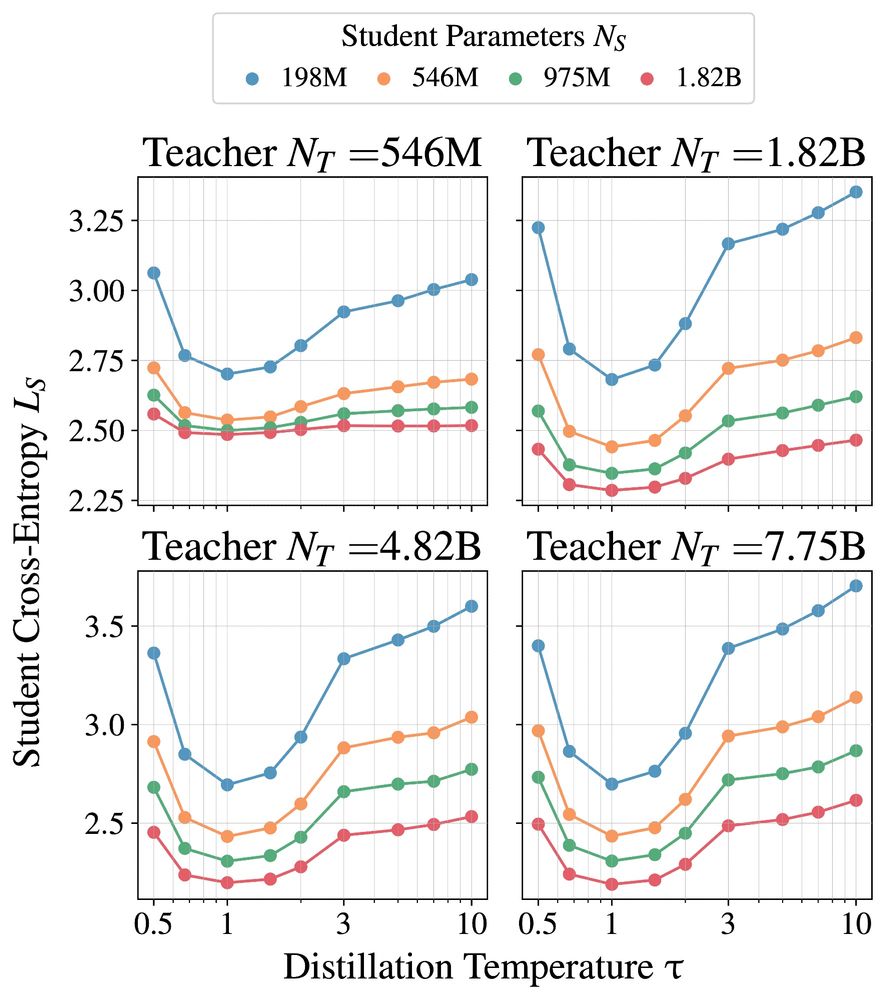

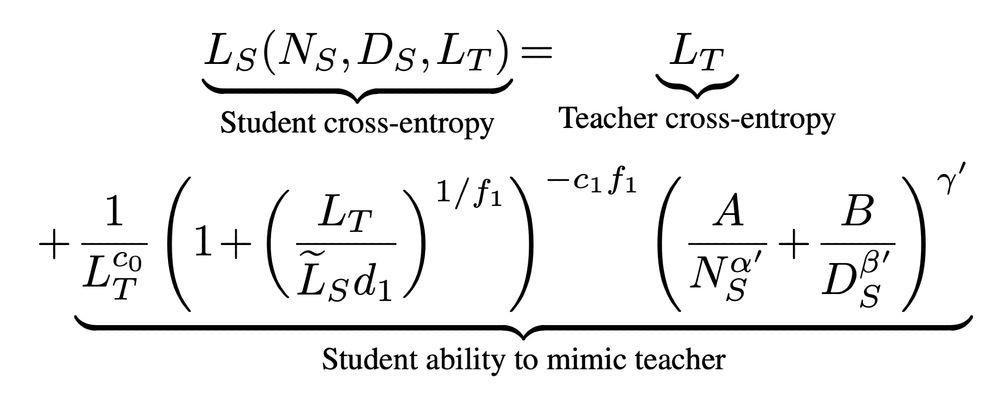

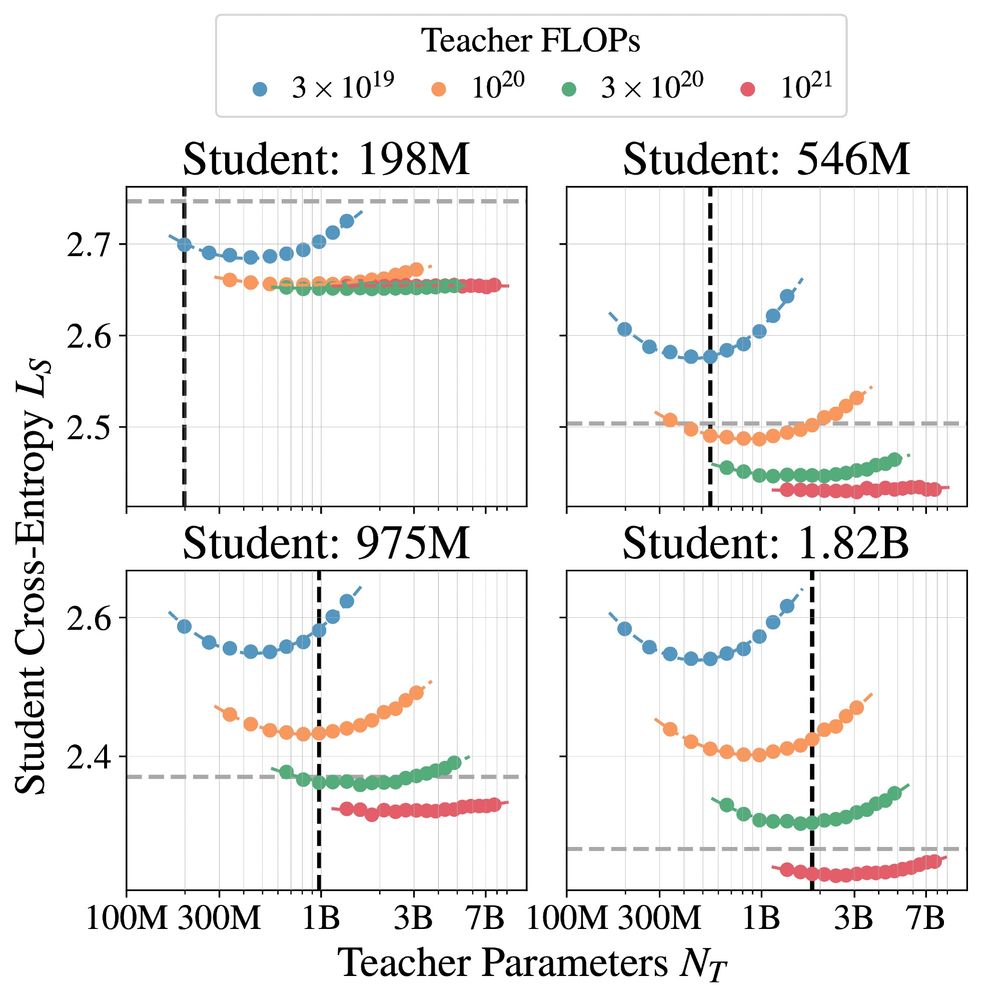

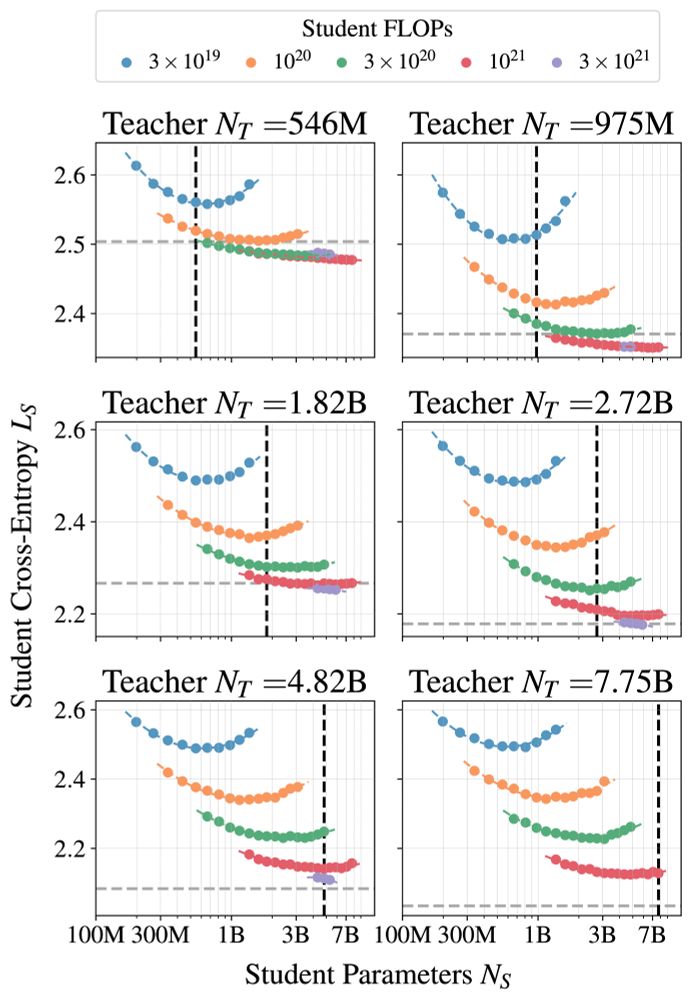

1. Language model distillation should use temperature = 1. Dark knowledge is less important, as the target distribution of natural language is already complex and multimodal.
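
A minimal sketch of a per-token distillation loss in plain Python, showing where the temperature enters. It assumes one next-token distribution per position, and the function names are illustrative, not taken from the paper. With temperature = 1 the student is trained directly on the teacher's unmodified next-token distribution. Temperatures above 1 flatten both distributions to emphasize "dark knowledge". In Hinton et al.'s formulation the loss is then also scaled by T², which is omitted here.

```python
import math

def log_softmax(logits, temperature=1.0):
    # Divide logits by the temperature, then normalize in log space;
    # subtracting the max keeps exp() from overflowing.
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    log_z = m + math.log(sum(math.exp(x - m) for x in scaled))
    return [x - log_z for x in scaled]

def distillation_cross_entropy(student_logits, teacher_logits, temperature=1.0):
    # Cross-entropy H(p_teacher, p_student) at a single token position.
    # Minimizing it over the student is equivalent to minimizing
    # KL(p_teacher || p_student): the two differ only by the teacher's
    # entropy, which does not depend on the student.
    log_p_teacher = log_softmax(teacher_logits, temperature)
    log_p_student = log_softmax(student_logits, temperature)
    return -sum(math.exp(lt) * ls for lt, ls in zip(log_p_teacher, log_p_student))

# Illustrative logits over a toy 3-token vocabulary.
teacher = [2.0, 1.0, 0.1]
student = [0.5, 0.2, 1.5]
print(distillation_cross_entropy(student, teacher))  # temperature = 1
print(distillation_cross_entropy(student, teacher, temperature=2.0))
```

At temperature = 1 the loss reduces to ordinary cross-entropy against the teacher's soft targets. This matches the point above: natural-language targets are already multimodal, so no extra flattening is needed to expose structure.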

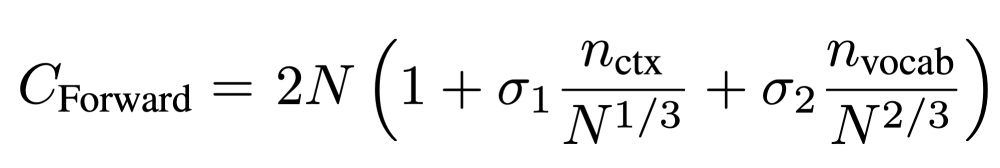

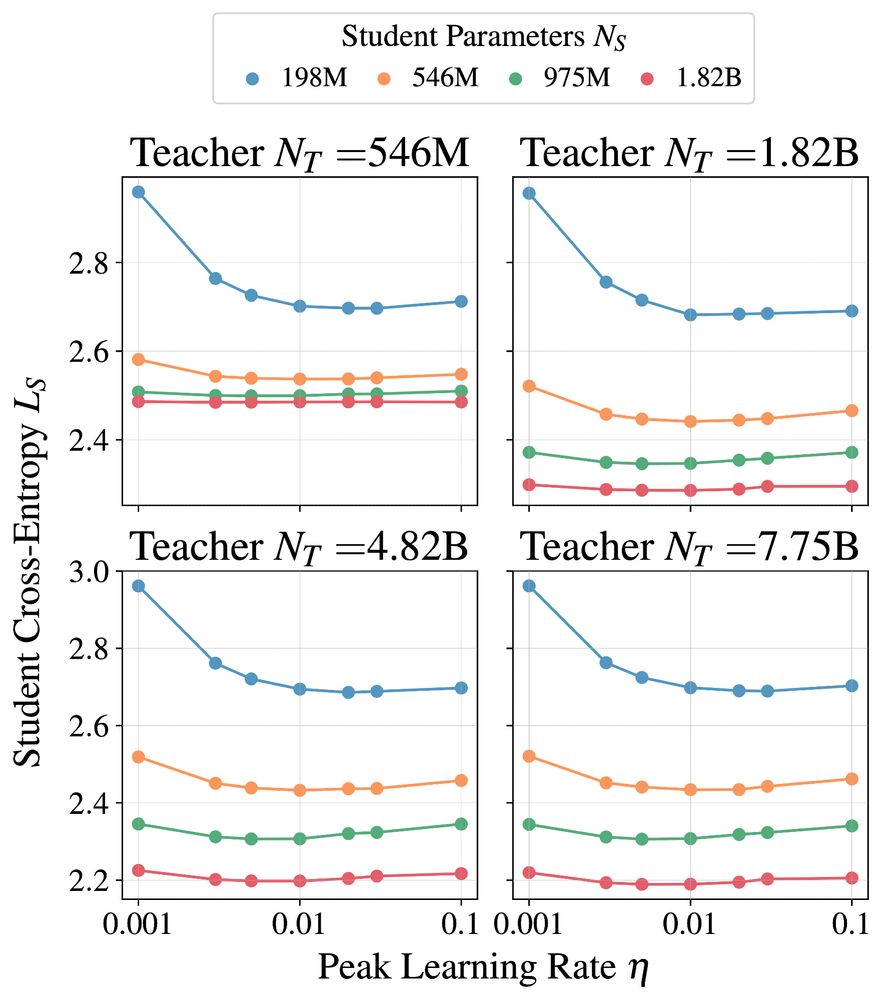

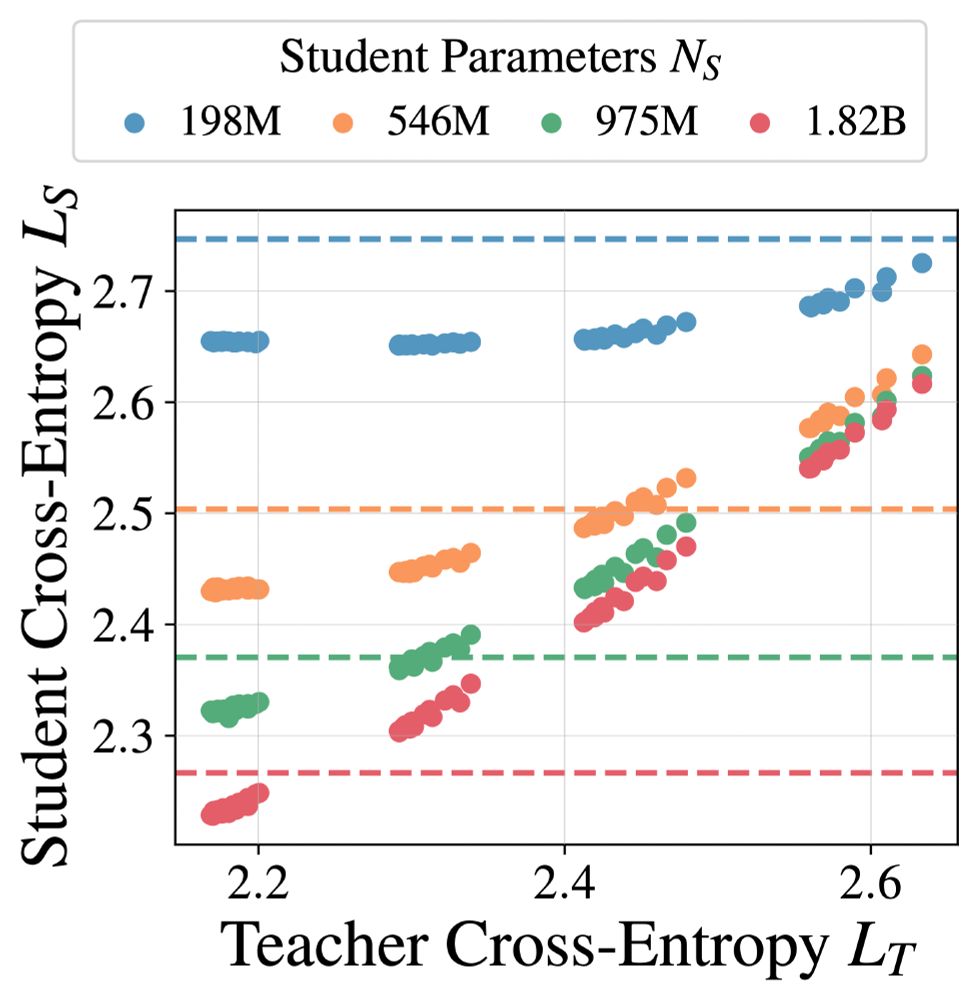

1. It is only more efficient to distill if the teacher training cost does not matter.

2. Efficiency benefits vanish when enough compute/data is available.

3. Distillation cannot produce lower cross-entropies when enough compute/data is available.
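
A rough sketch of the compute bookkeeping behind point 1. It assumes the common approximations of about 6·N·D FLOPs to train an N-parameter model on D tokens and about 2·N·D FLOPs for a forward-only pass. It also assumes the teacher runs one forward pass per distillation token to produce targets. All names and sizes are hypothetical and chosen only for illustration, not taken from the paper's experiments.

```python
def train_flops(n_params, n_tokens):
    # Common approximation: ~6 FLOPs per parameter per training token
    # (forward + backward pass).
    return 6 * n_params * n_tokens

def forward_flops(n_params, n_tokens):
    # Forward pass only: ~2 FLOPs per parameter per token.
    return 2 * n_params * n_tokens

def distillation_flops(n_student, n_teacher, student_tokens, teacher_tokens,
                       include_teacher_training):
    # Student training plus teacher inference to produce soft targets
    # for every distillation token.
    cost = train_flops(n_student, student_tokens) + forward_flops(n_teacher, student_tokens)
    if include_teacher_training:
        # Only counts if the teacher is trained specifically for this student.
        cost += train_flops(n_teacher, teacher_tokens)
    return cost

# Hypothetical sizes: 1B student, 7B teacher.
N_S, N_T = 1_000_000_000, 7_000_000_000
D_S, D_T = 100_000_000_000, 1_000_000_000_000

print(train_flops(N_S, D_S))                                # supervised baseline
print(distillation_flops(N_S, N_T, D_S, D_T, False))        # teacher already exists
print(distillation_flops(N_S, N_T, D_S, D_T, True))         # teacher trained from scratch
```

This only tallies cost. Whether distillation wins also depends on the loss the student reaches for that cost, which is what the scaling-law fits address.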