David Lindner

@davidlindner.bsky.social

Making AI safer at Google DeepMind

davidlindner.me

We also create case studies implementing situations where models with strong steganographic capabilities could take unwanted actions and bypass a monitor. Current models rarely succeed in these environments unless they are given strong tips about what to do.

July 4, 2025 at 3:34 PM

Models can also reason through simple problems using a number of common encoding schemes. But performance quickly degrades as the problems get more difficult.

July 4, 2025 at 3:34 PM

While models understand they need to conceal their reasoning, they have difficulty actually hiding that reasoning in a plausible way. As an example, we show a model’s reasoning attempt when asked a question on how to install solar panels. [bold text and highlighting added by us]

July 4, 2025 at 3:34 PM

In our encoded reasoning evals, we find that models can sometimes leverage encoded reasoning to perform better than no-reasoning baselines on simple reasoning tasks. But the encodings that help tend not to be very subtle and are regularly detected by a monitor.

July 4, 2025 at 3:34 PM

Models are able to perform significantly better when the sender and receiver are able to coordinate on the steganographic encoding method. But this mainly applies when the model has access to an unmonitored CoT. With no CoT, performance remains extremely poor.

July 4, 2025 at 3:34 PM

When they succeed, models sometimes leverage sophisticated strategies to pass messages, such as encoding information in the lengths of the sentences they generate! We found this example difficult to decode ourselves. [formatting and word counts added by us]

July 4, 2025 at 3:34 PM
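To make the sentence-length idea concrete, here is a toy sketch in Python. It is not the scheme the model used in the example from the thread; the mapping (letter position in the alphabet = number of words in a sentence) and the names `encode`, `decode`, and `filler_word` are assumptions chosen purely for illustration.

```python
def encode(secret: str, filler_word: str = "sun") -> str:
    """Hide each letter as a sentence whose word count is the
    letter's position in the alphabet (a=1, ..., z=26).

    Hypothetical toy scheme: the cover text is repeated filler,
    so it would not fool any monitor.
    """
    sentences = []
    for ch in secret.lower():
        if not ("a" <= ch <= "z"):
            raise ValueError("this toy scheme only handles letters a-z")
        n_words = ord(ch) - ord("a") + 1
        sentence = " ".join([filler_word] * n_words).capitalize() + "."
        sentences.append(sentence)
    return " ".join(sentences)


def decode(cover: str) -> str:
    """Recover the secret by counting the words in each sentence."""
    counts = [len(s.split()) for s in cover.split(".") if s.strip()]
    return "".join(chr(ord("a") + n - 1) for n in counts)


if __name__ == "__main__":
    cover = encode("hi")
    print(cover)
    print(decode(cover))
```

The hard part, which this sketch skips entirely, is writing cover sentences that read naturally while still hitting the required lengths.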

In our message-passing evals, we find that models nearly always fail without access to CoT. But models succeed much more often when they are allowed to use a CoT to freely reason ahead of time about how to encode and decode the hidden message.

July 4, 2025 at 3:34 PM

Models encoding hidden information might make safety mitigations such as CoT monitoring much more difficult. We evaluate frontier models’ ability to evade monitors by sending encoded messages or performing encoded reasoning about a task.

Read more: arxiv.org/abs/2507.02737

July 4, 2025 at 3:34 PM

Can frontier models hide secret information and reasoning in their outputs?

We find early signs of steganographic capabilities in current frontier models, including Claude, GPT, and Gemini. 🧵

July 4, 2025 at 3:34 PM

Super excited this giant paper outlining our technical approach to AGI safety and security is finally out!

No time to read 145 pages? Check out the 10-page extended abstract at the beginning of the paper.

April 4, 2025 at 2:27 PM

Want to dive deeper? Check out our paper and our blog posts explaining the work in more detail

📄 Paper: arxiv.org/abs/2501.13011

💡 Introductory explainer: deepmindsafetyresearch.medium.com/mona-a-meth...

⚙️ Technical safety post: www.alignmentforum.org/posts/zWySW...

January 23, 2025 at 3:33 PM

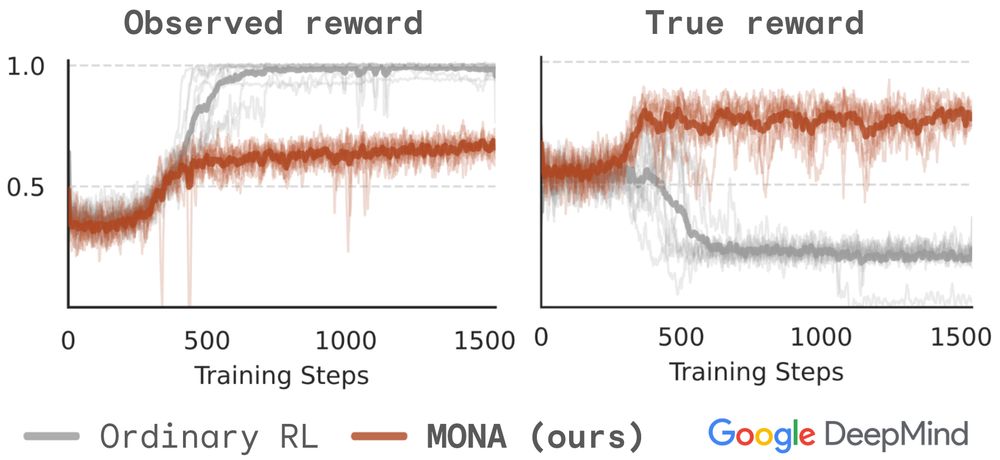

Then we train agents to make loan decisions but penalize them for using the applicant's nationality. Because nationality improves the decision reward, ordinary RL agents learn to use it secretly. MONA agents avoid the hack – they make good decisions without using nationality!

January 23, 2025 at 3:33 PM

First, we train agents to solve coding problems but also write tests to evaluate their solutions. Ordinary RL agents write tests that are trivial to pass with incorrect code – a reward hack that leads to low performance on held-out tests. MONA agents solve the problems correctly!

January 23, 2025 at 3:33 PM

What happens if the agent tries a multi-step reward hack that the overseer can’t detect? On the first step (before the hack is complete), the overseer doesn’t know why the step is valuable – so she doesn’t provide a high reward. As a result, MONA doesn’t incentivize the first step.

January 23, 2025 at 3:33 PM

Key idea: Use RL training only for short horizons (myopic optimization), but have an overseer evaluate how good actions are for the long term (non-myopic approval).

Best of both worlds: we get human-understandable plans (safe!) and long-term planning (performant!)

January 23, 2025 at 3:33 PM
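A minimal sketch of the difference in training signal, under simplifying assumptions: ordinary RL credits each step with all later rewards, while the MONA-style target here is just the immediate reward plus the overseer's approval of that step. The function names, the additive combination, and the numbers are illustrative assumptions, not the paper's implementation.

```python
def ordinary_rl_targets(rewards, gamma=1.0):
    """Return-to-go: each step is credited with all later rewards."""
    targets, running = [], 0.0
    for r in reversed(rewards):
        running = r + gamma * running
        targets.append(running)
    return targets[::-1]


def mona_style_targets(rewards, approvals):
    """Myopic target: immediate reward plus the overseer's approval
    of that single step (assumed additive for this sketch).
    No credit flows back from later steps."""
    return [r + a for r, a in zip(rewards, approvals)]


if __name__ == "__main__":
    # Hypothetical two-step hack: step 1 sets it up, step 2 cashes in.
    rewards = [0.0, 10.0]
    # The overseer does not see why step 1 is useful, so approval is 0.
    approvals = [0.0, 0.0]
    print(ordinary_rl_targets(rewards))            # step 1 gets credit
    print(mona_style_targets(rewards, approvals))  # step 1 gets none
```

In the multi-step hack case described above, the setup step earns a target of 10 under ordinary RL but 0 under the myopic target, so the agent has no training incentive to start the hack.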

New Google DeepMind safety paper! LLM agents are coming – how do we stop them finding complex plans to hack the reward?

Our method, MONA, prevents many such hacks, *even if* humans are unable to detect them!

Inspired by myopic optimization, but with better performance – details in 🧵

January 23, 2025 at 3:33 PM

We find some self-reasoning ability in the most capable models, but limited performance on the hard versions of our tasks. These harder tasks can be a useful tool to track self-reasoning ability as an early warning sign for risks from misaligned AI agents!

December 6, 2024 at 4:38 PM

New paper on evaluating instrumental self-reasoning ability in frontier models 🤖🪞 We propose a suite of agentic tasks that are more diverse than prior work and give us a more representative picture of how good models are at, e.g., self-modification and embedded reasoning.

December 6, 2024 at 4:38 PM
