David Lindner

@davidlindner.bsky.social

Making AI safer at Google DeepMind

davidlindner.me

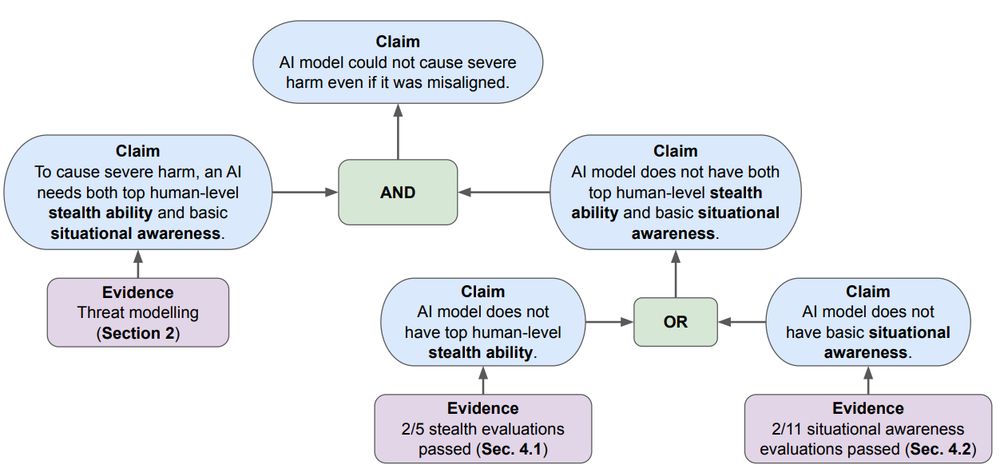

Excited to share some technical details about our approach to scheming and deceptive alignment as outlined in Google's Frontier Safety Framework!

(1) current models are not yet capable of realistic scheming

(2) CoT monitoring is a promising mitigation for future scheming

As models advance, a key AI safety concern is deceptive alignment / "scheming" – where AI might covertly pursue unintended goals. Our paper "Evaluating Frontier Models for Stealth and Situational Awareness" assesses whether current models can scheme. arxiv.org/abs/2505.01420

July 8, 2025 at 1:35 PM

Can frontier models hide secret information and reasoning in their outputs?

We find early signs of steganographic capabilities in current frontier models, including Claude, GPT, and Gemini. 🧵

July 4, 2025 at 3:34 PM

Super excited this giant paper outlining our technical approach to AGI safety and security is finally out!

No time to read 145 pages? Check out the 10-page extended abstract at the beginning of the paper.

April 4, 2025 at 2:27 PM

Reposted by David Lindner

We are excited to release a short course on AGI safety! The course offers a concise and accessible introduction to AI alignment problems and our technical / governance approaches, consisting of short recorded talks and exercises (75 minutes total). deepmindsafetyresearch.medium.com/1072adb7912c

Introducing our short course on AGI safety

We are excited to release a short course on AGI safety for students, researchers and professionals interested in this topic. The course…

deepmindsafetyresearch.medium.com

February 14, 2025 at 4:06 PM

Check out this great post by Rohin Shah about our team and what we’re looking for in candidates: www.alignmentforum.org/posts/wqz5CR...

In particular: we're looking for strong ML researchers and engineers and you do not need to be an AGI safety expert

Want to join one of the best AI safety teams in the world?

We're hiring at Google DeepMind! We have open positions for research engineers and research scientists in the AGI Safety & Alignment and Gemini Safety teams.

Locations: London, Zurich, New York, Mountain View and SF

February 18, 2025 at 4:43 PM

Want to join one of the best AI safety teams in the world?

We're hiring at Google DeepMind! We have open positions for research engineers and research scientists in the AGI Safety & Alignment and Gemini Safety teams.

Locations: London, Zurich, New York, Mountain View and SF

February 10, 2025 at 6:55 PM

Reposted by David Lindner

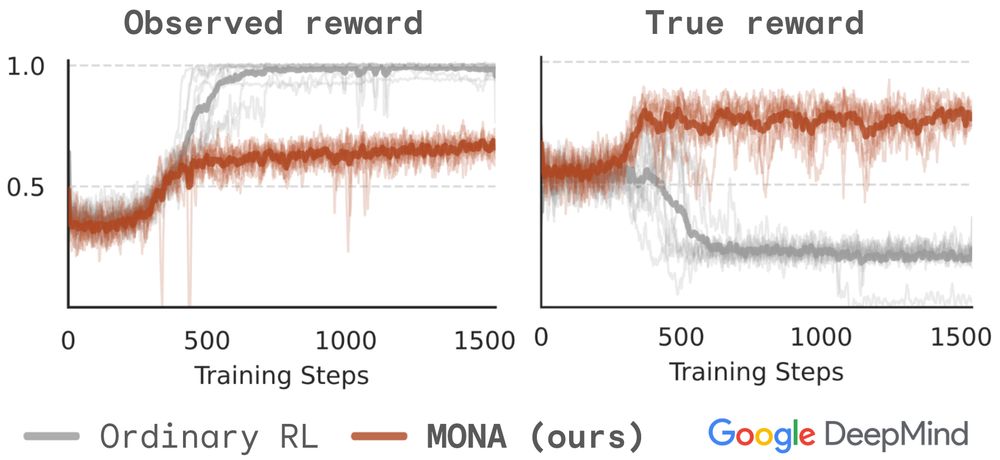

By default, LLM agents with long action sequences use early steps to undermine your evaluation of later steps; a big alignment risk.

Our new paper mitigates this, keeps the ability for long-term planning, and doesn't assume you can detect the undermining strategy. 👇

New Google DeepMind safety paper! LLM agents are coming – how do we stop them finding complex plans to hack the reward?

Our method, MONA, prevents many such hacks, *even if* humans are unable to detect them!

Inspired by myopic optimization but with better performance – details in 🧵

January 23, 2025 at 3:47 PM

New Google DeepMind safety paper! LLM agents are coming – how do we stop them finding complex plans to hack the reward?

Our method, MONA, prevents many such hacks, *even if* humans are unable to detect them!

Inspired by myopic optimization but with better performance – details in 🧵

January 23, 2025 at 3:33 PM

Stop by SoLaR workshop at NeurIPS today to see Kai present the paper!

New paper on evaluating instrumental self-reasoning ability in frontier models 🤖🪞 We propose a suite of agentic tasks that are more diverse than prior work and give us a more representative picture of how good models are at, e.g., self-modification and embedded reasoning.

December 14, 2024 at 3:36 PM

Reposted by David Lindner

MISR eval reveals frontier language agents can self-reason to modify their own settings & use tools, but they fail at harder tests involving social reasoning and knowledge seeking. Opaque reasoning remains difficult. arxiv.org/abs/2412.03904

December 10, 2024 at 11:16 AM

Reposted by David Lindner

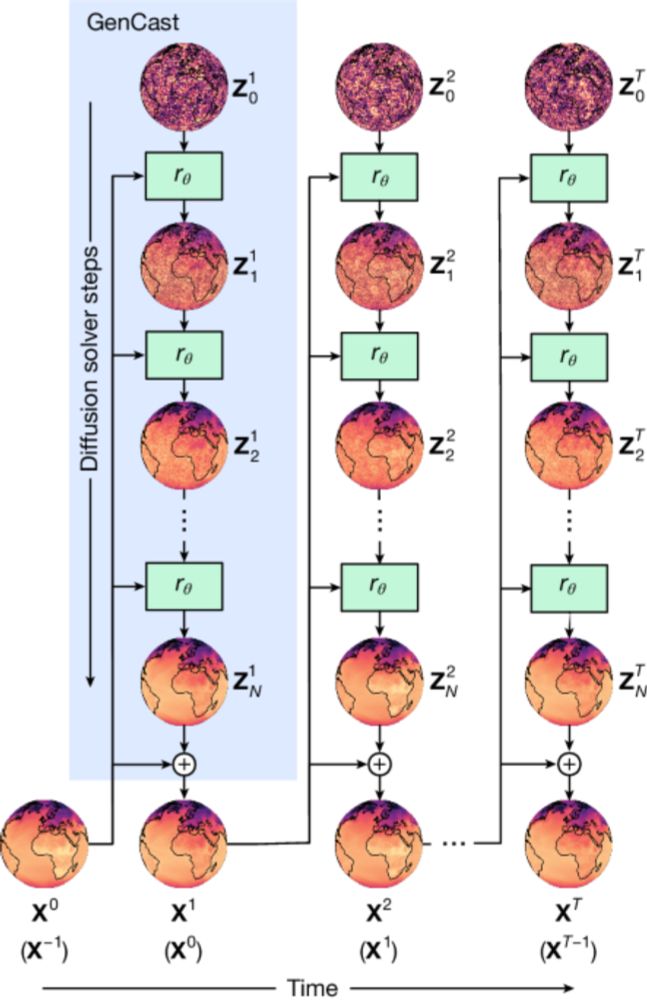

So excited to share our Google DeepMind team's new Nature paper on GenCast, an ML-based probabilistic weather forecasting model: www.nature.com/articles/s41...

It represents a substantial step forward in how we predict weather and assess the risk of extreme events. 🌪️🧵

Probabilistic weather forecasting with machine learning - Nature

GenCast, a probabilistic weather model using artificial intelligence for weather forecasting, has greater skill and speed than the top operational medium-range weather forecast in the world and provid...

www.nature.com

December 10, 2024 at 11:00 AM

New paper on evaluating instrumental self-reasoning ability in frontier models 🤖🪞 We propose a suite of agentic tasks that are more diverse than prior work and give us a more representative picture of how good models are at, e.g., self-modification and embedded reasoning.

December 6, 2024 at 4:38 PM

Reposted by David Lindner

Just a heads up to everyone: @deep-mind.bsky.social is unfortunately a fake account and has been reported. Please do not follow it or repost anything from it.

November 25, 2024 at 11:24 PM
