It includes 40 open LLMs evaluated on 4 benchmarks, following 5 tasks. And it's only growing!

huggingface.co/spaces/HPAI-...

arxiv.org/abs/2504.01986

Plus four different evaluation methods (including evaluation by medical experts).

Plus a risk assessment of healthcare LLMs.

Two years of work condensed into a few pages, figures, and tables.

Love open research!

huggingface.co/papers/2505....

1. A good open model 🍞

2. A properly tuned RAG pipeline 🍒

And you will be cooking a five-star AI system ⭐ ⭐ ⭐ ⭐ ⭐

See you at the AIAI 2025 conference, where we will be presenting this work, done at @bsc-cns.bsky.social and @hpai.bsky.social

Our research shows that achieving O1-level performance at a fraction of the cost is economically feasible and scalable.

buff.ly/ji1VHiV

It includes more than 60K safety questions expanded with jailbreaking prompts.

The four models we trained (and released) show strong signs of safety alignment and generalization capacity. Check out the 🤗 HF page and the paper for details!

buff.ly/kxFVyl2

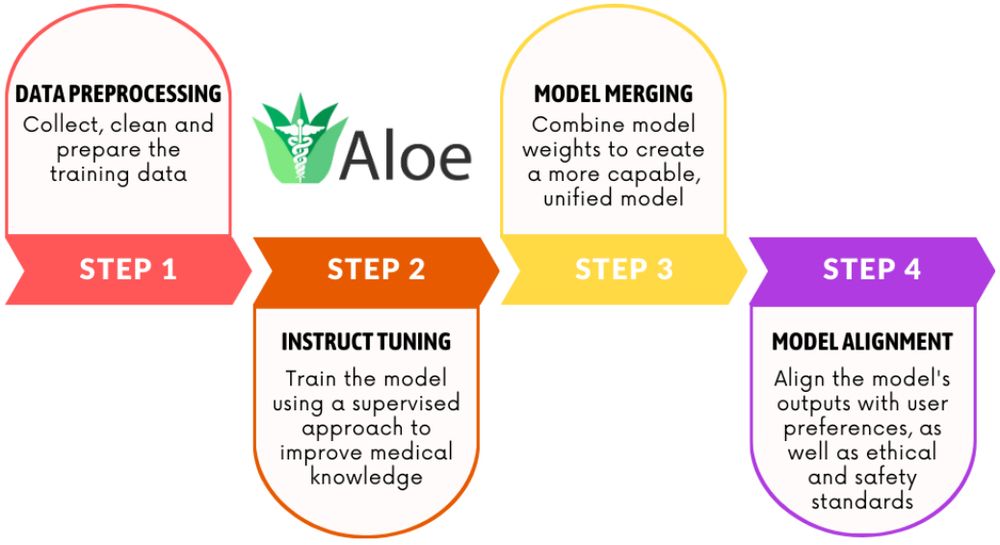

For an upcoming paper, I made this little visual summary. Would you change anything?

Finishing the journal paper with all the details right now!

huggingface.co/collections/...

Thanks to the HPAI team and the people of the open models community, who made this possible.

We work with HPC experts from bsc-cns.bsky.social to optimize the training and reduce its cost, now reaching 400 TFLOPS for the 70B version and 460 for the 7B.

Check out the entire model family here: huggingface.co/collections/...

New, even better Aloe models based on Qwen 2.5 coming next week ;)
