It includes 40 open LLMs evaluated on 4 benchmarks, following 5 tasks. And it's only growing!

huggingface.co/spaces/HPAI-...

arxiv.org/abs/2504.01986

Plus four different evaluation methods (including medical experts).

Plus a risk assessment of healthcare LLMs.

Two years of work condensed in a few pages, figures and tables.

Love open research!

huggingface.co/papers/2505....

Our active and young research team needs someone to help sustain and improve our services, including HPC clusters, automated pipelines, artifact management and much more!

Are you up for the challenge?

www.bsc.es/join-us/job-...

A random forest using morphokinetic features of embryo evolution visually annotated by experts, and a CNN directly using static images get similar performance. Separately AND together.

I find it surprising...

Being chased by dinosaurs and writing grants. Same thing.

Our research shows it is economically feasible and scalable to achieve O1-level performance at a fraction of the cost.

buff.ly/ji1VHiV

It includes +60K safety questions expanded with jailbreaking prompts.

The four models trained (and released) show strong signs of safety alignment and generalization capacity. Check out the 🤗 HF page and the paper for details!

buff.ly/kxFVyl2

Are you in the Chip Design or EDA business? Wanna know which LLMs are best for the task? By integrating 4 benchmarks, TuRTLe evaluates:

* Syntax

* Functionality

* Synthesizability

* Power, Performance and Area metrics

huggingface.co/spaces/HPAI-...

We took the MIR exams ('20-'24) to test open LLMs. Llama 3.1-based models, like Aloe, reach +80 in accuracy.

DeepSeek R1 reaches +88. Boosted by a RAG system, 92.

buff.ly/4bbbXMw

buff.ly/4hLrhBV

Skilled warriors adapted to harsh environments, taking over a society they don't want to adopt.

* All LLM evals are wrong, some are slightly useful.

* Goodhart's law. All the time. Everywhere.

* Do lots of different evals and hope for the best.

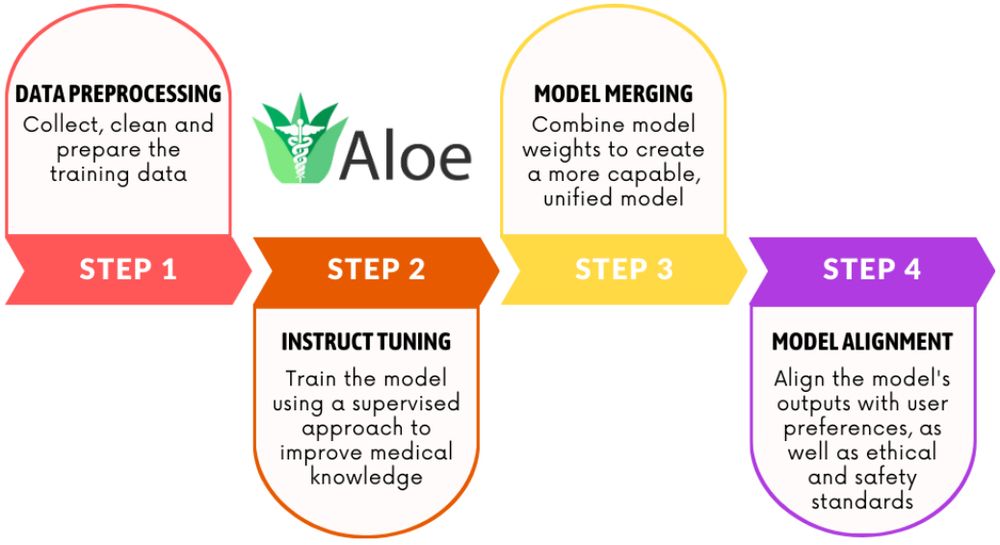

For an upcoming paper, I made this little visual summary. Would you change anything?

10 days to go, and an award to be decided!

Submit your paper and join us: sites.google.com/view/cadl2025/

Submit your paper and see you in Germany :)

Deadline: 28 Feb

Topics:

* Energy-efficient AI

* Large-scale pre-training

* Distributed learning approaches

* Model optimization strategies

"Don't be a hero"

Andrej Karpathy

"Attention is all you need"

Vaswani et al.

"We Have No Moat

And neither does OpenAI"

Google Engineer

"If you can bear to hear the truth you’ve spoken

Twisted by knaves to make a trap for fools,

Or watch the things you gave your life to, broken,

And stoop and build ’em up with worn-out tools"