Daniel Scalena

@danielsc4.it

PhDing @unimib 🇮🇹 & @gronlp.bsky.social 🇳🇱, interpretability et similia

danielsc4.it

danielsc4.it

Takeaway: EAGer shows we can be MORE efficient & MORE effective by letting models focus compute where it matters most.

📄Paper: arxiv.org/abs/2510.11170

💻Code: github.com/DanielSc4/EA...

✨Huge thanks to my mentors and collaborators @leozotos.bsky.social E. Fersini @malvinanissim.bsky.social A. Üstün

📄Paper: arxiv.org/abs/2510.11170

💻Code: github.com/DanielSc4/EA...

✨Huge thanks to my mentors and collaborators @leozotos.bsky.social E. Fersini @malvinanissim.bsky.social A. Üstün

EAGER: Entropy-Aware GEneRation for Adaptive Inference-Time Scaling

With the rise of reasoning language models and test-time scaling methods as a paradigm for improving model performance, substantial computation is often required to generate multiple candidate sequenc...

arxiv.org

October 16, 2025 at 12:07 PM

Takeaway: EAGer shows we can be MORE efficient & MORE effective by letting models focus compute where it matters most.

📄Paper: arxiv.org/abs/2510.11170

💻Code: github.com/DanielSc4/EA...

✨Huge thanks to my mentors and collaborators @leozotos.bsky.social E. Fersini @malvinanissim.bsky.social A. Üstün

📄Paper: arxiv.org/abs/2510.11170

💻Code: github.com/DanielSc4/EA...

✨Huge thanks to my mentors and collaborators @leozotos.bsky.social E. Fersini @malvinanissim.bsky.social A. Üstün

Results: Across 3B-20B models, EAGer cuts budget by up to 80%, boosts perf 13% w/o labels & 37% w/ labels on AIME.

As M scales, EAGer consistently:

🚀 Achieves HIGHER Pass@k,

✂️ Uses FEWER tokens than baseline,

🕺 Shifts the Pareto frontier favorably across all tasks.

🧵5/

As M scales, EAGer consistently:

🚀 Achieves HIGHER Pass@k,

✂️ Uses FEWER tokens than baseline,

🕺 Shifts the Pareto frontier favorably across all tasks.

🧵5/

October 16, 2025 at 12:07 PM

Results: Across 3B-20B models, EAGer cuts budget by up to 80%, boosts perf 13% w/o labels & 37% w/ labels on AIME.

As M scales, EAGer consistently:

🚀 Achieves HIGHER Pass@k,

✂️ Uses FEWER tokens than baseline,

🕺 Shifts the Pareto frontier favorably across all tasks.

🧵5/

As M scales, EAGer consistently:

🚀 Achieves HIGHER Pass@k,

✂️ Uses FEWER tokens than baseline,

🕺 Shifts the Pareto frontier favorably across all tasks.

🧵5/

The fun part: EAGer-adapt reallocates saved budget to "saturating" prompts hitting the M cap, no labels needed! – Training & Verification-Free 🚀

Full EAGer uses labels to catch failing prompts, lowering threshold to branch or add sequences. Great for verifiable pipelines!

🧵4/

Full EAGer uses labels to catch failing prompts, lowering threshold to branch or add sequences. Great for verifiable pipelines!

🧵4/

October 16, 2025 at 12:07 PM

The fun part: EAGer-adapt reallocates saved budget to "saturating" prompts hitting the M cap, no labels needed! – Training & Verification-Free 🚀

Full EAGer uses labels to catch failing prompts, lowering threshold to branch or add sequences. Great for verifiable pipelines!

🧵4/

Full EAGer uses labels to catch failing prompts, lowering threshold to branch or add sequences. Great for verifiable pipelines!

🧵4/

EAGer works by monitoring token entropy during generation. High entropy token → It branches to explore new paths (reusing prefixes). Token with low entropy → It continues a single path.

We cap at M sequences/prompt, saving budget on easy ones without regen. Training-free!

🧵3/

We cap at M sequences/prompt, saving budget on easy ones without regen. Training-free!

🧵3/

October 16, 2025 at 12:07 PM

EAGer works by monitoring token entropy during generation. High entropy token → It branches to explore new paths (reusing prefixes). Token with low entropy → It continues a single path.

We cap at M sequences/prompt, saving budget on easy ones without regen. Training-free!

🧵3/

We cap at M sequences/prompt, saving budget on easy ones without regen. Training-free!

🧵3/

Why? Reasoning LLMs shine with CoTs, but full parallel sampling—generating multiple paths per prompt—is inefficient 😤.

It wastes compute on redundant, predictable tokens, esp. for easy prompts. Hard prompts need more exploration but get the same budget. Enter EAGER🧠!

🧵2/

It wastes compute on redundant, predictable tokens, esp. for easy prompts. Hard prompts need more exploration but get the same budget. Enter EAGER🧠!

🧵2/

October 16, 2025 at 12:07 PM

Why? Reasoning LLMs shine with CoTs, but full parallel sampling—generating multiple paths per prompt—is inefficient 😤.

It wastes compute on redundant, predictable tokens, esp. for easy prompts. Hard prompts need more exploration but get the same budget. Enter EAGER🧠!

🧵2/

It wastes compute on redundant, predictable tokens, esp. for easy prompts. Hard prompts need more exploration but get the same budget. Enter EAGER🧠!

🧵2/

📝 Paper: arxiv.org/abs/2505.16612

🔗 Code: github.com/DanielSc4/st...

Thanks to my amazing co-authors:

@gsarti.com , @arianna-bis.bsky.social , Elisabetta Fersini, @malvinanissim.bsky.social

7/7

🔗 Code: github.com/DanielSc4/st...

Thanks to my amazing co-authors:

@gsarti.com , @arianna-bis.bsky.social , Elisabetta Fersini, @malvinanissim.bsky.social

7/7

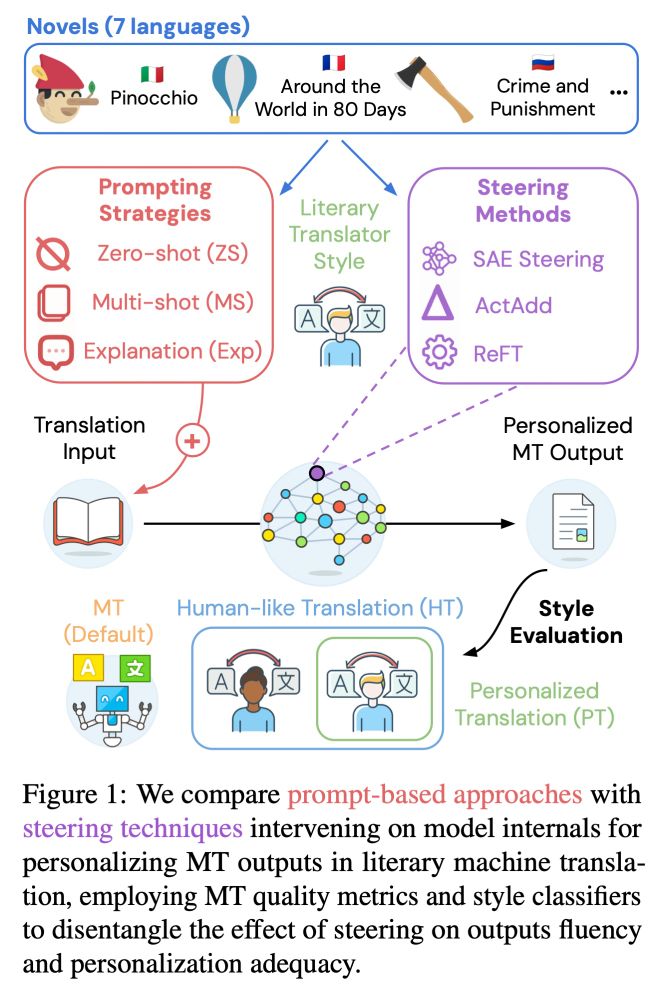

Steering Large Language Models for Machine Translation Personalization

High-quality machine translation systems based on large language models (LLMs) have simplified the production of personalized translations reflecting specific stylistic constraints. However, these sys...

arxiv.org

May 23, 2025 at 12:23 PM

📝 Paper: arxiv.org/abs/2505.16612

🔗 Code: github.com/DanielSc4/st...

Thanks to my amazing co-authors:

@gsarti.com , @arianna-bis.bsky.social , Elisabetta Fersini, @malvinanissim.bsky.social

7/7

🔗 Code: github.com/DanielSc4/st...

Thanks to my amazing co-authors:

@gsarti.com , @arianna-bis.bsky.social , Elisabetta Fersini, @malvinanissim.bsky.social

7/7

🔍 What’s happening in the model?

We find that SAE steering and multi-shot prompting impact internal representations similarly, suggesting insight from user examples are summarized with extra interpretability potential (look at latents) and better efficiency (no long context) 6/

We find that SAE steering and multi-shot prompting impact internal representations similarly, suggesting insight from user examples are summarized with extra interpretability potential (look at latents) and better efficiency (no long context) 6/

May 23, 2025 at 12:23 PM

🔍 What’s happening in the model?

We find that SAE steering and multi-shot prompting impact internal representations similarly, suggesting insight from user examples are summarized with extra interpretability potential (look at latents) and better efficiency (no long context) 6/

We find that SAE steering and multi-shot prompting impact internal representations similarly, suggesting insight from user examples are summarized with extra interpretability potential (look at latents) and better efficiency (no long context) 6/

🌍 Across 7 languages, our SAE-based method matches or outperforms traditional prompting methods! Our method obtains better human-like translations (H) personalization accuracy (P), and maintains translation quality (Comet ☄️ @nunonmg.bsky.social) especially for smaller LLMs. 5/

May 23, 2025 at 12:23 PM

🌍 Across 7 languages, our SAE-based method matches or outperforms traditional prompting methods! Our method obtains better human-like translations (H) personalization accuracy (P), and maintains translation quality (Comet ☄️ @nunonmg.bsky.social) especially for smaller LLMs. 5/

💡 We compare prompting (zero and multi-shot + explanations) and inference-time interventions (ActAdd, REFT and SAEs).

Following SpARE (@yuzhaouoe.bsky.social @alessiodevoto.bsky.social), we propose ✨ contrastive SAE steering ✨ with mutual info to personalize literary MT by tuning latent features 4/

Following SpARE (@yuzhaouoe.bsky.social @alessiodevoto.bsky.social), we propose ✨ contrastive SAE steering ✨ with mutual info to personalize literary MT by tuning latent features 4/

May 23, 2025 at 12:23 PM

💡 We compare prompting (zero and multi-shot + explanations) and inference-time interventions (ActAdd, REFT and SAEs).

Following SpARE (@yuzhaouoe.bsky.social @alessiodevoto.bsky.social), we propose ✨ contrastive SAE steering ✨ with mutual info to personalize literary MT by tuning latent features 4/

Following SpARE (@yuzhaouoe.bsky.social @alessiodevoto.bsky.social), we propose ✨ contrastive SAE steering ✨ with mutual info to personalize literary MT by tuning latent features 4/

📈 But can models recognize and replicate individual translator styles?:

✓ Classifiers can find styles with high acc. (humans kinda don’t)

✓ Multi-shot prompting boosts style a lot

✓ We can detect strong style traces in activations (esp. mid layers) 3/

✓ Classifiers can find styles with high acc. (humans kinda don’t)

✓ Multi-shot prompting boosts style a lot

✓ We can detect strong style traces in activations (esp. mid layers) 3/

May 23, 2025 at 12:23 PM

📈 But can models recognize and replicate individual translator styles?:

✓ Classifiers can find styles with high acc. (humans kinda don’t)

✓ Multi-shot prompting boosts style a lot

✓ We can detect strong style traces in activations (esp. mid layers) 3/

✓ Classifiers can find styles with high acc. (humans kinda don’t)

✓ Multi-shot prompting boosts style a lot

✓ We can detect strong style traces in activations (esp. mid layers) 3/

📘 Literary translation isn't just about accuracy, but also creatively conveying meaning across languages. But LLMs prompted for MT are very literal. Prompting & steering to the rescue!

Can we personalize LLM’s MT when few examples are available, without further tuning? 🔍 2/

Can we personalize LLM’s MT when few examples are available, without further tuning? 🔍 2/

May 23, 2025 at 12:23 PM

📘 Literary translation isn't just about accuracy, but also creatively conveying meaning across languages. But LLMs prompted for MT are very literal. Prompting & steering to the rescue!

Can we personalize LLM’s MT when few examples are available, without further tuning? 🔍 2/

Can we personalize LLM’s MT when few examples are available, without further tuning? 🔍 2/