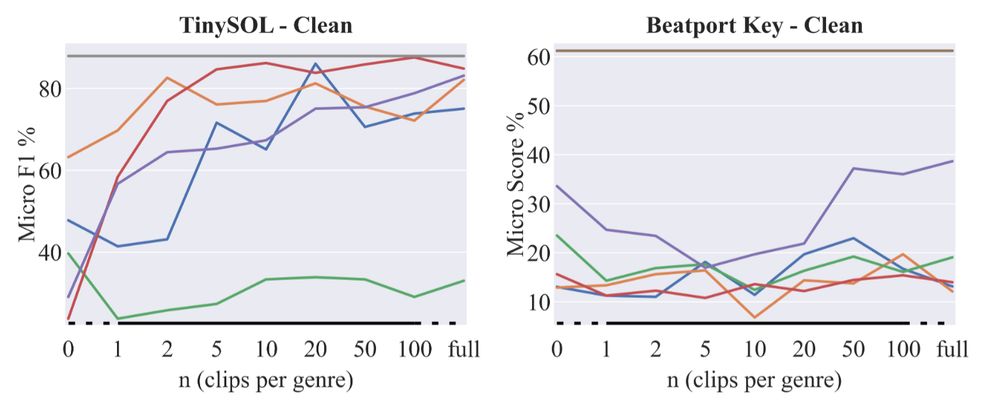

(MusiCNN 🟠, VGG 🔵, AST 🟢, CLMR 🔴, TMAE 🟣, MFCC 🩶)

(MFCCs 🩶, Chroma 🤎)

(MusiCNN 🟠, VGG 🔵)

(MusiCNN 🟠, VGG 🔵, AST 🟢, CLMR 🔴, TMAE 🟣, MFCC 🩶)
