https://intellisec.de/chris

🌐 https://eurosp2026.ieee-security.org/cfw.html

⏱️ Deadline: Oct 2̶4̶t̶h̶ 30th AoE

📍Lisbon, PT

EuroS&P is the premier European forum for security & privacy research. The main conference is accompanied by a series of workshops. Be part of it! 😎

🌐 https://eurosp2026.ieee-security.org/cfw.html

⏱️ Deadline: Oct 24th AoE

📍Lisbon, PT

🌐 https://intellisec.de/research/adv-code

(1/3)

🗞️ https://intellisec.de/pubs/2025-aaai.pdf

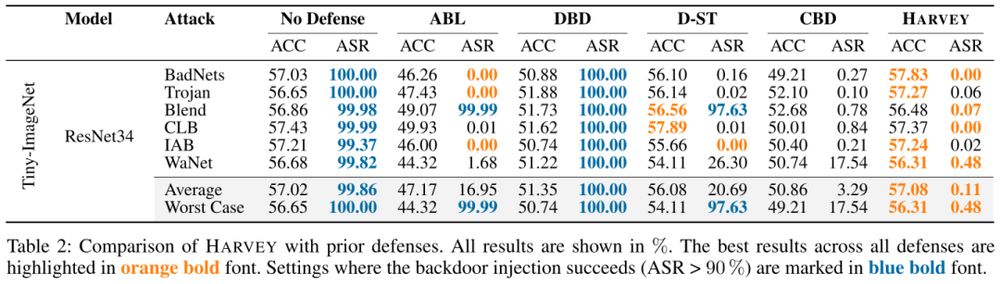

💻️ https://intellisec.de/research/harvey

💻️ xaisec.org/makrut

🗞️ intellisec.de/pubs/2024-ac...