Meet WeatherWeaver, a video model for controllable synthesis and removal of diverse weather effects — such as 🌧️ rain, ☃️ snow, 🌁 fog, and ☁️ clouds — for any input video.

Come visit #337 and see how we make it snow in Hawaii 🏝️❄️⛄

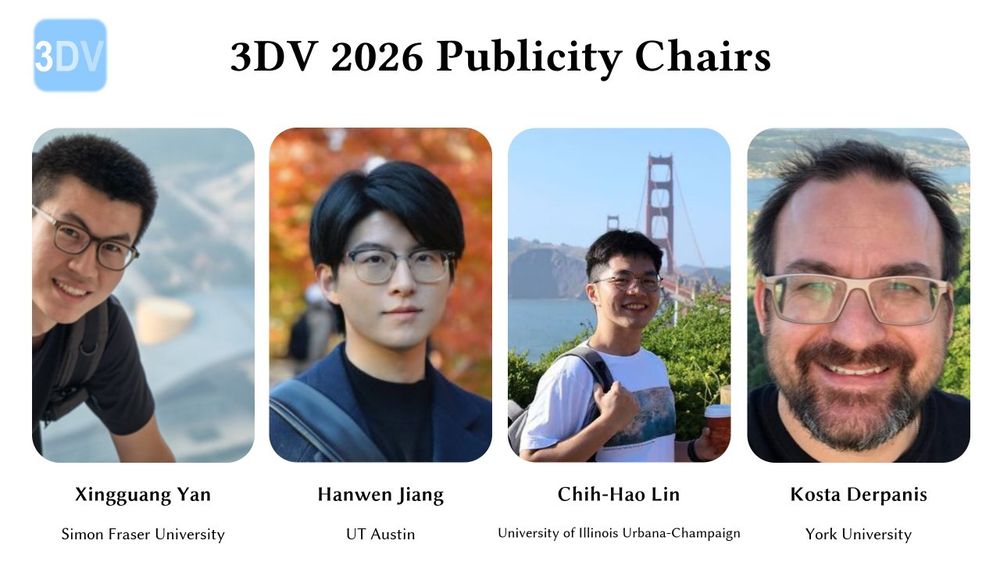

@hanwenjiang1 @yanxg.bsky.social @chih-hao.bsky.social @csprofkgd.bsky.social

We’ll keep the 3DV conversation alive: posting updates, refreshing the website, and listening to your feedback.

Got questions or ideas? Tag @3dvconf.bsky.social anytime!

📝Publication Chairs ensure accepted papers are properly published in the conference proceedings

🔍Research Interaction Chairs encourage engagement by spotlighting exceptional research in 3D vision

If you're pushing the boundaries, please consider submitting your work to #3DV2026 in Vancouver! (Deadline: Aug. 18, 2025)

👁️IRIS estimates accurate surface material, spatially-varying HDR lighting, and camera response function given a set of LDR images! It enables realistic, view-consistent, and controllable relighting and object insertion.

(links in 🧵)

📝 Paper Deadline: Aug 18

🎥 Supplementary: Aug 21

🔗 3dvconf.github.io/2026/call-fo...

📅 Conference Date: Mar 20–23, 2026

🌆 Location: Vancouver 🇨🇦

🚀 Showcase your latest research to the world!

#3DV2026 #CallForPapers #Vancouver #Canada

We repurpose Score Distillation Sampling (SDS) for audio, turning any pretrained audio diffusion model into a tool for diverse tasks, including source separation, impact synthesis & more.

🎧 Demos, audio examples, paper: research.nvidia.com/labs/toronto...

🧵below

Meet WeatherWeaver, a video model for controllable synthesis and removal of diverse weather effects — such as 🌧️ rain, ☃️ snow, 🌁 fog, and ☁️ clouds — for any input video.

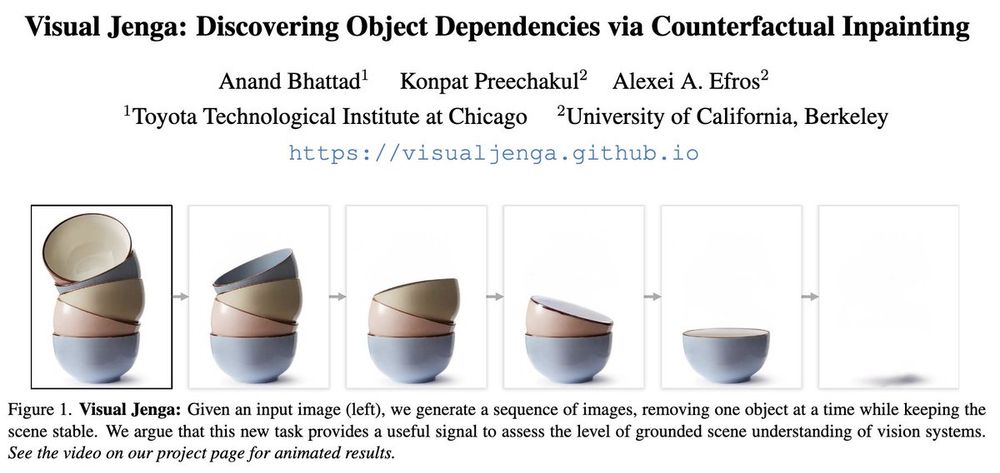

Models today can label pixels and detect objects with high accuracy. But does that mean they truly understand scenes?

Super excited to share our new paper and a new task in computer vision: Visual Jenga!

📄 arxiv.org/abs/2503.21770

🔗 visualjenga.github.io

This is a cool project led by Hao-Yu, and we will present it at #3DV 2025!

Check out our #3DV2025 UrbanIR paper, led by @chih-hao.bsky.social, which does exactly this.

🔗: urbaninverserendering.github.io

It’s a super cool project led by the amazing Chih-Hao!

@chih-hao.bsky.social is a rising star in 3DV! Follow him!

Learn more here👇

We introduce UrbanIR, a neural rendering framework for 💡relighting, 🌃nighttime simulation, and 🚘 object insertion—all from a single video of urban scenes!

👁️ IRIS: Inverse Rendering of Indoor Scenes

IRIS estimates accurate material, lighting, and camera response functions given a set of LDR images, enabling photorealistic and view-consistent relighting and object insertion.
