unique: trained on AMD GPUs

focus is on long context & low hallucination rate — imo this is a growing genre of LLM that enables new search patterns

huggingface.co/Motif-Techno...

best model performance-per-dollar, by far

if GPT-4 brought mixture of experts (MoE), GPT-5 brought mixture of models (the router)

open.substack.com/pub/swyx/p/g...

brb 👀👀👀👀👀👀

Anthropic just dropped this paper. They can steer models quite effectively, and even detect training data that elicits a certain (e.g. evil) persona

arxiv.org/abs/2507.21509

"when they [linguists] say things like, "These things don't understand anything, they're just a statistical trick," they don't actually have a model of what understanding is...if you ask what's the best model we have of understanding, it's these large language models."

This research got cited today in a column by the Financial Times:

By Akarsh Kumar, Jeff Clune, Joel Lehman, Ken Stanley

Paper: arxiv.org/abs/2505.11581

www.youtube.com/watch?v=o1q6...

Is an art, like everything else.

I do it exceptionally well.’

From 'Lady Lazarus', which appears in Sylvia Plath's Ariel.

Yet Roman workers still found ways to resist exploitation through strikes and other forms of collective action.

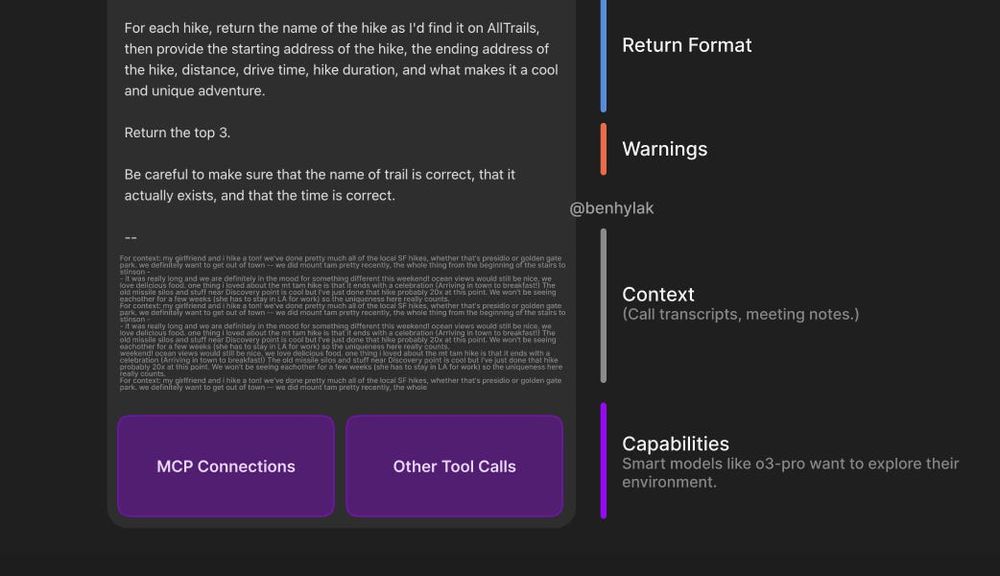

“The plan o3 gave us was plausible, reasonable; but the plan o3 Pro gave us was specific and rooted enough that it actually changed how we are thinking about our future.”

this is a very good article, read it

www.latent.space/p/o3-pro

It's basically the secret missing manual for Claude 4, it's fascinating!

simonwillison.net/2025/May/25/...

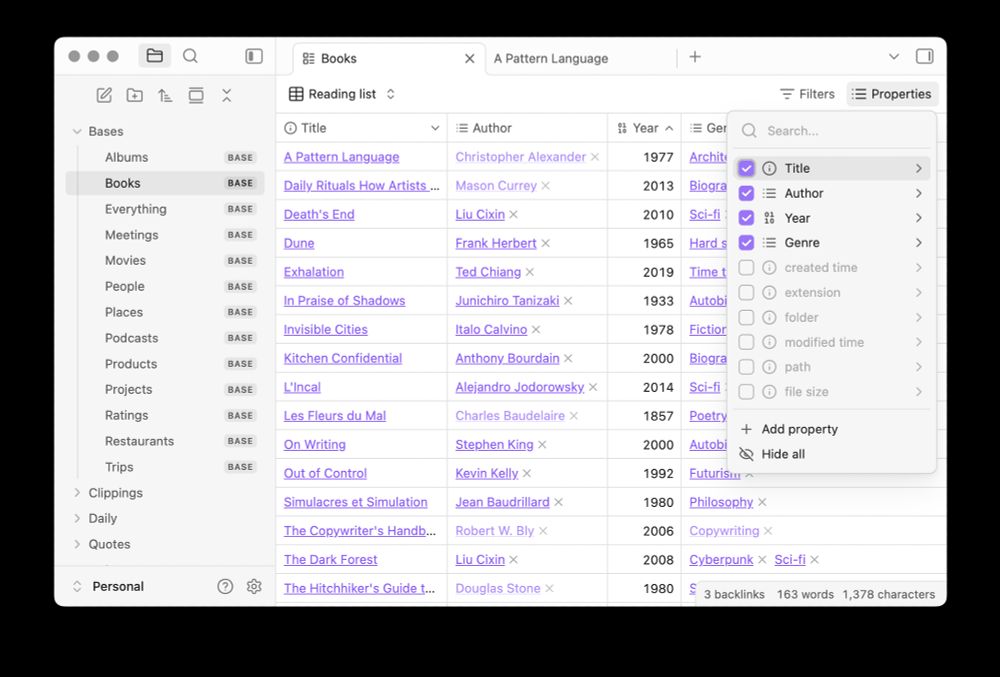

Bases are now available in Obsidian 1.9.0 for early access users.

The film will premiere at this year's Cannes Film Festival and be released in theaters on May 30.

OpenAI, Anthropic, and Perplexity did not respond to interview requests. I wonder why?
