ai-scientific-discovery.github.io

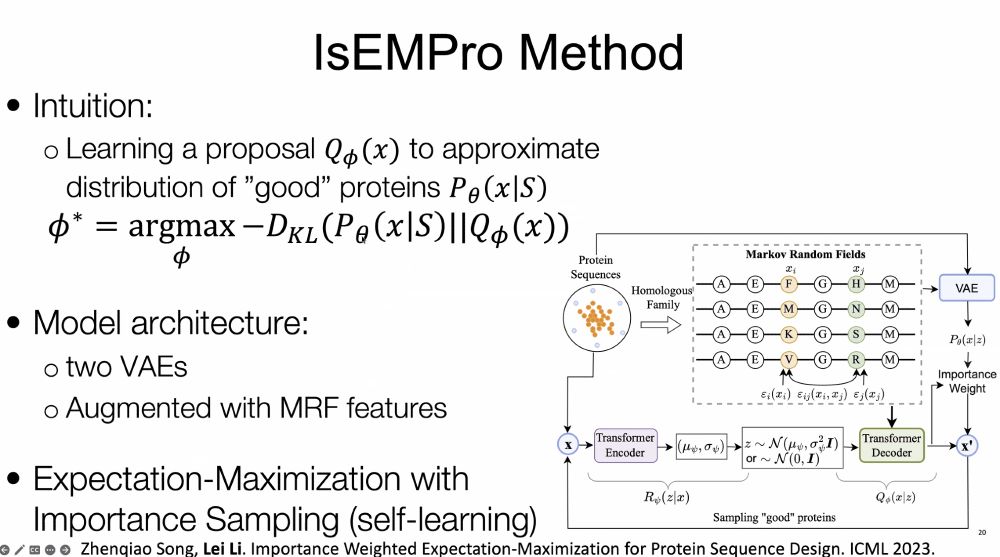

The analogy between language and molecule generation is interesting. Also, it is nice to see Markov random fields after a long stretch of LLM papers!

ai-scientific-discovery.github.io

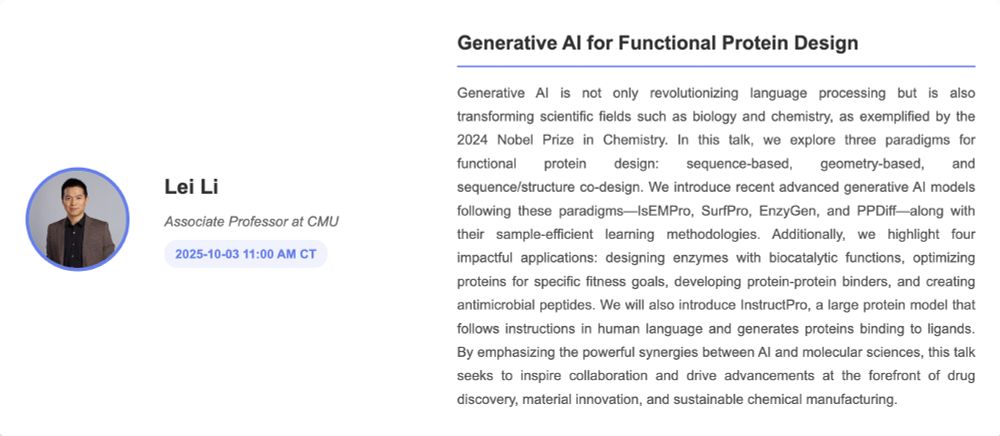

🧪 Generative AI for Functional Protein Design 🤖

#artificialintelligence #scientificdiscovery

ai-scientific-discovery.github.io

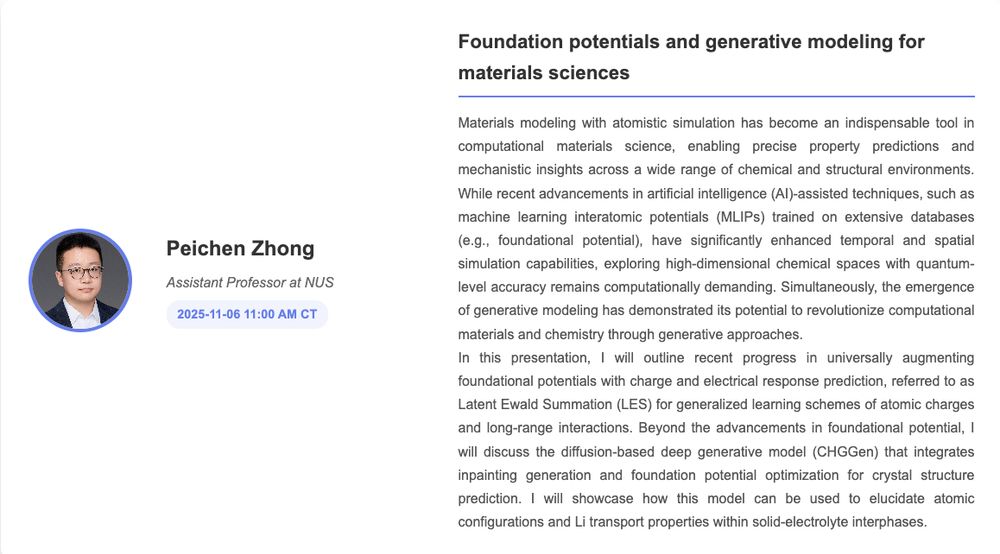

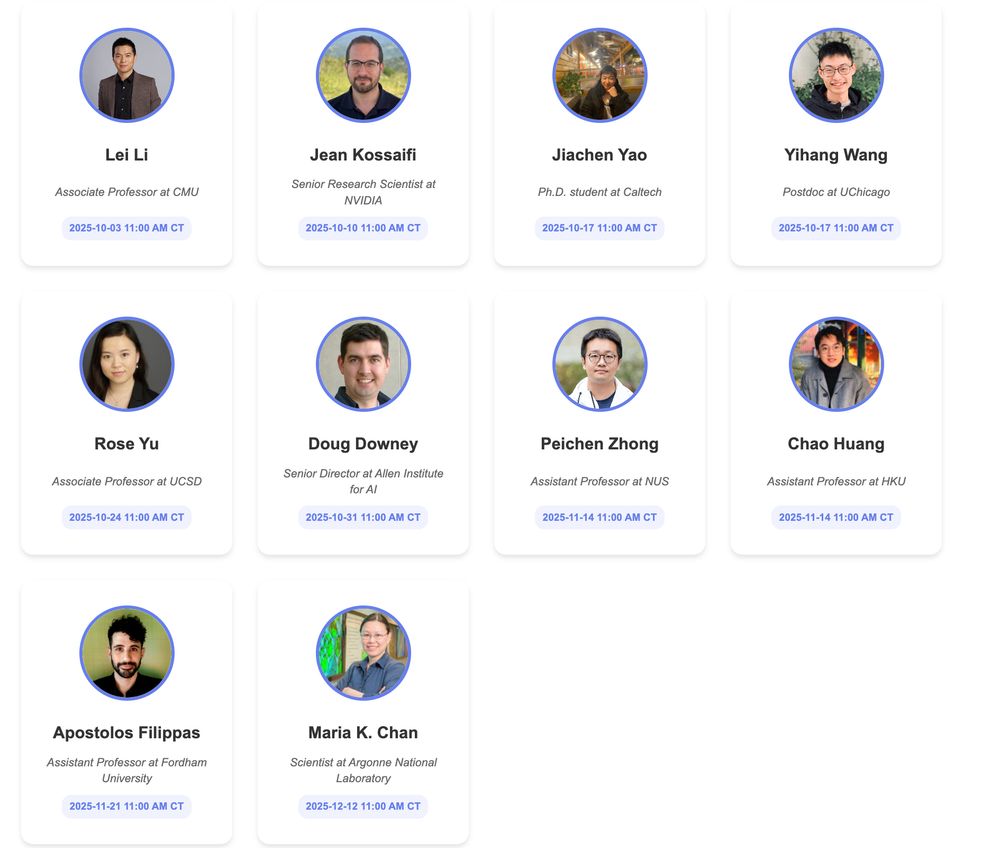

This series will dive into how AI is accelerating research, enabling breakthroughs, and shaping the future of science across disciplines.

ai-scientific-discovery.github.io

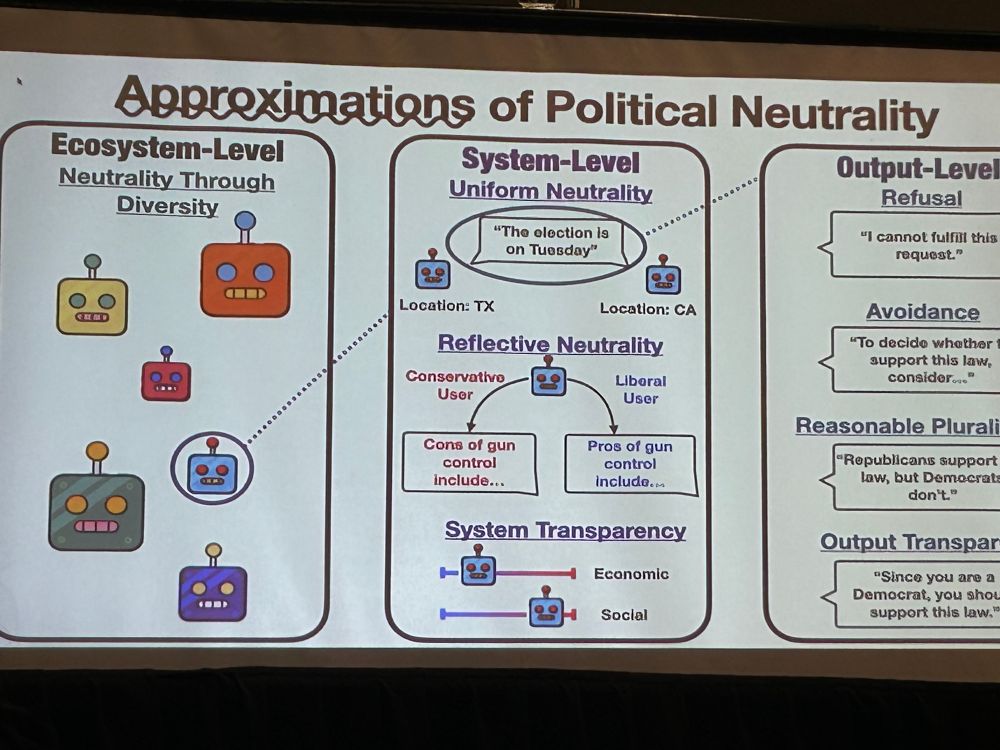

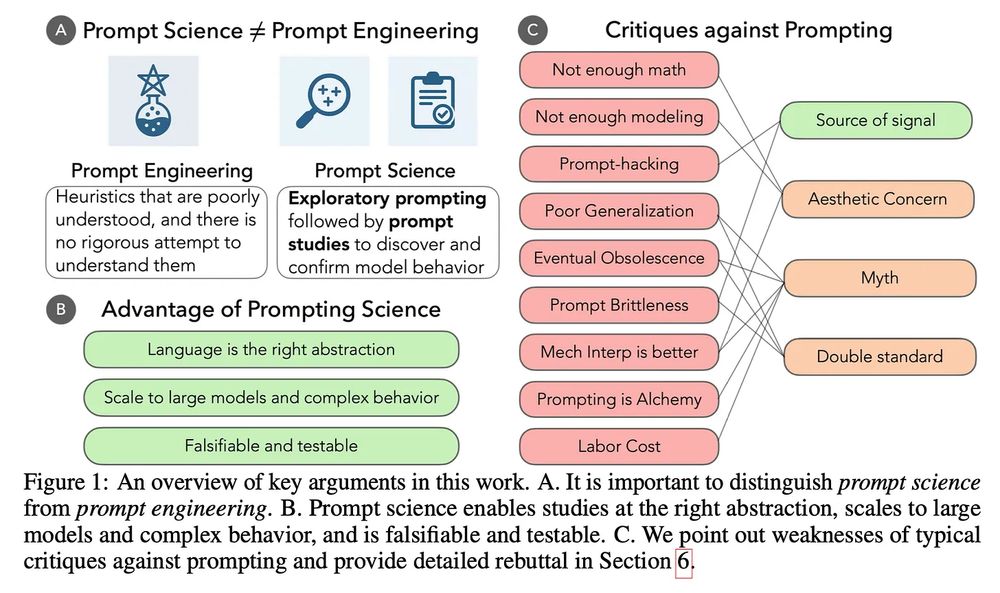

This is holding us back. 🧵 and a new paper with @ari-holtzman.bsky.social.

It's a bit like predicting chronological age to measure biological age.

If workload and other shocks affect fatigue, and that's what affects note-writing...

Predicting workload gets you fatigue for free!

Tired physician notes turn out to be highly predictable too.

So LLM notes might LOOK fine…

…but encode the same subtle problems as fatigued human writing.

For example: Yield of testing for heart attack was *far lower*

(Past studies of fatigue show little impact on patients—but they looked only at schedules: mental state is hard to measure!)

- Write more *predictable* notes (i.e., an LLM can easily predict the next word)

- Use fewer *insight* words ("I think," "believe")

- Use more *certainty* words ("clear," "definite")

- Write less *complex* sentences
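
The four signals in the list above could be scored per note roughly as follows. This is a minimal sketch under stated assumptions, not the paper's pipeline: "predictability" is approximated by perplexity computed from per-token probabilities that some language model is assumed to supply (the probabilities below are made up), the tiny `INSIGHT_WORDS` and `CERTAINTY_WORDS` sets are hypothetical stand-ins for whatever word categories the authors actually used, and average words per sentence is an assumed, crude proxy for sentence complexity.

```python
import math
import re

# Hypothetical mini-lexicons; real analyses typically rely on curated dictionaries.
INSIGHT_WORDS = {"think", "believe", "consider", "suspect"}
CERTAINTY_WORDS = {"clear", "clearly", "definite", "definitely", "certain"}


def perplexity(token_probs):
    """exp(-mean log p) over tokens; lower means the text was easier to predict."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    mean_log_p = sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(-mean_log_p)


def word_rate(text, lexicon):
    """Fraction of words in `text` that appear in `lexicon` (case-insensitive)."""
    words = re.findall(r"[a-z']+", text.lower())
    if not words:
        return 0.0
    return sum(w in lexicon for w in words) / len(words)


def mean_sentence_length(text):
    """Average words per sentence: an assumed, crude proxy for complexity."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    if not sentences:
        return 0.0
    return sum(len(s.split()) for s in sentences) / len(sentences)


def note_features(text, token_probs):
    """Bundle the four illustrative note-level signals into one dict."""
    return {
        "perplexity": perplexity(token_probs),
        "insight_rate": word_rate(text, INSIGHT_WORDS),
        "certainty_rate": word_rate(text, CERTAINTY_WORDS),
        "mean_sentence_length": mean_sentence_length(text),
    }


if __name__ == "__main__":
    note = "Chest pain, clear musculoskeletal cause. I think discharge is reasonable."
    # Made-up per-token probabilities standing in for a language model's output.
    probs = [0.5, 0.25, 0.5, 0.125]
    print(note_features(note, probs))
```

Under this sketch, a "tired" note would show lower perplexity, a lower insight rate, a higher certainty rate, and shorter sentences; which exact measures the paper used is not specified here.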

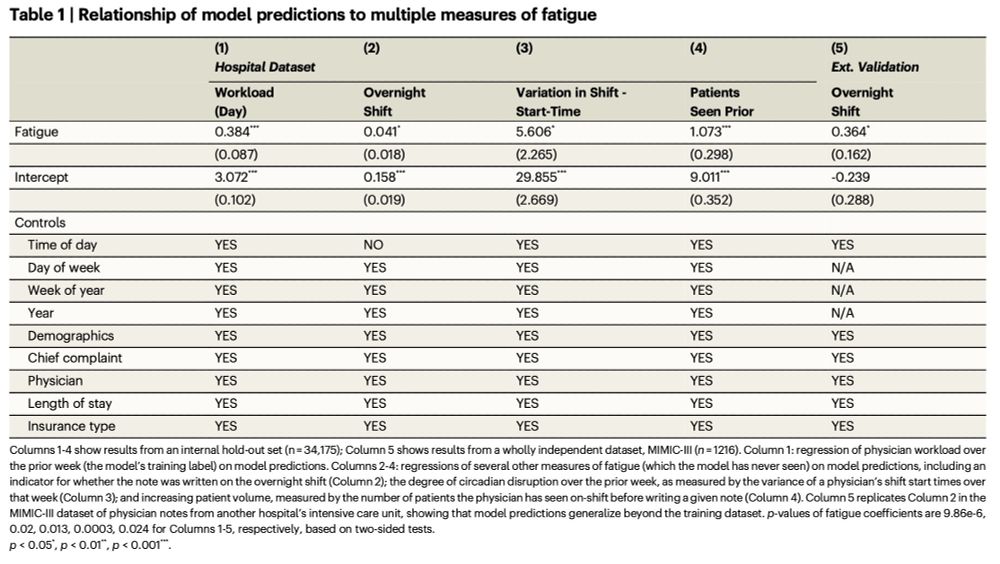

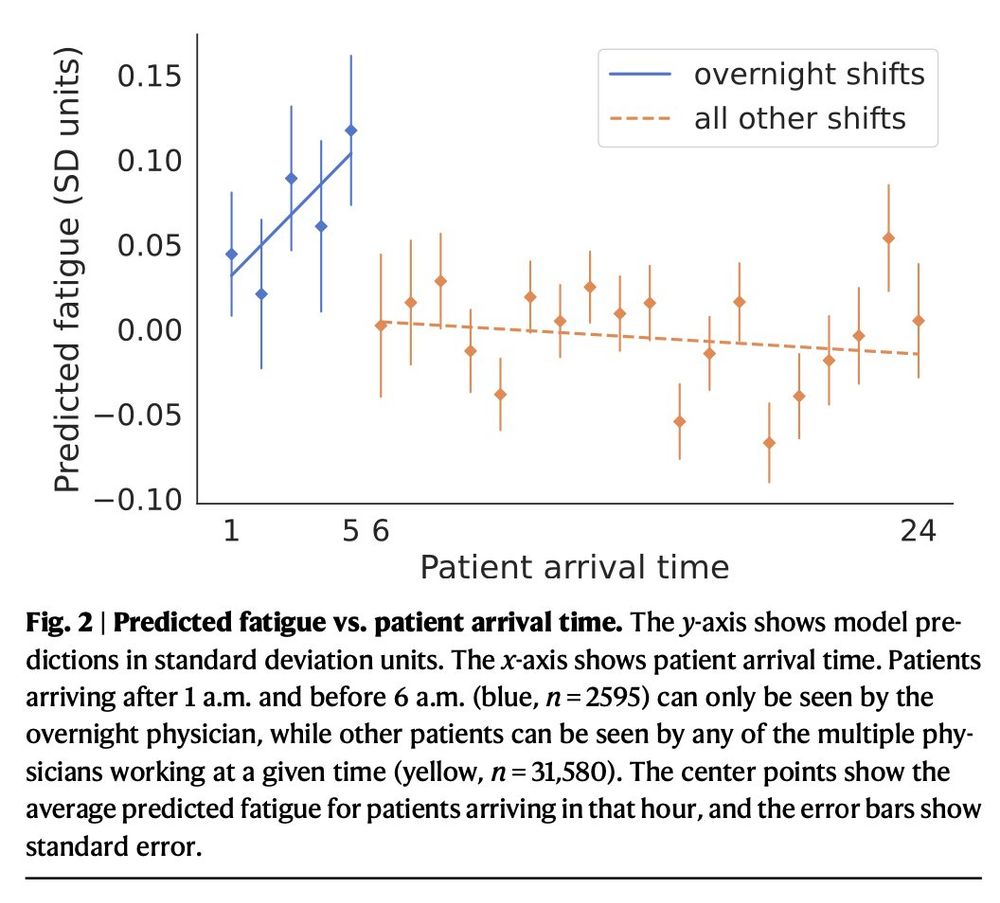

1. Who’s working an overnight shift (in our data + external validation in MIMIC)

2. Who’s working on a disruptive circadian schedule

3. How many patients the doc has seen *on the current shift*

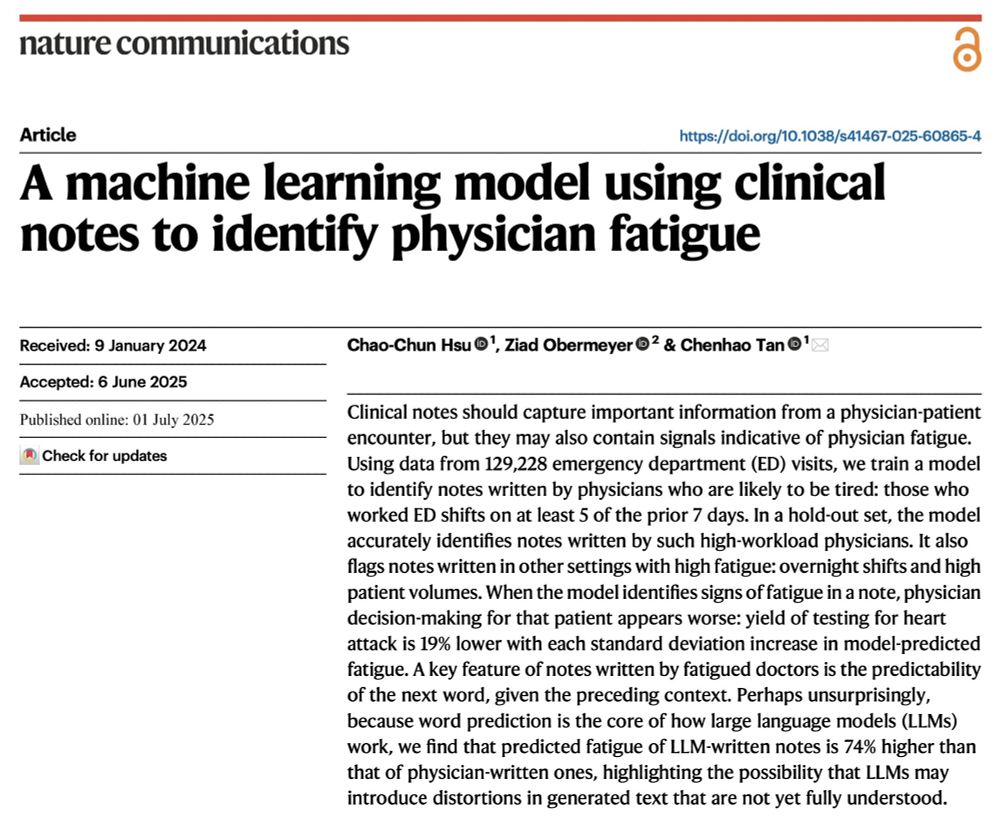

…and detect whether the doc who wrote it was tired.

(Basically: predict quasi-random variation in the number of shifts worked in the past week; the result is a “tiredness detector”.)
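
The "tiredness detector" idea can be illustrated with a deliberately tiny model: fit a regression that predicts how many shifts a note's author worked in the past week from some feature of the note, then use the fitted prediction as a fatigue score for new notes. The reasoning is that if shift counts vary quasi-randomly, whatever the model learns should reflect how workload changes writing rather than which patients were seen. This is a sketch under loud assumptions: a single hypothetical note feature, plain one-variable least squares, and invented toy numbers; the paper's actual model is presumably far richer and works on the full note text.

```python
from statistics import mean


def fit_ols(xs, ys):
    """One-feature ordinary least squares: returns (intercept, slope) for y = a + b*x."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("feature has no variation")
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope


def tiredness_score(model, x):
    """Predicted recent shift count for a new note: higher reads as 'more tired'."""
    intercept, slope = model
    return intercept + slope * x


if __name__ == "__main__":
    # Invented toy data: one hypothetical note feature per note, paired with
    # the number of shifts its author worked in the previous week.
    feature = [1.0, 2.0, 3.0, 4.0]
    shifts_past_week = [2.0, 3.0, 4.0, 5.0]
    model = fit_ols(feature, shifts_past_week)
    print(model, tiredness_score(model, 2.5))
```

The design choice worth noting is that the target is workload, which is observable, rather than fatigue, which is not; the prediction serves as a proxy in the same spirit as predicting chronological age to measure biological age.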

1. Fresh from a week of not working

2. Tired from working too many shifts

@oziadias.bsky.social has been both and thinks that they're different! But can you tell from their notes? Yes, we can! Paper @natcomms.nature.com www.nature.com/articles/s41...
