@mcgill-nlp.bsky.social

@mila-quebec.bsky.social

https://cesare-spinoso.github.io/

w/ Michelle Yang, @sivareddyg.bsky.social, @msonderegger.bsky.social and @dallascard.bsky.social👇(1/12)

Come to our poster at #ACL2025 on July 29th at 4 PM in Level 0, Halls X4/X5. Would love to chat about interpretability, hallucinations, and reasoning :)

@mcgill-nlp.bsky.social @mila-quebec.bsky.social

Paper: arxiv.org/abs/2506.09301 to appear @ #ACL2025 (Main)

Behind the Research of AI:

We look behind the scenes, beyond the polished papers 🧐🧪

If this sounds fun, check out our first "official" episode with the awesome Gauthier Gidel from @mila-quebec.bsky.social:

open.spotify.com/episode/7oTc...

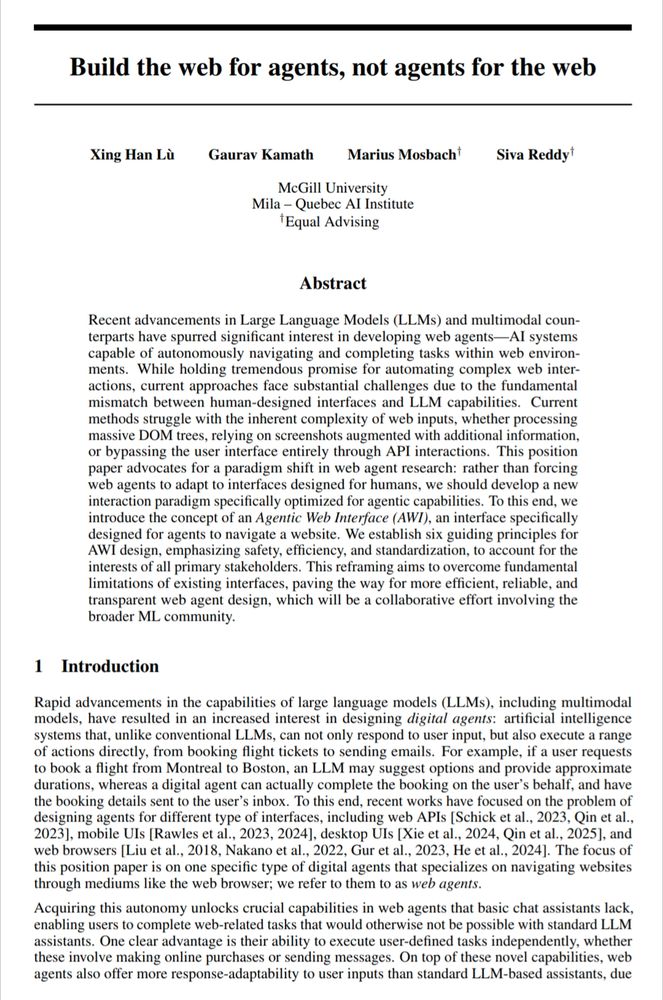

This position paper argues that rather than forcing web agents to adapt to UIs designed for humans, we should develop a new interface optimized for web agents, which we call Agentic Web Interface (AWI).

arxiv.org/abs/2506.10953

@interspeech.bsky.social

A Robust Model for Arabic Dialect Identification using Voice Conversion

Paper 📝 arxiv.org/pdf/2505.24713

Demo 🎙️ https://shorturl.at/rrMm6

#Arabic #SpeechTech #NLProc #AI #Speech #ArabicDialects #Interspeech2025 #ArabicNLP

Our #ACL2025 (Main) paper shows that hallucinations under irrelevant contexts follow a systematic failure mode — revealing how LLMs generalize using abstract classes + context cues, albeit unreliably.

📎 Paper: arxiv.org/abs/2505.22630 1/n

Retrievers need to be aligned too! 🚨🚨🚨

Work done with the wonderful Nick and @sivareddyg.bsky.social

🔗 mcgill-nlp.github.io/malicious-ir/

Thread: 🧵👇

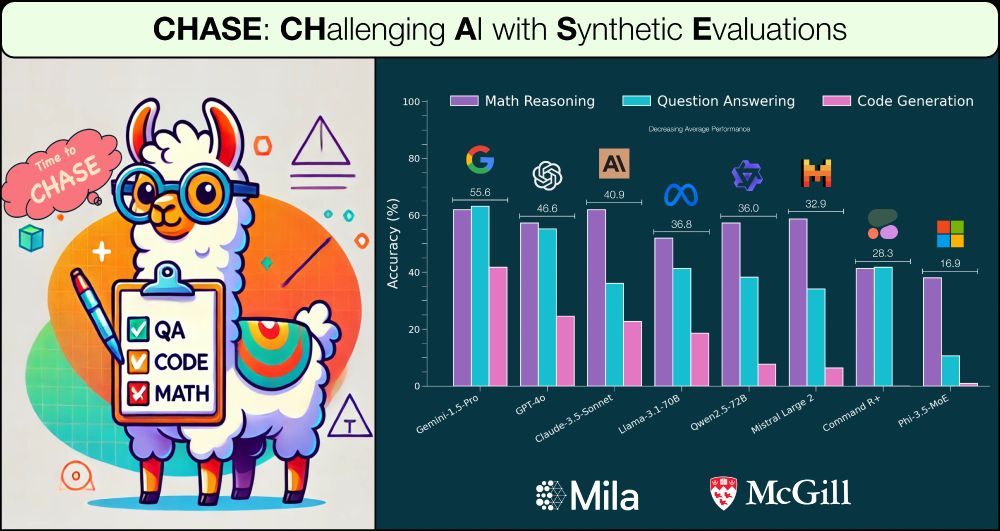

Problems for evaluation? 🤔 Check out our CHASE recipe. This is highly relevant, given that most human-curated datasets are crushed within days.

Work w/ fantastic advisors Dima Bahdanau and @sivareddyg.bsky.social

Thread 🧵:

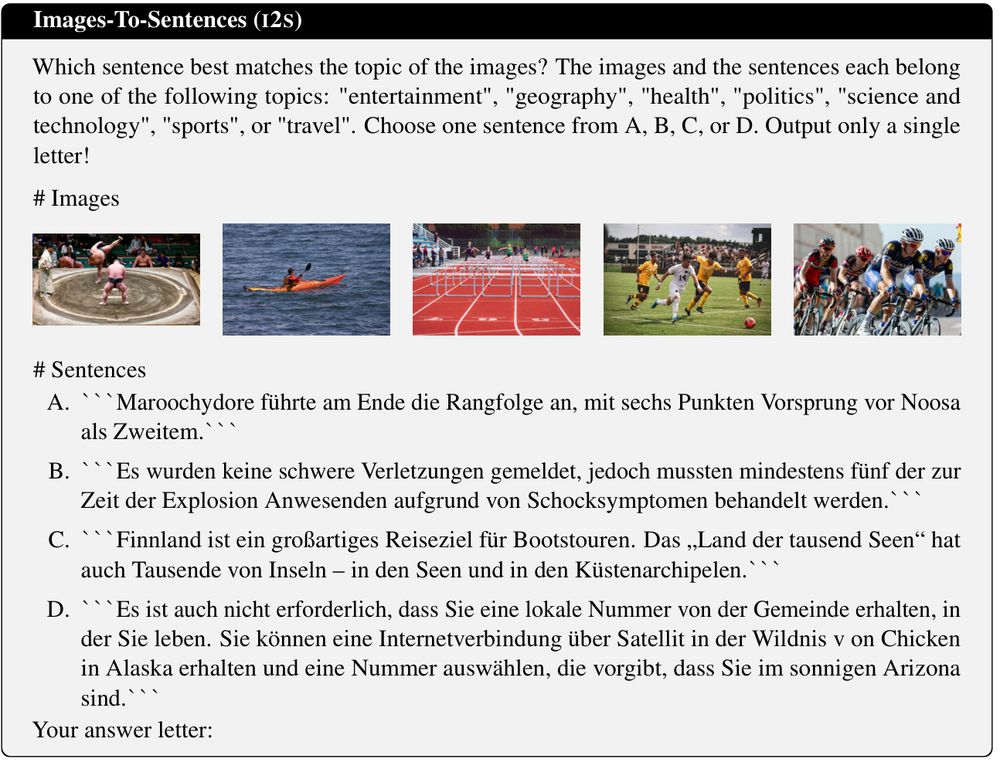

🤔 Task: Given an image (sentence), select the topically matching sentence (image).

Arxiv: arxiv.org/abs/2502.12852

HF: huggingface.co/datasets/Wue...

Details👇

Workshop submission deadline: Feb 17 AoE

More info at heal-workshop.github.io.

Let's complete the list with three more🧵

From interpretability to bias/fairness and cultural understanding -> 🧵

(It looks NLP-centric at the moment, but that’s due to the current limits of my own knowledge 🙈)

go.bsky.app/G3w9LpE

This is the first time I’ve been in the Herald since high school 😂.

www.nzherald.co.nz/nz/data-scie...
